Announcing new open source contributions to the Apache Spark community for creating deep, distributed object detectors, without a single human-generated label

This post is authored by members of the Microsoft ML for Apache Spark Team: Mark Hamilton, Minsoo Thigpen, Abhiram Eswaran, Ari Green, Courtney Cochrane, Janhavi Suresh Mahajan, Karthik Rajendran, Sudarshan Raghunathan, and Anand Raman.

In today’s day and age, if data is the new oil, labelled data is the new gold.

Here at Microsoft, we often spend a lot of our time thinking about “Big Data” issues, because these are the easiest to solve with deep learning. However, we often overlook the much more ubiquitous and difficult problems that have little to no data to train with. In this work we will show how, even without any data, one can create an object detector for almost anything found on the web. This effectively bypasses the costly and resource-intensive processes of curating datasets and hiring human labelers, allowing you to jump directly to intelligent models for classification and object detection, completely in silico.

We apply this technique to help monitor and protect the endangered population of snow leopards.

This week at the Spark + AI Summit in Europe, we are excited to share with the community the following additions to the Microsoft ML for Apache Spark library that make this workflow easy to replicate at massive scale using Apache Spark and Azure Databricks:

- Bing on Spark: Makes it easier to build applications on Spark using Bing search.

- LIME on Spark: Makes it easier to deeply understand the output of Convolutional Neural Network (CNN) models trained using SparkML.

- High-performance Spark Serving: Innovations that enable ultra-fast, low-latency serving using Spark.

We illustrate how to use these capabilities with the Snow Leopard Conservation use case, where machine learning is a key ingredient in building powerful image classification models for identifying snow leopards in images.

Use Case – The Challenges of Snow Leopard Conservation

Snow leopards are facing a crisis. Their numbers are dwindling as a result of poaching and mining, yet little is known about how to best protect them. Part of the challenge is that there are only about four thousand to seven thousand individual animals within a potential 1.5 million square kilometer range. In addition, snow leopard territory is in some of the most remote, rugged mountain ranges of central Asia, making it nearly impossible to get there without backpacking gear.

Figure 1: Our team’s second flat tire on the way to snow leopard territory.

To truly understand the snow leopard and what influences its survival rates, we need more data. To this end, we have teamed up with the Snow Leopard Trust to help them gather and understand snow leopard data. “Since visual surveying is not an option, biologists deploy motion-sensing cameras in snow leopard habitats that capture images of snow leopards, prey, livestock, and anything else that moves,” explains Rhetick Sengupta, Board President of Snow Leopard Trust. “They then need to sort through the images to find the ones with snow leopards in order to learn more about their populations, behavior, and range.” Over the years these cameras have produced over 1 million images. The Trust can use this information to establish new protected areas and improve their community-based conservation efforts.

However, the problem with camera-trap data is that the biologists must sort through all the images to distinguish photos of snow leopards and their prey from photos that have neither. “Manual image sorting is a time-consuming and costly process,” Sengupta says. “In fact, it takes around 300 hours per camera survey. In addition, data collection practices have changed over the years.”

We have worked to help automate the Trust’s snow leopard detection pipeline with Microsoft Machine Learning for Apache Spark (MMLSpark). This includes both classifying snow leopard images and extracting detected leopards so they can be identified and matched against a large database of known leopard individuals.

Step 1: Gathering Data

Gathering data is often the hardest part of the machine learning workflow. Without a large, high-quality dataset, a project is likely never to get off the ground. However, for many tasks, creating a dataset is incredibly difficult, time consuming, or downright impossible. We were fortunate to work with the Snow Leopard Trust, who have already gathered 10 years of camera trap data and have meticulously labelled thousands of images. However, the Trust cannot release this data to the public, due to risks from poachers who use image metadata to pinpoint leopards in the wild. As a result, if you are looking to create your own snow leopard analysis, you need to start from scratch.

Figure 2: Examples of camera trap images from the Snow Leopard Trust’s dataset.

Announcing: Bing on Spark

Faced with the challenge of creating a snow leopard dataset from scratch, it is hard to know where to start. Amazingly, we don’t need to go to Kyrgyzstan and set up a network of motion-sensitive cameras. We already have access to one of the richest sources of human knowledge on the planet: the internet. The tools that we have created over the past two decades to index the internet’s content not only help humans learn about the world but can also help the algorithms we create do the same.

Today we are releasing an integration between the Azure Cognitive Services and Apache Spark that enables querying Bing and many other intelligent services at massive scales. This integration is part of the Microsoft ML for Apache Spark (MMLSpark) open source project. The Cognitive Services on Spark make it easy to integrate intelligence into your existing Spark and SQL workflows on any cluster using Python, Scala, Java, or R. Under the hood, each Cognitive Service on Spark leverages Spark’s massive parallelism to send streams of requests up to the cloud. In addition, the integration between SparkML and the Cognitive Services makes it easy to compose services with other models from the SparkML, CNTK, TensorFlow, and LightGBM ecosystems.

Figure 3: Results for Bing snow leopard image search.

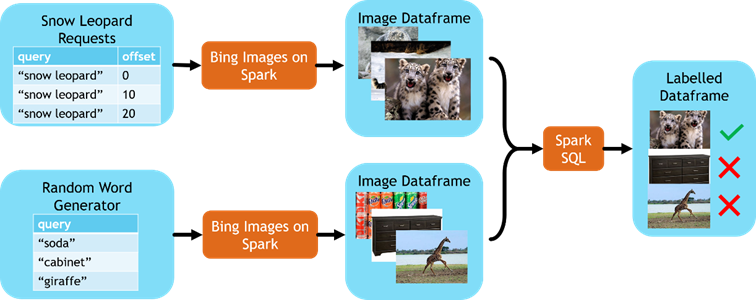

We can use Bing on Spark to quickly create our own machine learning datasets featuring anything we can find online. Creating a custom snow leopard dataset takes only two distributed queries. The first query creates the “positive class” by pulling the first 80 pages of the “snow leopard” image results. The second query creates the “negative class” to compare our leopards against. We can perform this search in two different ways, and we plan to explore them both in upcoming posts. Our first option is to search for images that look like the kinds of images we will be getting out in the wild, such as empty mountainsides, mountain goats, foxes, grass, etc. Our second option draws inspiration from Noise Contrastive Estimation, a mathematical technique used frequently in the word embedding literature. The basic idea behind noise contrastive estimation is to classify our snow leopards against a large and diverse dataset of random images. Our algorithm should not only be able to tell a snow leopard from an empty image, but also from a wide variety of other objects in the visual world. Unfortunately, Bing Images does not have a random image API we could use to make this dataset. Instead, we can use random queries as a surrogate for random sampling from Bing. Generating thousands of random queries is surprisingly easy with one of the multitude of online random word generators. Once we generate our words, we just need to load them into a distributed Spark DataFrame and pass them to Bing Image Search on Spark to grab the first 10 images for each random query.
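The random-query idea can be sketched in a few lines of plain Python. This is only an illustration under stated assumptions: the word list, the `make_random_queries` helper, and the fixed seed are hypothetical, and in the real workflow the resulting rows would be loaded into a Spark DataFrame and sent to Bing Image Search on Spark.

```python
import random

# Hypothetical vocabulary standing in for an online random word generator.
WORDS = ["river", "bicycle", "lantern", "harbor", "violin",
         "desert", "ladder", "market", "tunnel", "orchard"]

def make_random_queries(n_queries, words_per_query=2, seed=0):
    """Build n_queries search strings by sampling words without replacement."""
    rng = random.Random(seed)  # fixed seed keeps the sketch reproducible
    return [" ".join(rng.sample(WORDS, words_per_query))
            for _ in range(n_queries)]

# Each row pairs a query with the class label its images would receive:
# 1 for the positive class, 0 for the "random image" negative class.
positive_rows = [("snow leopard", 1)]
negative_rows = [(q, 0) for q in make_random_queries(5)]
query_rows = positive_rows + negative_rows
```

Keeping the label next to the query means every image returned for that query can inherit its class without any manual labelling.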

With these two datasets in hand, we can add labels, stitch them together, dedupe, and download the image bytes to the cluster. SparkSQL parallelizes this process and can speed up the download by orders of magnitude. It only takes a few seconds on a large Azure Databricks cluster to pull thousands of images from around the world. Additionally, once the images are downloaded, we can easily preprocess and manipulate them with tools like OpenCV on Spark.
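As a rough single-machine sketch of the "label, stitch, dedupe" step, the function below merges two URL lists into one labelled dataset. The helper name and the `example.com` URLs are placeholders, and dropping URLs that appear in both classes is our own assumption about how to handle conflicts; on a cluster the same logic would be expressed with DataFrame operations.

```python
def build_labelled_dataset(positive_urls, negative_urls):
    """Label, merge, and dedupe two lists of image URLs.

    A URL that appears in both classes is ambiguous, so it is dropped.
    """
    ambiguous = set(positive_urls) & set(negative_urls)
    seen = set()
    dataset = []
    for label, urls in ((1, positive_urls), (0, negative_urls)):
        for url in urls:
            if url in seen or url in ambiguous:
                continue  # skip repeats and conflicting labels
            seen.add(url)
            dataset.append({"url": url, "label": label})
    return dataset

# Placeholder URLs, not real search results.
pos = ["http://example.com/a.jpg", "http://example.com/b.jpg",
       "http://example.com/a.jpg"]
neg = ["http://example.com/c.jpg", "http://example.com/b.jpg"]
dataset = build_labelled_dataset(pos, neg)
```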

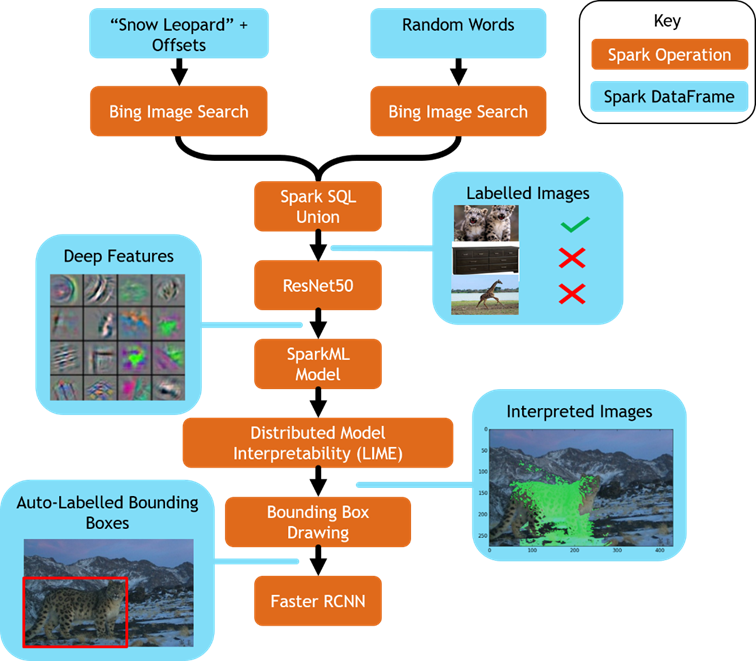

Figure 4: Diagram showing how to create a labelled dataset for snow leopard classification using Bing on Spark.

Step 2: Creating a Deep Learning Classifier

Now that we have a labelled dataset, we can begin thinking about our model. Convolutional neural networks (CNNs) are today’s state-of-the-art statistical models for image analysis. They appear in everything from driverless cars and facial recognition systems to image search engines. To build our deep convolutional network, we used MMLSpark, which provides easy-to-use distributed deep learning with the Microsoft Cognitive Toolkit on Spark.

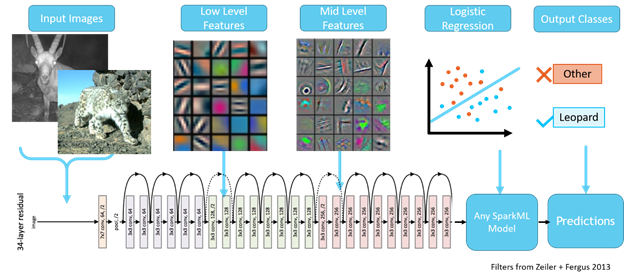

MMLSpark makes it especially easy to perform distributed transfer learning, a deep learning technique that mirrors how humans learn new tasks. When we learn something new, like classifying snow leopards, we don’t start by re-wiring our entire brain. Instead, we rely on a wealth of prior knowledge gained over our lifetimes. We only need a few examples, and we quickly become high-accuracy snow leopard detectors. Amazingly, transfer learning creates networks with similar behavior. We begin by using a Deep Residual Network that has been trained on millions of generic images. Next, we cut off a few layers of this network and replace them with a SparkML model, like Logistic Regression, to learn a final mapping from deep features to snow leopard probabilities. As a result, our model leverages its previous knowledge in the form of intelligent features and can adapt itself to the task at hand with the final SparkML model. Figure 5 shows a schematic of this architecture.
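The structure of transfer learning, a frozen featurizer followed by a small trainable head, can be shown with a toy example in plain Python. Everything here is a stand-in: `frozen_features` is a hypothetical two-number "featurizer" where a real system would use a truncated deep network, and the logistic-regression head is trained with simple gradient descent where the workflow above uses SparkML.

```python
import math

def frozen_features(image):
    """Stand-in for a pre-trained network with its last layers removed.

    Here an "image" is a list of pixel intensities and the features are
    its mean and its max; a real featurizer would be a deep CNN.
    """
    return [sum(image) / len(image), max(image)]

def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))

def train_logistic_head(features, labels, lr=0.5, epochs=2000):
    """Fit only the final logistic-regression layer by gradient descent."""
    weights, bias = [0.0] * len(features[0]), 0.0
    for _ in range(epochs):
        for x, y in zip(features, labels):
            error = sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias) - y
            weights = [w - lr * error * xi for w, xi in zip(weights, x)]
            bias -= lr * error
    return weights, bias

def predict_proba(image, weights, bias):
    """Probability of the positive class for one image."""
    x = frozen_features(image)
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)

# Toy data: bright "images" are leopards (1), dark ones are not (0).
images = [[0.9, 0.8, 0.7], [0.8, 0.9, 1.0], [0.1, 0.2, 0.0], [0.2, 0.1, 0.3]]
labels = [1, 1, 0, 0]
weights, bias = train_logistic_head([frozen_features(im) for im in images], labels)
```

Only `weights` and `bias` are learned; the featurizer never changes, which is why a handful of examples is enough to train the head.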

With MMLSpark, it’s also easy to add improvements to this basic architecture like dataset augmentation, class balancing, quantile regression with LightGBM on Spark, and ensembling. To learn more, explore our journal paper on this work, or try the example on our website.

It’s important to remember that our algorithm can learn from data sourced entirely from Bing. It did not need hand-labeled data, and this method is applicable to almost any domain where an image search engine can find your images of interest.

Figure 5: A diagram of transfer learning with ResNet50 on Spark.

Step 3: Creating an Object Detection Dataset with Distributed Model Interpretability

At this point, we have shown how to create a deep image classification system that leverages Bing to eliminate the need for labelled data. Classification systems are incredibly useful for counting the number of sightings. However, classifiers tell us nothing about where the leopard is in the image; they only return a probability that a leopard is in an image. What might seem like a subtle distinction can really make a difference in an end-to-end application. For example, knowing where the leopard is can help humans quickly determine whether the label is correct. It can also be helpful for situations where there might be more than one leopard in the frame. Most importantly for this work, to understand how many individual leopards remain in the wild, we need to cross-match individual leopards across multiple cameras and locations. The first step in this process is cropping the leopard photos so that we can use wildlife matching algorithms like HotSpotter.

Ordinarily, we would need labels to train an object detector, that is, painstakingly drawn bounding boxes around each leopard. We could then train an object detection network to learn to reproduce these labels. Unfortunately, the images we pull from Bing have no such bounding boxes attached to them, making this task seem impossible.

At this point we are so close, yet so far. We can create a system to determine whether a leopard is in the image, but not where the leopard is. Luckily, our bag of machine learning tricks is not yet empty. It would be preposterous if our deep network could not locate the leopard. How could anything reliably know that there is a snow leopard in the image without seeing it directly? Sure, the algorithm could focus on aggregate image statistics like the background or the lighting to make an educated guess, but a good leopard detector should know a leopard when it sees it. If our model understands and uses this information, the question is: “How do we peer into our model’s mind to extract this information?”

Luckily, Marco Tulio Ribeiro and a team of researchers at the University of Washington have created a method called LIME (Local Interpretable Model-Agnostic Explanations) for explaining the classifications of any image classifier. This method allows us to ask our classifier a series of questions that, when studied in aggregate, tell us where the classifier is looking. What is most exciting about this method is that it makes no assumptions about the kind of model under investigation. You can explain your own deep network, a proprietary model like those found in the Microsoft Cognitive Services, or even a (very patient) human classifier. This makes it widely applicable not just across models, but also across domains.

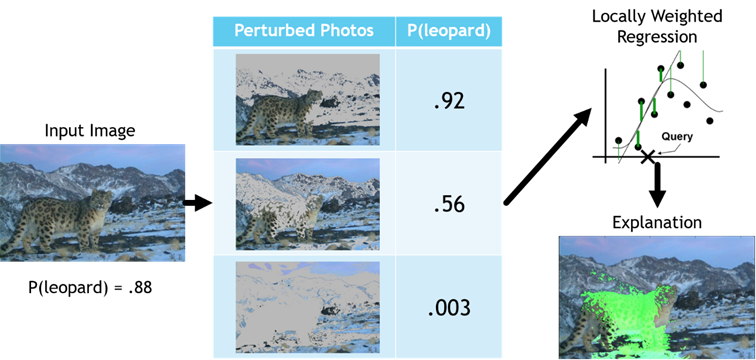

Figure 6: Diagram showing the process for interpreting an image classifier.

Figure 6 shows a visual representation of the LIME process. First, we take our original image and break it into “interpretable components” called “superpixels”. More specifically, superpixels are clusters that group together pixels with a similar color and location. We then take our original image and randomly perturb it by “turning off” random superpixels. This results in thousands of new images that have parts of the leopard obscured. We can then feed these perturbed images through our deep network to see how our perturbations affect our classification probabilities. These fluctuations in model probabilities help point us to the superpixels of the image that are most important for the classification. More formally, we can fit a linear model to a new dataset where the inputs are binary vectors of superpixel on/off states, and the targets are the probabilities that the deep network outputs for each perturbed image. The learned linear model weights then show us which superpixels are important to our classifier. To extract an explanation, we just need to look at the most important superpixels. In our analysis, we use those that are in the top ~80% of superpixel importances.
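The linear-model step can be illustrated on a toy problem in plain Python. This is a simplified sketch, not the LIME on Spark implementation: `toy_classifier` is a hypothetical stand-in for the deep network, the image has only five superpixels so we can enumerate every on/off mask where real LIME would sample thousands at random, and we use ordinary least squares without LIME's locality weighting or regularization.

```python
from itertools import product

def toy_classifier(mask):
    """Hypothetical stand-in for the deep network's leopard probability.

    The "leopard" occupies superpixels 1 and 3, so hiding them lowers the score.
    """
    return 0.05 + 0.6 * mask[1] + 0.3 * mask[3]

def solve_linear_system(a, b):
    """Solve a x = b by Gauss-Jordan elimination with partial pivoting."""
    n = len(b)
    aug = [row[:] + [rhs] for row, rhs in zip(a, b)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(aug[r][col]))
        aug[col], aug[pivot] = aug[pivot], aug[col]
        for r in range(n):
            if r != col:
                factor = aug[r][col] / aug[col][col]
                aug[r] = [x - factor * y for x, y in zip(aug[r], aug[col])]
    return [aug[i][n] / aug[i][i] for i in range(n)]

def lime_weights(classifier, n_superpixels):
    """Fit probability ~ intercept + sum(weight_j * mask_j) by least squares."""
    masks = list(product([0, 1], repeat=n_superpixels))  # every on/off pattern
    rows = [[1.0] + [float(m) for m in mask] for mask in masks]  # leading 1 = intercept
    targets = [classifier(mask) for mask in masks]
    dim = n_superpixels + 1
    # Normal equations: (X^T X) beta = X^T y
    xtx = [[sum(r[i] * r[j] for r in rows) for j in range(dim)] for i in range(dim)]
    xty = [sum(r[i] * t for r, t in zip(rows, targets)) for i in range(dim)]
    return solve_linear_system(xtx, xty)[1:]  # drop the intercept

weights = lime_weights(toy_classifier, n_superpixels=5)
ranked = sorted(range(len(weights)), key=lambda j: weights[j], reverse=True)
```

Here `ranked` orders the superpixels from most to least important, so taking its leading entries gives the explanation for this toy image.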

LIME gives us a way to peer into our model and determine the exact pixels it is leveraging to make its predictions. For our leopard classifier, these pixels often directly highlight the leopard in the frame. This not only gives us confidence in our model, but also provides us with a way to generate richer labels. LIME allows us to refine our classifications into bounding boxes for object detection by drawing rectangles around the important superpixels. In our experiments, the results were strikingly close to what a human would draw around the leopard.
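Drawing a rectangle around the important superpixels amounts to taking the minimum and maximum row and column of their pixels. The sketch below assumes a segmentation stored as a 2D grid of superpixel ids; the `bounding_box` helper and the toy grid are illustrative, not part of MMLSpark.

```python
def bounding_box(segments, selected):
    """Return (top, left, bottom, right), inclusive, around the selected superpixels.

    segments is a 2D grid of superpixel ids, one per pixel.
    Returns None when no pixel belongs to a selected superpixel.
    """
    coords = [(r, c)
              for r, row in enumerate(segments)
              for c, seg_id in enumerate(row)
              if seg_id in selected]
    if not coords:
        return None
    rows = [r for r, _ in coords]
    cols = [c for _, c in coords]
    return (min(rows), min(cols), max(rows), max(cols))

# A 4x6 toy image split into superpixels 0..3.
segments = [
    [0, 0, 1, 1, 1, 2],
    [0, 0, 1, 1, 2, 2],
    [0, 3, 3, 1, 2, 2],
    [3, 3, 3, 3, 2, 2],
]
box = bounding_box(segments, selected={1, 3})
```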

Figure 7: LIME pixels tracking a leopard as it moves through the mountains.

Announcing: LIME on Spark

LIME has amazing potential to help users understand their models and even automatically create object detection datasets. However, LIME’s major drawback is its steep computational cost. To create an interpretation for just one image, we need to sample thousands of perturbed images, pass them all through our network, and then train a linear model on the results. If it takes 1 hour to evaluate your model on a dataset, then it would take at least 50 days of computation to convert these predictions to interpretations. To help make this process feasible for large datasets, we are releasing a distributed implementation of LIME as part of MMLSpark. This will enable users to quickly interpret any SparkML image classifier, including those backed by deep network frameworks like CNTK or TensorFlow. This makes complex workloads like the one described possible in only a few lines of MMLSpark code. If you would like to try the code, please see our example notebook for LIME on Spark.
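The 50-day estimate follows from simple arithmetic once a per-image sample count is fixed. The figure of 1,200 perturbed samples per image used below is our own assumption, chosen because it reproduces the estimate; the text above only says “thousands”, which is why 50 days is a lower bound.

```python
def lime_compute_days(base_hours, samples_per_image):
    """Days needed if every image must be scored once per perturbed sample."""
    return base_hours * samples_per_image / 24.0

# 1 hour for a single pass over the dataset, 1,200 perturbations per image.
days = lime_compute_days(base_hours=1.0, samples_per_image=1200)
```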

Figure 8: Left: Outline of most important LIME superpixels. Right: Example of human-labeled bounding box (blue) versus the LIME output bounding box (yellow).

Step 4: Transferring LIME’s Knowledge into a Deep Object Detector

By combining our deep classifier with LIME, we have created a dataset of leopard bounding boxes. Furthermore, we accomplished this without having to manually classify or label any images with bounding boxes. Bing Images, transfer learning, and LIME have done all the hard work for us. We can now use this labelled dataset to learn a dedicated deep object detector capable of approximating LIME’s outputs at a 1000x speedup. Finally, we can deploy this fast object detector as a web service, phone app, or real-time streaming application for the Snow Leopard Trust to use.

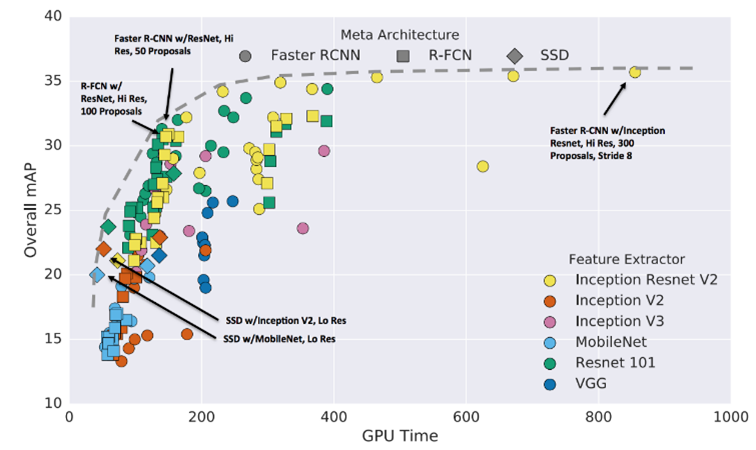

To build our object detector, we used the TensorFlow Object Detection API. We again used deep transfer learning to fine-tune a pre-trained Faster R-CNN object detector. This detector was pre-trained on the Microsoft Common Objects in Context (COCO) object detection dataset. Just like transfer learning for deep image classifiers, working with an already intelligent object detector dramatically improves performance compared to learning from scratch. In our analysis we optimized for accuracy, so we decided to use a Faster R-CNN network with an Inception ResNet v2 backbone. Figure 9 shows speeds and performances of several network architectures; Faster R-CNN + Inception ResNet v2 models tend to cluster towards the high-accuracy side of the plot.

Figure 9: Speed/accuracy tradeoffs for modern convolutional object detectors. (Source: Google Research.)

Results

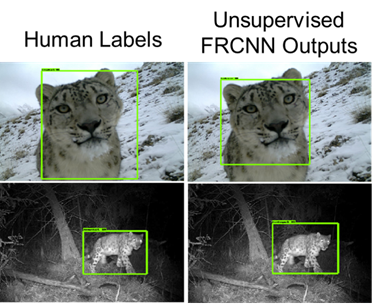

We found that Faster R-CNN was able to reliably reproduce LIME’s outputs in a fraction of the time. Figure 10 shows several standard images from the Snow Leopard Trust’s dataset. On these images, Faster R-CNN’s outputs directly capture the leopard in the frame and match near perfectly with human-curated labels.

Figure 10: A comparison of human-labeled images (left) and the outputs of the final Faster R-CNN trained on LIME predictions (right).

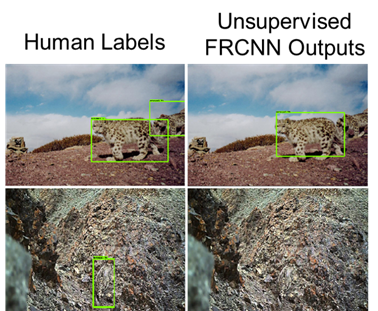

Figure 11: A comparison of difficult human-labeled images (left) and the outputs of the final Faster R-CNN trained on LIME predictions (right).

However, some images still pose challenges to this method. In Figure 11, we examine several errors made by the object detector. In the top image, there are two discernible leopards in the frame; however, Faster R-CNN is only able to detect the larger leopard. This is due to the method used to convert LIME outputs to bounding boxes. More specifically, we use a simple method that bounds all selected superpixels with a single rectangle. As a result, our bounding box dataset has at most one box per image. To refine this procedure, one could potentially cluster the superpixels to identify whether there is more than one object in the frame, then draw the bounding boxes. Additionally, some leopards are difficult to spot due to their camouflage, and they slip by the detector. Part of this effect might be due to anthropic bias in Bing Search. Specifically, Bing Image Search returns only the clearest pictures of leopards, and these photos are much easier than your average camera trap photo. To mitigate this effect, one could engage in rounds of hard negative mining, augment the Bing data with hard-to-see leopards, and upweight those examples that show difficult-to-spot leopards.
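One possible way to cluster the selected superpixels, which we have not evaluated, is to group their pixels into connected regions and draw one box per region. The sketch below uses 4-connected components found by breadth-first search; the choice of connected components as the clustering method, the `boxes_per_cluster` helper, and the toy grid are all assumptions made for illustration.

```python
from collections import deque

def boxes_per_cluster(important):
    """Group 4-connected important pixels and return one inclusive box per group.

    important is a 2D grid of booleans (True = pixel lies in a selected superpixel).
    Each box is (top, left, bottom, right).
    """
    height, width = len(important), len(important[0])
    visited = [[False] * width for _ in range(height)]
    boxes = []
    for r0 in range(height):
        for c0 in range(width):
            if not important[r0][c0] or visited[r0][c0]:
                continue
            # Breadth-first search over one connected region.
            queue = deque([(r0, c0)])
            visited[r0][c0] = True
            top, left, bottom, right = r0, c0, r0, c0
            while queue:
                r, c = queue.popleft()
                top, bottom = min(top, r), max(bottom, r)
                left, right = min(left, c), max(right, c)
                for nr, nc in ((r + 1, c), (r - 1, c), (r, c + 1), (r, c - 1)):
                    if (0 <= nr < height and 0 <= nc < width
                            and important[nr][nc] and not visited[nr][nc]):
                        visited[nr][nc] = True
                        queue.append((nr, nc))
            boxes.append((top, left, bottom, right))
    return boxes

# Two separate blobs, standing in for two leopards in one frame.
grid = [
    [1, 1, 0, 0, 0, 0],
    [1, 1, 0, 0, 0, 0],
    [0, 0, 0, 0, 1, 1],
    [0, 0, 0, 1, 1, 1],
]
important = [[bool(v) for v in row] for row in grid]
boxes = boxes_per_cluster(important)
```

A single rectangle around all selected pixels would span the whole frame here, whereas the per-region boxes keep the two blobs apart.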

Step 5: Deployment as a Web Service

The final stage in our project is to deploy our trained object detector so that the Snow Leopard Trust can get model predictions from anywhere in the world.

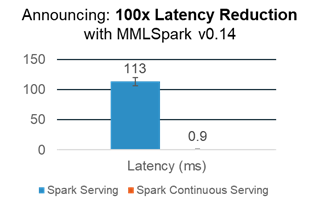

Announcing: Sub-millisecond Latency with Spark Serving

Today we are excited to announce a new platform for deploying Spark computations as distributed web services. This framework, called Spark Serving, dramatically simplifies the serving process in Python, Scala, Java and R. It provides ultra-low-latency services backed by a distributed and fault-tolerant Spark cluster. Under the hood, Spark Serving takes care of spinning up and managing web services on each node of your Spark cluster. As part of the release of MMLSpark v0.14, Spark Serving saw a 100-fold latency reduction and can now handle responses within a single millisecond.

Figure 12: Spark Serving latency comparison.

We can use this framework to take our deep object detector trained with Horovod on Spark, and deploy it with only a few lines of code. To try deploying a SparkML model as a web service for yourself, please see our notebook example.

Future Work

The next step of this project is to use this leopard detector to create a global database of individual snow leopards and their sightings across locations. We plan to use a tool called HotSpotter to automatically identify individual leopards using their uniquely patterned spotted fur. With this information, researchers at the Snow Leopard Trust can get a much better sense of leopard behavior, habitat, and movement. Additionally, identifying individual leopards helps researchers understand population numbers, which are critical for justifying snow leopard protections.

Conclusion

Through this project we have seen how new open source computing tools such as the Cognitive Services on Spark, deep transfer learning, distributed model interpretability, and the TensorFlow Object Detection API can work together to pull a domain-specific object detector directly from Bing. We have also released three new software suites: the Cognitive Services on Spark, Distributed Model Interpretability, and Spark Serving, to make this analysis simple and performant on Spark clusters like Azure Databricks.

To recap, our analysis consisted of the following main steps:

- We gathered a classification dataset using Bing on Spark.

- We trained a deep classifier using transfer learning with CNTK on Spark.

- We interpreted this deep classifier using LIME on Spark to get regions of interest and bounding boxes.

- We learned a deep object detector using transfer learning that recreates LIME’s outputs at a fraction of the cost.

- We deployed the model as a distributed web service with Spark Serving.

Here is a graphical representation of this analysis:

Figure 13: Overview of the full architecture described in this blog post.

Using Microsoft ML for Apache Spark, users can easily follow in our footsteps and repeat this analysis with their own custom data or Bing queries. We have published this work as open source and invite others to try it for themselves, give feedback, and help us advance the field of distributed unsupervised learning.

We have applied this method to help protect and monitor the endangered snow leopard population, but we made no assumptions throughout this blog about the type of data used. Within a few hours we were able to modify this workflow to create a gas station fire detection network for Shell Energy with similar success.

Mark Hamilton, for the MMLSpark Team

Resources: