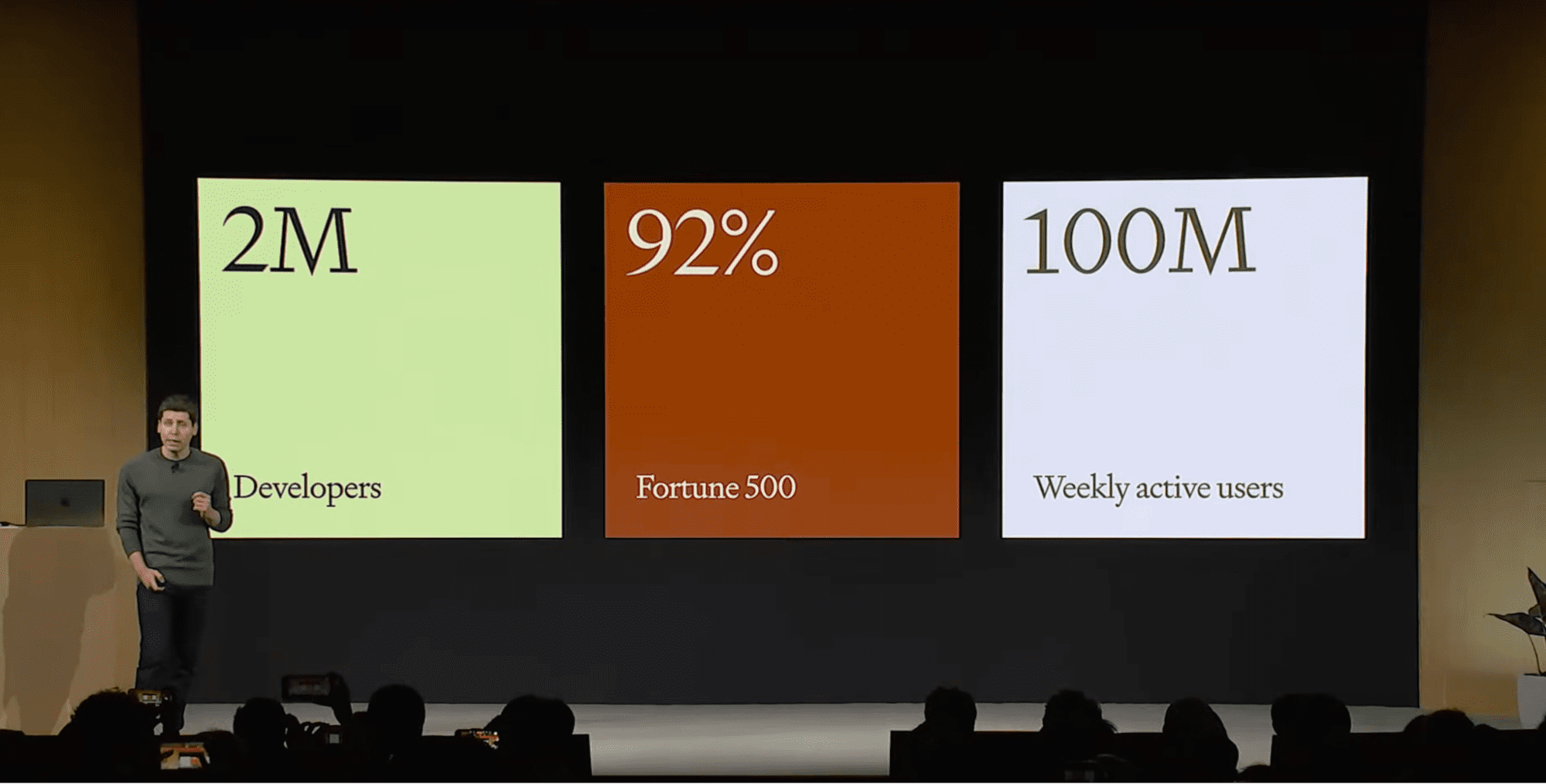

Sam Altman, OpenAI’s CEO, presents product usage numbers at OpenAI Developer Day in October 2023. OpenAI considers three customer segments: developers, businesses, and general consumers. Link: https://www.youtube.com/watch?v=U9mJuUkhUzk&t=120s

At OpenAI Developer Day in October 2023, Sam Altman, OpenAI’s CEO, showed a slide on product usage across three different customer segments: developers, businesses, and general consumers.

In this article, we'll focus on the developer segment. We’ll cover what a generative AI developer does, what tools you need to master for this job, and how to get started.

While a few companies are dedicated to making generative AI products, most generative AI developers are based in other companies where this hasn’t been the traditional focus.

The reason for this is that generative AI has uses that apply to a wide range of businesses. Four common uses of generative AI apply to most businesses.

Chatbots

Image generated by DALL·E 3

While chatbots have been mainstream for more than a decade, the majority of them have been awful. Typically, the most common first interaction with a chatbot is to ask it if you can speak to a human.

The advances in generative AI, particularly large language models and vector databases, mean that that’s no longer true. Now that chatbots can be pleasant for customers to use, every company is busy (or at least should be busy) scrambling to upgrade them.

The article Impact of generative AI on chatbots from MIT Technology Review has an overview of how the world of chatbots is changing.

Semantic search

Search is used in all kinds of places, from documents to shopping websites to the internet itself. Traditionally, search engines make heavy use of keywords, which creates the problem that the search engine needs to be programmed to be aware of synonyms.

For example, consider the case of trying to search through a marketing report to find the part on customer segmentation. You press CMD+F, type “segmentation”, and cycle through hits until you find something. Unfortunately, you miss the cases where the author of the document wrote “classification” instead of “segmentation”.

Semantic search (searching on meaning) solves this synonym problem by automatically finding text with similar meanings. The idea is that you use an embedding model (a deep learning model that converts text to a numeric vector according to its meaning), and then finding related text is just simple linear algebra. Even better, many embedding models allow other data types like images, audio, and video as inputs, letting you provide different input data types or output data types for your search.
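To make the "simple linear algebra" concrete, here is a minimal sketch of comparing embedding vectors with cosine similarity. The three-dimensional vectors are hand-written toy values chosen for illustration, not the output of any real embedding model, which would typically return hundreds or thousands of dimensions.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two vectors; values near 1.0 mean similar direction."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


# Assumption: hand-written toy vectors standing in for real embedding model output.
toy_embeddings = {
    "segmentation": [0.9, 0.1, 0.0],
    "classification": [0.8, 0.2, 0.1],
    "invoice": [0.0, 0.1, 0.9],
}

query = toy_embeddings["segmentation"]

# Rank the other words by how closely their vectors point in the same direction.
ranked = sorted(
    (word for word in toy_embeddings if word != "segmentation"),
    key=lambda word: cosine_similarity(query, toy_embeddings[word]),
    reverse=True,
)
print(ranked)
```

With these toy values, "classification" ranks above "invoice", which is the behavior a keyword search for "segmentation" would miss.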

As with chatbots, many companies are trying to improve their website search capabilities by making use of semantic search.

This tutorial on Semantic Search from Zilliz, the maker of the Milvus vector database, provides a description of the use cases.

Personalized content

Image generated by DALL·E 3

Generative AI makes content creation cheaper. This makes it possible to create tailored content for different groups of users. Some common examples are changing the marketing copy or product descriptions depending on what you know about the user. You can also provide localizations to make content more relevant for different countries or demographics.

This article on how to achieve hyper-personalization using generative AI platforms from Salesforce Chief Digital Evangelist Vala Afshar covers the benefits and challenges of using generative AI to personalize content.

Natural language interfaces to software

As software gets more complicated and fully featured, the user interface gets bloated with menus, buttons, and tools that users can't find or figure out how to use. Natural language interfaces, where users explain what they want in a sentence, can dramatically improve the usability of software. “Natural language interface” can refer to either spoken or typed ways of controlling software. The key is that you can use standard human-understandable sentences.

Business intelligence platforms are some of the earlier adopters of this, with natural language interfaces helping business analysts write less data manipulation code. The applications for this are fairly limitless, however: almost every feature-rich piece of software could benefit from a natural language interface.

This Forbes article on Embracing AI And Natural Language Interfaces from Gaurav Tewari, founder and Managing Partner of Omega Venture Partners, has an easy-to-read description of why natural language interfaces can help software usability.

Firstly, you need a generative AI model! For working with text, this means a large language model. GPT-4 is the current gold standard for performance, but there are many open-source alternatives like Llama 2, Falcon, and Mistral.

Secondly, you need a vector database. Pinecone is the most popular commercial vector database, and there are some open-source alternatives like Milvus, Weaviate, and Chroma.

In terms of programming language, the community seems to have settled around Python and JavaScript. JavaScript is important for web applications, and Python is suitable for everyone else.

On top of these, it’s helpful to use a generative AI application framework. The two main contenders are LangChain and LlamaIndex. LangChain is a broader framework that allows you to develop a wide range of generative AI applications, and LlamaIndex is more tightly focused on developing semantic search applications.

If you are making a search application, use LlamaIndex; otherwise, use LangChain.

It's worth noting that the landscape is changing very fast, and many new AI startups are appearing every week, along with new tools. If you want to develop an application, expect to change parts of the software stack more frequently than you would with other applications.

In particular, new models are appearing regularly, and the best performer for your use case is likely to change. One common workflow is to start using APIs (for example, the OpenAI API for the LLM and the Pinecone API for the vector database) since they’re quick to develop with. As your userbase grows, the cost of API calls can become burdensome, so at this point, you may want to switch to open-source tools (the Hugging Face ecosystem is a good choice here).
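One way to keep such a switch cheap is to hide the model behind a small interface of your own, so application code never depends on a particular vendor. The sketch below is only an illustration of that design idea: `TextGenerator`, `HostedApiGenerator`, and `LocalModelGenerator` are hypothetical names, and both backends return placeholder strings instead of calling a real model.

```python
from typing import Protocol


class TextGenerator(Protocol):
    """Hypothetical interface: anything that turns a prompt into text."""

    def generate(self, prompt: str) -> str: ...


class HostedApiGenerator:
    """Placeholder for a backend that would call a hosted LLM API."""

    def generate(self, prompt: str) -> str:
        return f"[hosted-api] {prompt}"


class LocalModelGenerator:
    """Placeholder for a backend that would run an open-source model locally."""

    def generate(self, prompt: str) -> str:
        return f"[local-model] {prompt}"


def summarize(text: str, generator: TextGenerator) -> str:
    # Application code depends only on the interface, not on a vendor.
    return generator.generate(f"Summarize in one sentence: {text}")


print(summarize("Quarterly sales rose.", HostedApiGenerator()))
print(summarize("Quarterly sales rose.", LocalModelGenerator()))
```

Swapping backends then means changing which object you pass in, not rewriting every call site.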

As with any new project, start simple! It's best to learn one tool at a time and later figure out how to combine them.

The first step is to set up accounts for any tools you want to use. You will need developer accounts and API keys to make use of the platforms.

A Beginner’s Guide to The OpenAI API: Hands-On Tutorial and Best Practices contains step-by-step instructions on setting up an OpenAI developer account and creating an API key.

Likewise, Mastering Vector Databases with Pinecone Tutorial: A Comprehensive Guide contains the details for setting up Pinecone.

What is Hugging Face? The AI Community’s Open-Source Oasis explains how to get started with Hugging Face.

Learning LLMs

To get started using LLMs like GPT programmatically, the simplest thing is to learn how to call the API to send a prompt and receive a message.
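As a rough sketch of what that looks like, the example below sends one prompt through the chat completions endpoint. It assumes version 1 or later of the `openai` Python package, an `OPENAI_API_KEY` environment variable, and access to a model named `gpt-4`; the helper names `build_messages` and `ask_llm` are made up for this illustration.

```python
import os


def build_messages(prompt, system="You are a helpful assistant."):
    """Build the list of role/content messages that chat models expect."""
    return [
        {"role": "system", "content": system},
        {"role": "user", "content": prompt},
    ]


def ask_llm(prompt, model="gpt-4"):
    """Send one prompt and return the text of the reply."""
    # Imported here so the rest of the file works without the package installed.
    from openai import OpenAI

    client = OpenAI()  # reads OPENAI_API_KEY from the environment
    response = client.chat.completions.create(
        model=model,  # assumption: swap in whichever model your account can use
        messages=build_messages(prompt),
    )
    return response.choices[0].message.content


# Only make a real (billable) API call when a key is configured.
if __name__ == "__main__" and os.environ.get("OPENAI_API_KEY"):
    print(ask_llm("Explain semantic search in one sentence."))
```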

While many tasks can be achieved using a single exchange back and forth with the LLM, use cases like chatbots require a long conversation. OpenAI recently announced a “threads” feature as part of their Assistants API, which you can learn about in the OpenAI Assistants API Tutorial.

This isn't supported by every LLM, so you may also need to learn how to manually manage the state of the conversation. For example, you need to decide which of the previous messages in the conversation are still relevant to the current conversation.
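A very simple policy for managing conversation state by hand is to keep the system message plus only the most recent turns. The sketch below shows that one policy under the assumption that messages are stored as role/content dictionaries; `trim_history` is a hypothetical helper, and real applications may prefer token-based limits or summarizing older turns.

```python
def trim_history(messages, max_recent=4):
    """Keep all system messages plus the most recent non-system messages."""
    system = [m for m in messages if m["role"] == "system"]
    rest = [m for m in messages if m["role"] != "system"]
    # Guard against max_recent == 0, since rest[-0:] would return everything.
    recent = rest[-max_recent:] if max_recent > 0 else []
    return system + recent


history = [{"role": "system", "content": "You answer questions about our product."}]
for turn in range(1, 5):
    history.append({"role": "user", "content": f"question {turn}"})
    history.append({"role": "assistant", "content": f"answer {turn}"})

trimmed = trim_history(history, max_recent=4)
print([m["content"] for m in trimmed])
```

Here the first two question and answer pairs are dropped, so the model only sees the system message and the last two exchanges.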

Beyond this, there's no need to stop at working only with text. You can try working with other media; for example, transcribing audio (speech to text) or generating images from text.

Learning vector databases

The simplest use case of vector databases is semantic search. Here, you use an embedding model (see Introduction to Text Embeddings with the OpenAI API) that converts the text (or other input) into a numeric vector that represents its meaning.

You then insert your embedded data (the numeric vectors) into the vector database. Searching just means writing a search query, and asking which entries in the database correspond most closely to the thing you asked for.

For example, you could take some FAQs on one of your company’s products, embed them, and upload them into a vector database. Then, you ask a question about the product, and it will return the closest matches, converting back from a numeric vector to the original text.
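The FAQ workflow can be sketched with a tiny in-memory store. Everything here is a stand-in: `ToyVectorStore` is a hypothetical class, not the API of Pinecone or any other vector database, and `toy_embed` just counts a few vocabulary words, so unlike a real embedding model it cannot recognize synonyms. The FAQ texts are invented for the example.

```python
import math
import re

# Assumption: a tiny fixed vocabulary; real embedding models learn their own features.
VOCAB = ["password", "reset", "refund", "shipping", "delivery", "account"]


def toy_embed(text):
    """Count vocabulary words in the text; a stand-in for a real embedding model."""
    words = re.findall(r"[a-z]+", text.lower())
    return [float(words.count(term)) for term in VOCAB]


def cosine_similarity(a, b):
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


class ToyVectorStore:
    """Keeps (vector, original text) pairs in a list and searches them by brute force."""

    def __init__(self):
        self._rows = []

    def add(self, text):
        self._rows.append((toy_embed(text), text))

    def query(self, question, top_k=1):
        q = toy_embed(question)
        ranked = sorted(
            self._rows,
            key=lambda row: cosine_similarity(q, row[0]),
            reverse=True,
        )
        # Return the stored original text, not the vectors.
        return [text for _, text in ranked[:top_k]]


store = ToyVectorStore()
for faq in [
    "To reset your password, open the account settings page.",
    "Refund requests are processed within five working days.",
    "Standard shipping takes three days; express delivery takes one.",
]:
    store.add(faq)

print(store.query("How do I reset my password?"))
```

A production vector database does the same job at scale, typically using approximate nearest-neighbor indexes rather than comparing the query against every row.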

Combining LLMs and vector databases

You may find that directly returning the text entry from the vector database isn't enough. Often, you want the text to be processed in a way that answers the query more naturally.

The solution to this is a technique known as retrieval augmented generation (RAG). This means that after you retrieve your text from the vector database, you write a prompt for an LLM, then include the retrieved text in your prompt (you augment the prompt with the retrieved text). Then, you ask the LLM to write a human-readable answer.

In the example of answering user questions from FAQs, you’d write a prompt with placeholders, like the following.

"""

Please answer the user's question about {product}.

---

The user's question is: {question}

---

The answer can be found in the following text: {retrieved_faq}

"""

The final step is to combine your RAG skills with the ability to manage message threads to hold a long conversation. Voila! You have a chatbot!
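As a minimal sketch of that combination, the code below fills the placeholders of a RAG prompt template and appends the result to an existing conversation as the next user message. The function name `build_rag_turn`, the product name, and the FAQ text are all hypothetical, and the retrieved text is hard-coded here where a real application would fetch it from the vector database.

```python
RAG_TEMPLATE = """
Please answer the user's question about {product}.
---
The user's question is: {question}
---
The answer can be found in the following text: {retrieved_faq}
"""


def build_rag_turn(history, product, question, retrieved_faq):
    """Return a new message list with the augmented prompt added as the user turn."""
    prompt = RAG_TEMPLATE.format(
        product=product,
        question=question,
        retrieved_faq=retrieved_faq,
    )
    # Build a new list so the caller's history is not modified in place.
    return history + [{"role": "user", "content": prompt.strip()}]


history = [{"role": "system", "content": "You are a support assistant."}]
messages = build_rag_turn(
    history,
    product="ExampleApp",  # hypothetical product name
    question="How do I reset my password?",
    # Assumption: in practice this text comes from the vector database query.
    retrieved_faq="To reset your password, open the account settings page.",
)
print(messages[-1]["content"])
```

The resulting `messages` list is what you would send to the LLM; its reply gets appended as an assistant message before the next turn.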

DataCamp has a series of nine code-alongs to teach you to become a generative AI developer. You need basic Python skills to get started, but all the AI concepts are taught from scratch.

The series is taught by top instructors from Microsoft, Pinecone, Imperial College London, and Fidelity (and me!).

You will learn about all the topics covered in this article, with six code-alongs focused on the commercial stack of the OpenAI API, the Pinecone API, and LangChain. The other three tutorials are focused on Hugging Face models.

By the end of the series, you'll be able to create a chatbot and build NLP and computer vision applications.

Richie Cotton is a Data Evangelist at DataCamp. He is the host of the DataFramed podcast, he's written 2 books on R programming, and created 10 DataCamp courses on data science that have been taken by over 700k learners.