Note: AI tools were used as an assistant in this post!

Let’s continue with the components.

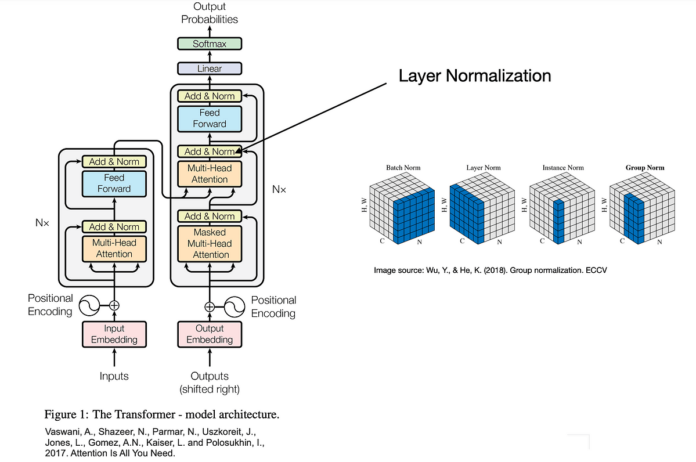

Add & Norm

After the multi-head attention block in the encoder, and also in other parts of the Transformer architecture, there is a block called Add & Norm. The Add part of this block is essentially a residual connection, similar to ResNet: it adds the input of a block to the output of that block, x + layer(x). The Norm part then applies Layer Normalization to that sum.
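As a minimal sketch of the Add step, assuming a token is a plain list of floats, the residual connection is just an element-wise sum of the input and the sub-layer's output. The function names `residual` and `double` are hypothetical; `double` is a toy stand-in for a real sub-layer such as attention or a feed-forward network.

```python
from typing import Callable, List

Vector = List[float]

def residual(x: Vector, layer: Callable[[Vector], Vector]) -> Vector:
    """Add part of Add & Norm: return x + layer(x), element by element."""
    out = layer(x)
    # The sum only makes sense if the sub-layer preserves the vector size.
    if len(out) != len(x):
        raise ValueError("layer must preserve the vector size for x + layer(x)")
    return [xi + oi for xi, oi in zip(x, out)]

# Hypothetical toy sub-layer used only for illustration.
def double(v: Vector) -> Vector:
    return [2.0 * vi for vi in v]

print(residual([1.0, 2.0, 3.0], double))  # [3.0, 6.0, 9.0]
```

The shape check reflects why Transformer sub-layers keep their output at the same size as their input (d_model): otherwise x + layer(x) would not be defined.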

Normalization is a technique used in deep learning models to stabilize the learning process and reduce the number of training epochs needed to train deep networks. The Transformer architecture uses a specific type of normalization called Layer Normalization.

Layer Normalization (LN) is applied over the last dimension (the feature dimension), in contrast to Batch Normalization, which is applied over the first dimension (the batch dimension). The last dimension holds the features, sometimes called ‘channels’; in the Transformer it is the model dimension, d_model. This means that the mean and variance are computed over all the features of a single feature vector, so every position (every token of every example) gets its own mean and variance, computed separately from all other positions.
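Here is a minimal sketch of Layer Normalization, assuming the input is a nested list shaped (batch, seq_len, d_model). Each token vector is normalized with its own mean and variance; `gamma` and `beta` stand in for the learnable scale and shift, initialized here to ones and zeros as an assumption. The names `layer_norm` and `layer_norm_batch` are hypothetical. In PyTorch, `torch.nn.LayerNorm(d_model)` performs this normalization over the trailing dimension.

```python
import math
from typing import List, Optional

def layer_norm(x: List[float], gamma: Optional[List[float]] = None,
               beta: Optional[List[float]] = None, eps: float = 1e-5) -> List[float]:
    """Normalize one feature vector (one token) using its own mean and variance."""
    d = len(x)
    mean = sum(x) / d
    var = sum((xi - mean) ** 2 for xi in x) / d  # biased variance over the features
    gamma = gamma if gamma is not None else [1.0] * d  # learnable scale (assumed init: ones)
    beta = beta if beta is not None else [0.0] * d     # learnable shift (assumed init: zeros)
    return [g * (xi - mean) / math.sqrt(var + eps) + b
            for xi, g, b in zip(x, gamma, beta)]

def layer_norm_batch(batch: List[List[List[float]]]) -> List[List[List[float]]]:
    """Apply layer_norm independently to every position of every example."""
    return [[layer_norm(token) for token in seq] for seq in batch]

out = layer_norm_batch([[[1.0, 2.0, 3.0, 4.0], [10.0, 10.0, 10.0, 10.0]]])
print(out[0][1])  # [0.0, 0.0, 0.0, 0.0]: a constant vector has zero spread
```

Note that the two tokens in the example never share statistics: the first is rescaled to roughly zero mean and unit variance, while the second, having no spread at all, maps to zeros.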

In Batch Normalization, you calculate the mean and variance for normalization across the batch dimension, so each feature is normalized to have the same distribution across the examples in a batch.
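For contrast, this is a minimal sketch of training-mode Batch Normalization on a (batch_size, num_features) nested list: each feature column is normalized using statistics gathered across all examples in the batch. The function name `batch_norm` is hypothetical, and the learnable scale and shift are omitted for brevity.

```python
import math
from typing import List

def batch_norm(batch: List[List[float]], eps: float = 1e-5) -> List[List[float]]:
    """Normalize each feature (column) with its mean and variance over the batch."""
    n = len(batch)
    num_features = len(batch[0])
    out = [[0.0] * num_features for _ in range(n)]
    for j in range(num_features):
        column = [example[j] for example in batch]  # feature j of every example
        mean = sum(column) / n
        var = sum((c - mean) ** 2 for c in column) / n
        for i in range(n):
            out[i][j] = (batch[i][j] - mean) / math.sqrt(var + eps)
    return out

print(batch_norm([[1.0, 100.0], [3.0, 300.0]]))
```

Both features end up on the same scale (roughly -1 and +1) even though the second one was a hundred times larger, because each column is normalized against the other examples in the batch.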

In Layer Normalization, you normalize across the feature dimension (or channels), and this normalization does not depend on other examples in the batch. It is computed independently for each example, which makes it better suited to tasks where the batch size can vary (such as sequence-to-sequence tasks like translation, summarization, etc.).
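The batch-independence claim can be checked directly with a small sketch: normalize the same example inside two different batches and compare. Both helpers below are hypothetical, simplified versions without learnable parameters; `layer_norm_rows` uses per-example statistics and `batch_norm_cols` uses per-feature statistics across the batch.

```python
import math
from typing import List

EPS = 1e-5

def layer_norm_rows(batch: List[List[float]]) -> List[List[float]]:
    """Normalize each example with its own statistics (over its features)."""
    out = []
    for x in batch:
        mean = sum(x) / len(x)
        var = sum((v - mean) ** 2 for v in x) / len(x)
        out.append([(v - mean) / math.sqrt(var + EPS) for v in x])
    return out

def batch_norm_cols(batch: List[List[float]]) -> List[List[float]]:
    """Normalize each feature with statistics taken across the batch."""
    n = len(batch)
    cols = list(zip(*batch))
    means = [sum(c) / n for c in cols]
    variances = [sum((v - m) ** 2 for v in c) / n for c, m in zip(cols, means)]
    return [[(v - m) / math.sqrt(s + EPS) for v, m, s in zip(x, means, variances)]
            for x in batch]

example = [1.0, 2.0, 4.0]
small_batch = [example, [0.0, 0.0, 0.0]]
other_batch = [example, [10.0, -5.0, 3.0], [7.0, 7.0, 1.0]]

# Layer norm: the first example's output is identical in both batches.
print(layer_norm_rows(small_batch)[0] == layer_norm_rows(other_batch)[0])  # True
# Batch norm: the first example's output changes with its batch neighbours.
print(batch_norm_cols(small_batch)[0] == batch_norm_cols(other_batch)[0])  # False
```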

Unlike Batch Normalization, which uses batch statistics during training but running averages of those statistics at inference, Layer Normalization performs exactly the same computation at training and test time. It does not depend on the batch of examples, and it does not limit the representational ability of the network.
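The train/test difference can be sketched for a single feature. `BatchNormSketch` is a hypothetical, simplified class: it keeps running estimates updated with `momentum=0.1` (the same default and update rule PyTorch's `BatchNorm1d` uses, although PyTorch stores the unbiased variance, whereas this sketch uses the biased one). The stateless `layer_norm` has no mode and no stored statistics.

```python
import math
from typing import List

class BatchNormSketch:
    """Simplified single-feature batch norm showing train vs. eval behaviour."""

    def __init__(self, momentum: float = 0.1, eps: float = 1e-5):
        self.running_mean = 0.0
        self.running_var = 1.0
        self.momentum = momentum
        self.eps = eps
        self.training = True

    def __call__(self, values: List[float]) -> List[float]:
        if self.training:
            n = len(values)
            mean = sum(values) / n
            var = sum((v - mean) ** 2 for v in values) / n
            # Update the running estimates that will be used at test time.
            self.running_mean = (1 - self.momentum) * self.running_mean + self.momentum * mean
            self.running_var = (1 - self.momentum) * self.running_var + self.momentum * var
        else:
            mean, var = self.running_mean, self.running_var
        return [(v - mean) / math.sqrt(var + self.eps) for v in values]

def layer_norm(x: List[float], eps: float = 1e-5) -> List[float]:
    """Stateless: identical computation in training and at test time."""
    mean = sum(x) / len(x)
    var = sum((v - mean) ** 2 for v in x) / len(x)
    return [(v - mean) / math.sqrt(var + eps) for v in x]

x = [1.0, 2.0, 3.0]
bn = BatchNormSketch()
train_out = bn(x)
bn.training = False
eval_out = bn(x)
print(train_out == eval_out)        # False: batch norm changes between modes
print(layer_norm(x) == layer_norm(x))  # True: layer norm has no mode to switch
```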

In the Transformer model, Layer Normalization is applied in the following places:

1. After each sub-layer (Self-Attention or Feed-Forward): Each sub-layer (either a multi-head self-attention mechanism or a position-wise fully connected feed-forward network) in the…