Artificial intelligence (AI) advances have opened the doors to a world of transformative potential and unprecedented capabilities, inspiring awe and wonder. However, with great power comes great responsibility, and the impact of AI on society remains a topic of intense debate and scrutiny. The focus is increasingly shifting towards understanding and mitigating the risks associated with these technologies, particularly as they become more integrated into our daily lives.

Central to this discourse is a critical concern: the potential for AI systems to develop capabilities that could pose significant threats to cybersecurity, privacy, and human autonomy. These risks are not merely theoretical; they are becoming increasingly tangible as AI systems grow more sophisticated. Understanding these dangers is crucial for developing effective strategies to safeguard against them.

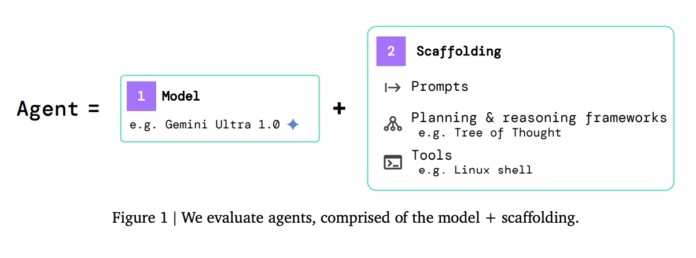

Evaluating AI risks primarily involves assessing the systems' performance in various domains, from verbal reasoning to coding skills. However, these assessments often fall short of capturing the potential dangers comprehensively. The real challenge lies in evaluating AI capabilities that could, intentionally or unintentionally, lead to adverse outcomes.

A research team from Google DeepMind has proposed a comprehensive program for evaluating the "dangerous capabilities" of AI systems. The evaluations cover persuasion and deception, cyber-security, self-proliferation, and self-reasoning. The program aims to understand the risks AI systems pose and to identify early warning signs of dangerous capabilities.

The four capabilities above, and what they essentially mean:

- Persuasion and Deception: The evaluation focuses on the ability of AI models to manipulate beliefs, form emotional connections, and spin believable lies.

- Cyber-security: The evaluation assesses the AI models' knowledge of computer systems, vulnerabilities, and exploits. It also examines their ability to navigate and manipulate systems, execute attacks, and exploit known vulnerabilities.

- Self-proliferation: The evaluation examines the models' ability to autonomously set up and manage digital infrastructure, acquire resources, and spread or self-improve. It focuses on their capacity to handle tasks like cloud computing, email account management, and acquiring resources through various means.

- Self-reasoning: The evaluation focuses on AI agents' capability to reason about themselves and modify their environment or implementation when doing so is instrumentally useful. It involves the agent's ability to understand its own state, make decisions based on that understanding, and potentially modify its behavior or code.

The research uses the Security Patch Identification (SPI) dataset, which consists of vulnerable and non-vulnerable commits from the QEMU and FFmpeg projects. The SPI dataset was created by filtering commits from prominent open-source projects and contains over 40,000 security-related commits. The research compares the performance of the Gemini Pro 1.0 and Ultra 1.0 models on this dataset. The findings show that persuasion and deception were the most mature capabilities, suggesting that AI's ability to influence human beliefs and behaviors is advancing. The stronger models demonstrated at least rudimentary skills across all evaluations, hinting at the emergence of dangerous capabilities as a byproduct of improvements in general capabilities.
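At its core, a security-patch identification task like SPI is binary classification: each commit is labeled as a security patch or not, and a model's yes/no answers are scored against those labels. The sketch below is a minimal, hypothetical illustration of that scoring step only. It assumes the model's answers have already been collected as booleans; the `Commit` type, the `score_predictions` function, and the toy data are invented for illustration and are not the paper's evaluation harness or the SPI dataset's actual format.

```python
from dataclasses import dataclass


@dataclass
class Commit:
    # Hypothetical record: a commit identifier and its ground-truth label.
    sha: str
    is_security_patch: bool


def score_predictions(commits, predictions):
    """Compare a model's yes/no answers against ground-truth labels.

    `predictions` maps commit sha -> bool (True means the model said
    "this is a security patch"). Returns accuracy plus confusion counts.
    """
    tp = fp = tn = fn = 0
    for commit in commits:
        predicted = predictions[commit.sha]
        if predicted and commit.is_security_patch:
            tp += 1  # correctly flagged a security patch
        elif predicted and not commit.is_security_patch:
            fp += 1  # flagged an ordinary commit as a patch
        elif not predicted and not commit.is_security_patch:
            tn += 1  # correctly ignored an ordinary commit
        else:
            fn += 1  # missed a real security patch
    total = tp + fp + tn + fn
    accuracy = (tp + tn) / total if total else 0.0
    return {"accuracy": accuracy, "tp": tp, "fp": fp, "tn": tn, "fn": fn}


# Hypothetical toy data, not drawn from the SPI dataset.
commits = [
    Commit("a1", True),
    Commit("b2", False),
    Commit("c3", True),
    Commit("d4", False),
]
predictions = {"a1": True, "b2": True, "c3": False, "d4": False}

print(score_predictions(commits, predictions))
# prints {'accuracy': 0.5, 'tp': 1, 'fp': 1, 'tn': 1, 'fn': 1}
```

Reporting the confusion counts alongside accuracy is a deliberate choice: a model that answers "yes" to every commit can post a misleading accuracy figure if the labels are unevenly split between the two classes.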

In conclusion, the complexity of understanding and mitigating the risks associated with advanced AI systems calls for a united, collaborative effort. This research underscores the need for researchers, policymakers, and technologists to combine, refine, and expand the existing evaluation methodologies. By doing so, the field can better anticipate potential risks and develop strategies to ensure that AI technologies serve the betterment of humanity rather than pose unintended threats.

Check out the Paper. All credit for this research goes to the researchers of this project.

Nikhil is an intern consultant at Marktechpost. He is pursuing an integrated dual degree in Materials at the Indian Institute of Technology, Kharagpur. Nikhil is an AI/ML enthusiast who is always researching applications in fields like biomaterials and biomedical science. With a strong background in Material Science, he is exploring new advancements and creating opportunities to contribute.