Nowadays, nobody would be surprised by running a deep learning model in the cloud. But the situation can be much more complicated in the edge or consumer device world, for several reasons. First, using cloud APIs requires devices to always be online. This is not a problem for a web service, but it can be a dealbreaker for a device that needs to work without Internet access. Second, cloud APIs cost money, and customers will likely not be happy to pay yet another subscription fee. Last but not least, after several years the project may be finished, the API endpoints will be shut down, and the expensive hardware will turn into a brick. This is, of course, not friendly to customers, the ecosystem, or the environment. That’s why I’m convinced that end-user hardware should be fully functional offline, without extra costs or dependence on online APIs (well, those can be optional, but not mandatory).

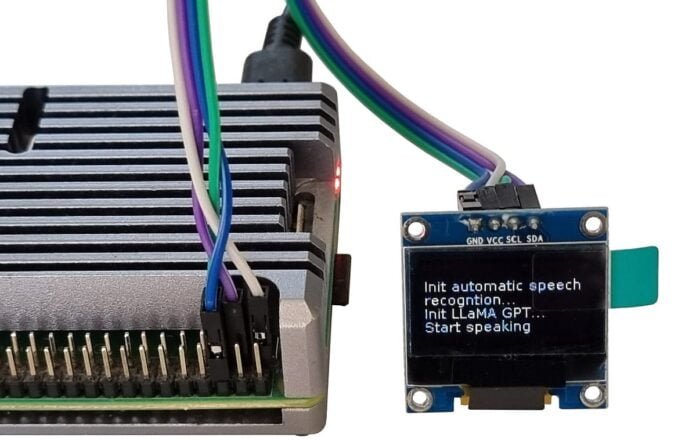

In this article, I’ll show how to run a LLaMA GPT model and automatic speech recognition (ASR) on a Raspberry Pi. That will allow us to ask the Raspberry Pi questions and get answers. And as promised, all of this will work fully offline.

Let’s get into it!

The code presented in this article is intended to run on the Raspberry Pi. However, most of the methods (except the “display” part) will also work on a Windows, OSX, or Linux laptop, so readers who don’t have a Raspberry Pi can test the code without any problems.
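Since the “display” part is the only piece tied to the Pi, one way to keep a single script portable is to detect the board at startup and enable the display code only there. The helper below is a minimal sketch, not part of the original project: it assumes that Raspberry Pi OS exposes the board name in `/proc/device-tree/model` (a NUL-terminated string such as “Raspberry Pi 4 Model B”), a file that is normally absent on Windows, OSX, and most other Linux machines. The names `is_raspberry_pi` and `USE_DISPLAY` are hypothetical.

```python
from pathlib import Path


def is_raspberry_pi(model_path: str = "/proc/device-tree/model") -> bool:
    """Return True if the device-tree model string mentions a Raspberry Pi.

    Assumption: on Raspberry Pi OS the board name is stored in
    /proc/device-tree/model; on other systems the file usually does
    not exist, and we treat that as "not a Pi".
    """
    try:
        model = Path(model_path).read_bytes()
    except OSError:
        # File missing or unreadable: most likely a laptop or desktop.
        return False
    # The string is NUL-terminated, so strip trailing zero bytes first.
    return b"Raspberry Pi" in model.rstrip(b"\x00")


# Hypothetical flag: run the display code only on the Raspberry Pi.
USE_DISPLAY = is_raspberry_pi()
```

Reading a file instead of importing a Pi-specific library means the same script runs unchanged on a laptop, where the check simply returns `False`.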

Hardware

For this project, I will be using a Raspberry Pi 4. It’s a single-board computer running Linux; it’s small and requires only 5V DC power, with no fans or active cooling.

A newer 2023 model, the Raspberry Pi 5, should be even better; according to benchmarks, it’s almost 2x faster. But it is also almost 50% more expensive, and for our test, the Model 4 is good enough.