Author: Vitalik Buterin, via the Vitalik Buterin Blog

Special thanks to the Worldcoin and Modulus Labs teams, Xinyuan Sun, Martin Koeppelmann and Illia Polosukhin for feedback and discussion.

Many people over the years have asked me a similar question: what are the intersections between crypto and AI that I consider to be the most fruitful? It’s a reasonable question: crypto and AI are the two main deep (software) technology trends of the past decade, and it just feels like there must be some kind of connection between the two. It’s easy to come up with synergies at a superficial vibe level: crypto decentralization can balance out AI centralization, AI is opaque and crypto brings transparency, AI needs data and blockchains are good for storing and tracking data. But over the years, when people would ask me to dig a level deeper and talk about specific applications, my response has been a disappointing one: “yeah there’s a few things but not that much”.

In the last three years, with the rise of much more powerful AI in the form of modern LLMs, and the rise of much more powerful crypto in the form of not just blockchain scaling solutions but also ZKPs, FHE, (two-party and N-party) MPC, I am starting to see this change. There are indeed some promising applications of AI inside of blockchain ecosystems, or AI together with cryptography, though it is important to be careful about how the AI is applied. A particular challenge is: in cryptography, open source is the only way to make something truly secure, but in AI, a model (or even its training data) being open greatly increases its vulnerability to adversarial machine learning attacks. This post will go through a classification of different ways that crypto + AI could intersect, and the prospects and challenges of each category.

A high-level summary of crypto+AI intersections from a uETH blog post. But what does it take to actually realize any of these synergies in a concrete application?

The four major categories

AI is a very broad concept: you can think of “AI” as being the set of algorithms that you create not by specifying them explicitly, but rather by stirring a big computational soup and putting in some kind of optimization pressure that nudges the soup toward producing algorithms with the properties that you want. This description should definitely not be taken dismissively: it includes the process that created us humans in the first place! But it does mean that AI algorithms have some common properties: their ability to do things that are extremely powerful, together with limits in our ability to know or understand what’s going on under the hood.

There are many ways to categorize AI; for the purposes of this post, which talks about interactions between AI and blockchains (which have been described as a platform for creating “games”), I will categorize it as follows:

- AI as a player in a game [highest viability]: AIs participating in mechanisms where the ultimate source of the incentives comes from a protocol with human inputs.

- AI as an interface to the game [high potential, but with risks]: AIs helping users to understand the crypto world around them, and to ensure that their behavior (ie. signed messages and transactions) matches their intentions and they do not get tricked or scammed.

- AI as the rules of the game [tread very carefully]: blockchains, DAOs and similar mechanisms directly calling into AIs. Think eg. “AI judges”.

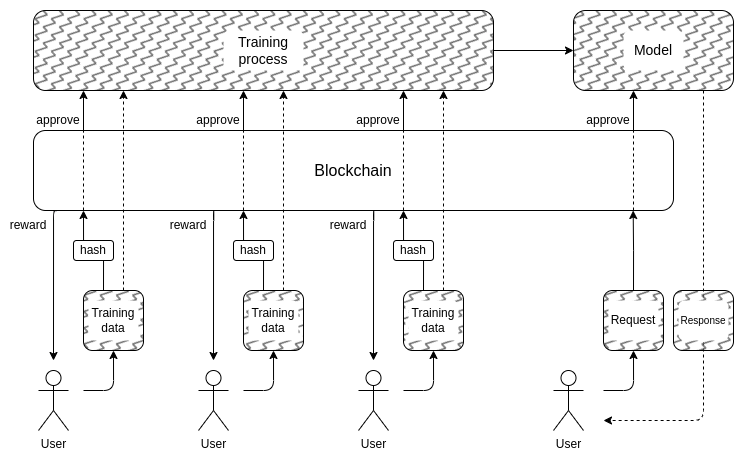

- AI as the objective of the game [longer-term but intriguing]: designing blockchains, DAOs and similar mechanisms with the goal of constructing and maintaining an AI that could be used for other purposes, using the crypto bits either to better incentivize training or to prevent the AI from leaking private data or being misused.

Let us go through these one by one.

AI as a player in a game

This is actually a category that has existed for nearly a decade, at least since on-chain decentralized exchanges (DEXes) started to see significant use. Any time there is an exchange, there is an opportunity to make money through arbitrage, and bots can do arbitrage much better than humans can. This use case has existed for a long time, even with much simpler AIs than what we have today, but ultimately it is a very real AI + crypto intersection. More recently, we have seen MEV arbitrage bots often exploiting each other. Any time you have a blockchain application that involves auctions or trading, you are going to have arbitrage bots.

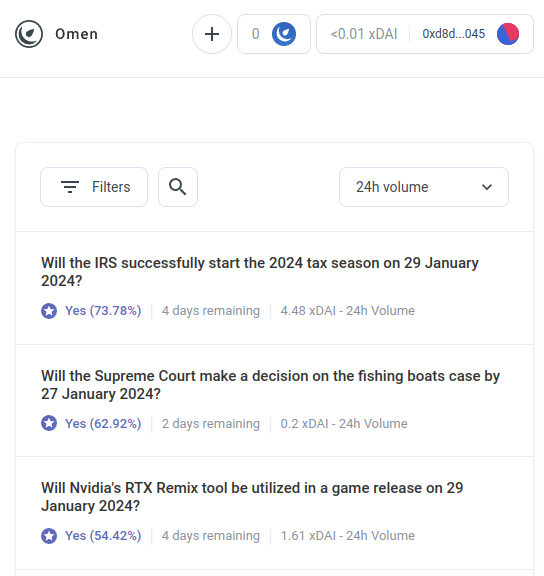

But AI arbitrage bots are only the first example of a much bigger category, which I expect will soon start to include many other applications. Meet AIOmen, a demo of a prediction market where AIs are players:

One response to this is to point to ongoing UX improvements in Polymarket or other new prediction markets, and hope that they will succeed where previous iterations have failed. After all, the story goes, people are willing to bet tens of billions on sports, so why wouldn’t people throw in enough money betting on US elections or LK99 that it starts to make sense for the serious players to start coming in? But this argument must contend with the fact that, well, previous iterations have failed to get to this level of scale (at least compared to their proponents’ dreams), and so it seems like you need something new to make prediction markets succeed. And so a different response is to point to one specific feature of prediction market ecosystems that we can expect to see in the 2020s that we did not see in the 2010s: the possibility of ubiquitous participation by AIs.

AIs are willing to work for less than $1 per hour, and have the knowledge of an encyclopedia; if that’s not enough, they can even be integrated with real-time web search capability. If you make a market, and put up a liquidity subsidy of $50, humans will not care enough to bid, but thousands of AIs will easily swarm all over the question and make the best guess they can. The incentive to do a good job on any one question may be tiny, but the incentive to make an AI that makes good predictions in general may be in the millions. Note that potentially, you don’t even need the humans to adjudicate most questions: you can use a multi-round dispute system similar to Augur or Kleros, where AIs would also be the ones participating in earlier rounds. Humans would only need to respond in those few cases where a series of escalations have taken place and large amounts of money have been committed by both sides.
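The escalation idea can be sketched in a few lines. This is only an illustration under stated assumptions, not the actual Augur or Kleros protocol: the bond-doubling schedule, the `max_ai_rounds` cutoff and every name below are hypothetical choices made for the example.

```python
from dataclasses import dataclass, field


@dataclass
class Dispute:
    """Toy escalation game: each new answer must stake double the previous bond."""
    initial_bond: float = 1.0     # assumed starting bond, in dollars
    max_ai_rounds: int = 5        # assumed cutoff before humans are called in
    answers: list = field(default_factory=list)   # (answer, bond) per round

    def next_bond(self) -> float:
        return self.initial_bond * 2 ** len(self.answers)

    def submit(self, answer: bool) -> float:
        """Post an answer (or flip the current one) by staking the required bond."""
        bond = self.next_bond()
        self.answers.append((answer, bond))
        return bond

    def needs_humans(self) -> bool:
        # Humans are consulted only after a long chain of escalations.
        return len(self.answers) > self.max_ai_rounds


dispute = Dispute()
dispute.submit(True)                    # an AI answers with a $1 bond
dispute.submit(False)                   # another AI disagrees and stakes $2
uncontested = dispute.needs_humans()    # still cheap: AIs only

for i in range(4):                      # four more flips: $4, $8, $16, $32
    dispute.submit(i % 2 == 0)
escalated = dispute.needs_humans()      # now enough money is at stake
total_staked = sum(bond for _, bond in dispute.answers)
```

The point of the doubling is that most questions settle after one or two cheap rounds, while the rare question that reaches humans already has a meaningful amount of money committed on both sides.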

This is a powerful primitive, because once a “prediction market” can be made to work on such a microscopic scale, you can reuse the “prediction market” primitive for many other kinds of questions:

- Is this social media post acceptable under [terms of use]?

- What will happen to the price of stock X (eg. see Numerai)?

- Is this account that is currently messaging me actually Elon Musk?

- Is this work submission on an online task marketplace acceptable?

- Is the dapp at https://examplefinance.community a scam?

- Is 0x1b54....98c3 really the address of the “Casinu Inu” ERC20 token?

You may notice that a lot of these ideas go in the direction of what I have called “info defense” in my earlier writings. Broadly defined, the question is: how do we help users tell apart true and false information and detect scams, without empowering a centralized authority to decide right and wrong who might then abuse that position? At a micro level, the answer can be “AI”. But at a macro level, the question is: who builds the AI? AI is a reflection of the process that created it, and so cannot avoid having biases. Hence, there is a need for a higher-level game which adjudicates how well the different AIs are doing, where AIs can participate as players in the game.

This usage of AI, where AIs participate in a mechanism where they get ultimately rewarded or penalized (probabilistically) by an on-chain mechanism that gathers inputs from humans (call it decentralized market-based RLHF?), is something that I think is really worth looking into. Now is the right time to look into use cases like this more, because blockchain scaling is finally succeeding, making “micro-” anything finally viable on-chain when it was often not before.
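One way to picture “probabilistic” rewards is random spot-checking: humans are asked about only a small fraction of questions, and AIs are paid by a scoring rule on those. The sketch below is an assumption-laden toy, not a description of any deployed mechanism: the Brier-style scoring rule, the `audit_prob` parameter and the function names are all illustrative choices.

```python
import random


def brier_reward(prediction: float, outcome: bool, scale: float = 1.0) -> float:
    """Quadratic (Brier) scoring rule, shifted so that a 50/50 guess earns 0."""
    error = (prediction - (1.0 if outcome else 0.0)) ** 2
    return scale * (0.25 - error)


def settle(predictions, human_judge, audit_prob, rng):
    """Pay an AI only on the randomly audited questions.

    predictions: {question: probability that the answer is True}
    human_judge: callable returning the human verdict for a question
    audit_prob:  chance that any given question is escalated to humans
    """
    payout = 0.0
    for question, p in predictions.items():
        if rng.random() < audit_prob:
            # Dividing by audit_prob keeps the expected reward per question
            # the same no matter how rarely humans are asked.
            payout += brier_reward(p, human_judge(question)) / audit_prob
    return payout


truth = {f"q{i}": i % 2 == 0 for i in range(1000)}
informed = {q: 0.9 if t else 0.1 for q, t in truth.items()}
careless = {q: 0.5 for q in truth}

rng = random.Random(0)
informed_pay = settle(informed, truth.get, audit_prob=0.05, rng=rng)
careless_pay = settle(careless, truth.get, audit_prob=0.05, rng=rng)
```

Here humans look at roughly 5% of the questions, yet an AI that is usually right still earns more in expectation than one that always answers 50/50, and a confidently wrong answer loses money whenever it is audited.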

A related category of applications goes in the direction of highly autonomous agents using blockchains to better cooperate, whether through payments or through using smart contracts to make credible commitments.

AI as an interface to the game

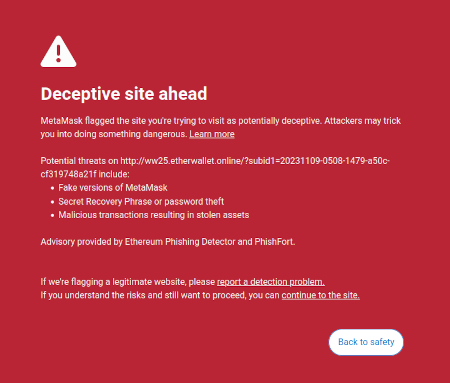

One idea that I brought up in my earlier writings is that there is a market opportunity to write user-facing software that would protect users’ interests by interpreting and identifying dangers in the online world that the user is navigating. One already-existing example of this is Metamask’s scam detection feature:

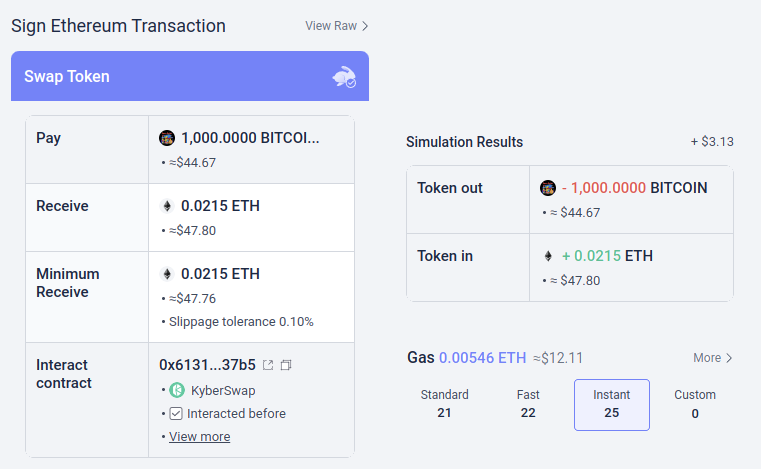

Potentially, these kinds of tools could be super-charged with AI. AI could give a much richer human-friendly explanation of what kind of dapp you are participating in, the consequences of more complicated operations that you are signing, whether or not a particular token is genuine (eg. BITCOIN is not just a string of characters, it’s the name of an actual cryptocurrency, which is not an ERC20 token and which has a price waaaay higher than $0.045, and a modern LLM would know that), and so on. There are projects starting to go all the way out in this direction (eg. the LangChain wallet, which uses AI as a primary interface). My own opinion is that pure AI interfaces are probably too risky at the moment, as they increase the risk of other kinds of errors, but AI complementing a more conventional interface is getting very viable.

There is one particular risk worth mentioning. I will get into this more in the section on “AI as the rules of the game” below, but the general issue is adversarial machine learning: if a user has access to an AI assistant inside an open-source wallet, the bad guys will have access to that AI assistant too, and so they will have unlimited opportunity to optimize their scams to not trigger that wallet’s defenses. All modern AIs have bugs somewhere, and it’s not too hard for a training process, even one with only limited access to the model, to find them.

This is where “AIs participating in on-chain micro-markets” works better: each individual AI is vulnerable to the same risks, but you’re intentionally creating an open ecosystem of dozens of people constantly iterating and improving them on an ongoing basis. Furthermore, each individual AI is closed: the security of the system comes from the openness of the rules of the game, not the internal workings of each player.

Summary: AI can help users understand what’s going on in plain language, it can serve as a real-time tutor, it can protect users from mistakes, but be warned when trying to use it directly against malicious misinformers and scammers.

AI as the rules of the game

Now, we get to the application that a lot of people are excited about, but that I think is the most risky, and where we need to tread the most carefully: what I call AIs being part of the rules of the game. This ties into excitement among mainstream political elites about “AI judges” (eg. see this article on the website of the “World Government Summit”), and there are analogs of these desires in blockchain applications. If a blockchain-based smart contract or a DAO needs to make a subjective decision (eg. is a particular work product acceptable in a work-for-hire contract? Which is the right interpretation of a natural-language constitution like the Optimism Law of Chains?), could you make an AI simply be part of the contract or DAO to help enforce these rules?

This is where adversarial machine learning is going to be an extremely tough challenge. The basic two-sentence argument why is as follows:

If an AI model that plays a key role in a mechanism is closed, you can’t verify its inner workings, and so it’s no better than a centralized application. If the AI model is open, then an attacker can download and simulate it locally, and design heavily optimized attacks to trick the model, which they can then replay on the live network.
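To see why an open model is so easy to attack, consider the simplest possible case. The sketch below assumes a hypothetical published linear “scam detector”; the weights, inputs and function names are made up for illustration. Real models are far more complex, but the same gradient-following idea (here a single fast-gradient-sign step) applies.

```python
def score(weights, bias, x):
    """Linear 'scam detector': a positive score means the input is flagged."""
    return sum(w * xi for w, xi in zip(weights, x)) + bias


def sign(v):
    return (v > 0) - (v < 0)


def whitebox_attack(weights, x, eps):
    """Shift every feature by eps against the sign of its weight.

    For a linear model the gradient of the score with respect to x is just
    `weights`, so the attacker can compute this step entirely offline.
    """
    return [xi - eps * sign(w) for w, xi in zip(weights, x)]


# Hypothetical published model and a scam input that it catches.
weights, bias = [2.0, -1.0, 0.5], -0.5
scam = [1.0, 0.2, 0.4]

# The attacker simulates locally, then replays the result on the live system.
evasive = whitebox_attack(weights, scam, eps=0.5)

flagged_before = score(weights, bias, scam) > 0
flagged_after = score(weights, bias, evasive) > 0
```

No query ever touches the live system until the attacker already knows the modified input will pass.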

Now, frequent readers of this blog (or denizens of the cryptoverse) might be getting ahead of me already, and thinking: but wait! We have fancy zero knowledge proofs and other really cool forms of cryptography. Surely we can do some crypto-magic, and hide the inner workings of the model so that attackers can’t optimize attacks, but at the same time prove that the model is being executed correctly, and was constructed using a reasonable training process on a reasonable set of underlying data!

Normally, this is exactly the type of thinking that I advocate both on this blog and in my other writings. But in the case of AI-related computation, there are two major objections:

- Cryptographic overhead: it’s much less efficient to do something inside a SNARK (or MPC or…) than it is to do it “in the clear”. Given that AI is very computationally-intensive already, is doing AI inside of cryptographic black boxes even computationally viable?

- Black-box adversarial machine learning attacks: there are ways to optimize attacks against AI models even without knowing much about the model’s internal workings. And if you hide too much, you risk making it too easy for whoever chooses the training data to corrupt the model with poisoning attacks.

Both of these are complicated rabbit holes, so let us get into each of them in turn.

Cryptographic overhead

Cryptographic gadgets, especially general-purpose ones like ZK-SNARKs and MPC, have a high overhead. An Ethereum block takes a few hundred milliseconds for a client to verify directly, but generating a ZK-SNARK to prove the correctness of such a block can take hours. The typical overhead of other cryptographic gadgets, like MPC, can be even worse. AI computation is expensive already: the most powerful LLMs can output individual words only a little bit faster than human beings can read them, not to mention the often multimillion-dollar computational costs of training the models. The difference in quality between top-tier models and the models that try to economize much more on training cost or parameter count is large. At first glance, this is a very good reason to be suspicious of the whole project of trying to add guarantees to AI by wrapping it in cryptography.

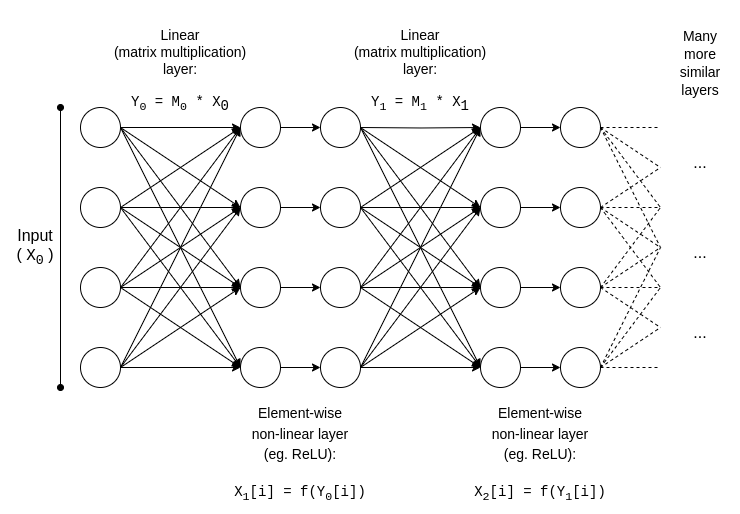

Fortunately, though, AI is a very specific type of computation, which makes it amenable to all kinds of optimizations that more “unstructured” types of computation like ZK-EVMs cannot benefit from. Let us examine the basic structure of an AI model:

An AI model mostly consists of a series of matrix multiplications interspersed with per-element non-linear operations such as the ReLU function ($y = \max(x, 0)$). Asymptotically, matrix multiplications take up most of the work: multiplying two N*N matrices takes $O(N^{2.8})$ time, whereas the number of non-linear operations is much smaller. This is really convenient for cryptography, because many forms of cryptography can do linear operations (which matrix multiplications are, at least if you encrypt the model but not the inputs to it) almost “for free”.

If you are a cryptographer, you’ve probably already heard of a similar phenomenon in the context of homomorphic encryption: performing additions on encrypted ciphertexts is really easy, but multiplications are incredibly hard and we did not figure out any way of doing it at all with unlimited depth until 2009.
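To make the linear versus non-linear split concrete, here is a small sketch that runs a toy two-layer network and counts both kinds of operation. It uses the schoolbook matrix product, which costs $N^3$ scalar multiplications rather than the roughly $N^{2.8}$ of faster Strassen-style algorithms; the layer sizes and the `ops` counter are illustrative assumptions.

```python
def matmul(a, b, counter):
    """Schoolbook matrix product; counts scalar multiplications."""
    n, m, p = len(a), len(b), len(b[0])
    out = [[0.0] * p for _ in range(n)]
    for i in range(n):
        for j in range(p):
            for k in range(m):
                out[i][j] += a[i][k] * b[k][j]
                counter["linear"] += 1
    return out


def relu(mat, counter):
    """Per-element ReLU, y = max(x, 0); counts non-linear operations."""
    counter["nonlinear"] += sum(len(row) for row in mat)
    return [[max(v, 0.0) for v in row] for row in mat]


def forward(x, layers, counter):
    """Alternate matrix multiplication and ReLU, one pair per layer."""
    for w in layers:
        x = relu(matmul(x, w, counter), counter)
    return x


n = 32
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
ops = {"linear": 0, "nonlinear": 0}
forward(identity, [identity, identity], ops)
ratio = ops["linear"] / ops["nonlinear"]
```

With N = 32 the multiplications already outnumber the ReLU evaluations by a factor of N, and the gap keeps growing as the matrices get larger.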

For ZK-SNARKs, the equivalent is protocols like this one from 2013, which show a less than 4x overhead on proving matrix multiplications. Unfortunately, the overhead on the non-linear layers still ends up being significant, and the best implementations in practice show overhead of around 200x. But there is hope that this can be greatly decreased through further research; see this presentation from Ryan Cao for a recent approach based on GKR, and my own simplified explanation of how the main component of GKR works.

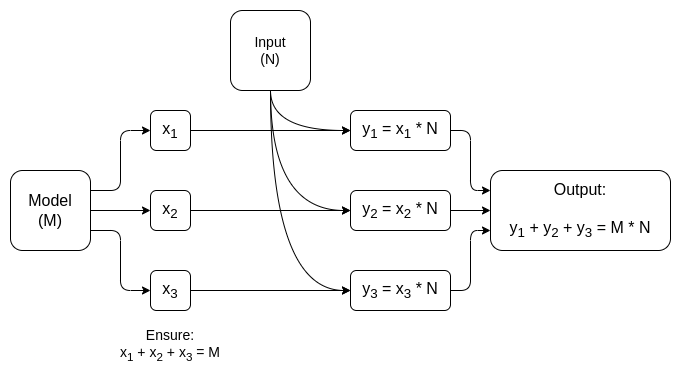

But for many applications, we don’t just want to prove that an AI output was computed correctly, we also want to hide the model. There are naive approaches to this: you can split up the model so that a different set of servers redundantly store each layer, and hope that some of the servers leaking some of the layers doesn’t leak too much data. But there are also surprisingly effective forms of specialized multi-party computation.

In both cases, the moral of the story is the same: the greatest part of an AI computation is matrix multiplications, for which it is possible to make very efficient ZK-SNARKs or MPCs (or even FHE), and so the total overhead of putting AI inside cryptographic boxes is surprisingly low. Generally, it’s the non-linear layers that are the greatest bottleneck despite their smaller size; perhaps newer techniques like lookup arguments can help.
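A minimal illustration of why linear layers are cheap under MPC is two-party additive secret sharing. It assumes two servers that do not collude, a public input and a secret weight matrix; the modulus `P`, the toy integer weights and all function names are assumptions for the example. Python’s `random` module is used only to keep the demo reproducible; a real system would need a cryptographically secure source of randomness.

```python
import random

P = 2**61 - 1   # assumed prime modulus for the shares


def share_matrix(w, rng):
    """Split W into two random-looking shares with W = W0 + W1 (mod P)."""
    w0 = [[rng.randrange(P) for _ in row] for row in w]
    w1 = [[(v - r) % P for v, r in zip(row, r_row)] for row, r_row in zip(w, w0)]
    return w0, w1


def local_matvec(w_share, x):
    """Each server multiplies its own share by the public input, with no interaction."""
    return [sum(wij * xj for wij, xj in zip(row, x)) % P for row in w_share]


def reconstruct(y0, y1):
    """Adding the two partial results recovers W·x (mod P)."""
    return [(a + b) % P for a, b in zip(y0, y1)]


rng = random.Random(1)          # demo only: not a secure randomness source
W = [[3, 1, 4], [1, 5, 9]]      # secret model layer (toy integers)
x = [2, 7, 1]                   # public input
W0, W1 = share_matrix(W, rng)
y = reconstruct(local_matvec(W0, x), local_matvec(W1, x))
```

Neither server ever sees `W`, yet the matrix-vector product costs each of them exactly one ordinary multiplication pass. A ReLU on the shared result, by contrast, would need an interactive protocol, which is not shown here.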

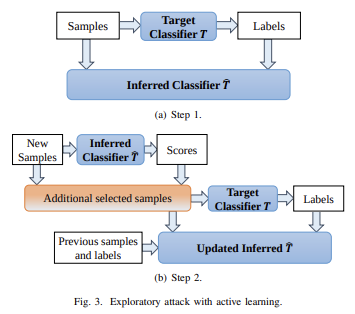

Black-box adversarial machine learning

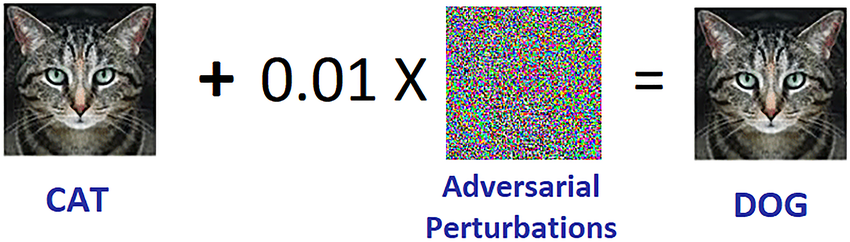

Now, let us get to the other big problem: the kinds of attacks that you can do even if the contents of the model are kept private and you only have “API access” to the model. Quoting a paper from 2016:

Many machine learning models are vulnerable to adversarial examples: inputs that are specially crafted to cause a machine learning model to produce an incorrect output. Adversarial examples that affect one model often affect another model, even if the two models have different architectures or were trained on different training sets, so long as both models were trained to perform the same task. An attacker may therefore train their own substitute model, craft adversarial examples against the substitute, and transfer them to a victim model, with very little information about the victim.

Potentially, you can even create attacks knowing just the training data, even if you have very limited or no access to the model that you are trying to attack. As of 2023, these kinds of attacks continue to be a large problem.

To effectively curtail these kinds of black-box attacks, we need to do two things:

- Really limit who or what can query the model and how much. Black boxes with unrestricted API access are not secure; black boxes with very restricted API access may be.

- Hide the training data, while preserving confidence that the process used to create the training data is not corrupted.
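The first point, limiting queries, can be illustrated with a toy label-only attack. The hidden “model” below is just a secret threshold on one number, and the attacker binary-searches for it using yes/no answers; the class, the budget values and the threshold are all hypothetical.

```python
class QueryBudgetExceeded(Exception):
    pass


class RateLimitedModel:
    """Hidden model that only reveals a yes/no label and counts queries."""

    def __init__(self, threshold, budget):
        self._threshold = threshold     # secret decision boundary
        self._budget = budget
        self.queries = 0

    def flagged(self, x):
        if self.queries >= self._budget:
            raise QueryBudgetExceeded
        self.queries += 1
        return x >= self._threshold


def probe_boundary(model, lo, hi, tol):
    """Binary-search the boundary using label-only queries.

    Assumes `lo` would not be flagged and `hi` would be.
    """
    while hi - lo > tol:
        mid = (lo + hi) / 2
        if model.flagged(mid):
            hi = mid
        else:
            lo = mid
    return lo   # largest input found that still slips past the model


open_api = RateLimitedModel(threshold=0.6180339, budget=1000)
best = probe_boundary(open_api, 0.0, 1.0, tol=1e-6)

strict_api = RateLimitedModel(threshold=0.6180339, budget=5)
try:
    probe_boundary(strict_api, 0.0, 1.0, tol=1e-6)
    blocked = False
except QueryBudgetExceeded:
    blocked = True
```

With a generous budget, a couple of dozen queries are enough to pin down the secret boundary to six decimal places; with a budget of five, the same attack runs out of queries long before it gets there.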

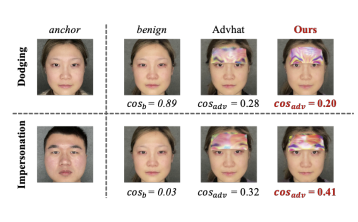

The project that has done the most on the former is perhaps Worldcoin, of which I analyze an earlier version (among other protocols) at length here. Worldcoin uses AI models extensively at protocol level, to (i) convert iris scans into short “iris codes” that are easy to compare for similarity, and (ii) verify that the thing it’s scanning is actually a human being. The main defense that Worldcoin is relying on is the fact that it’s not letting anyone simply call into the AI model: rather, it’s using trusted hardware to ensure that the model only accepts inputs digitally signed by the orb’s camera.
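The general pattern of “the model only accepts authenticated inputs” can be sketched as follows. This is not Worldcoin’s actual design: it uses an HMAC from Python’s standard library as a stand-in for a digital signature, and the key, function names and placeholder model are hypothetical. A real system would more likely use an asymmetric signature, so that the verifier never holds the signing key.

```python
import hashlib
import hmac

# Stand-in for a key sealed inside the camera's trusted hardware (hypothetical).
DEVICE_KEY = b"example-device-key"


def camera_capture(image: bytes, key: bytes = DEVICE_KEY):
    """The camera tags every frame it produces."""
    tag = hmac.new(key, image, hashlib.sha256).digest()
    return image, tag


def guarded_model(image: bytes, tag: bytes, key: bytes = DEVICE_KEY):
    """Run the model only on frames carrying a valid tag from the camera."""
    expected = hmac.new(key, image, hashlib.sha256).digest()
    if not hmac.compare_digest(tag, expected):
        raise PermissionError("input was not produced by the trusted camera")
    return len(image) % 2 == 0      # placeholder for the real model


frame, tag = camera_capture(b"raw-sensor-bytes")
accepted = guarded_model(frame, tag)

try:
    guarded_model(b"attacker-crafted-input", tag)
    rejected = False
except PermissionError:
    rejected = True
```

An attacker who crafts an input offline cannot even submit it, because it carries no valid tag; the only route left is to put something physical in front of the camera.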

This approach is not guaranteed to work: it turns out that you can make adversarial attacks against biometric AI that come in the form of physical patches or jewelry that you can put on your face:

But the hope is that if you combine all the defenses together, hiding the AI model itself, greatly limiting the number of queries, and requiring each query to somehow be authenticated, you can make adversarial attacks difficult enough that the system could be secure.

And this gets us to the second part: how can we hide the training data? This is where “DAOs to democratically govern AI” might actually make sense: we can create an on-chain DAO that governs the process of who is allowed to submit training data (and what attestations are required on the data itself), who is allowed to make queries, and how many, and use cryptographic techniques like MPC to encrypt the entire pipeline of creating and running the AI from each individual user’s training input all the way to the final output of each query. This DAO could simultaneously satisfy the highly popular objective of compensating people for submitting data.

There are a number of ways in which this plan could prove impractical:

- Cryptographic overhead could still turn out too high for this kind of fully-black-box architecture to be competitive with traditional closed “trust me” approaches.

- It could turn out that there isn’t a good way to make the training data submission process decentralized and protected against poisoning attacks.

- Multi-party computation gadgets could break their safety or privacy guarantees due to participants colluding: after all, this has happened with cross-chain cryptocurrency bridges again and again.

One reason why I didn’t start this section with more big red warning labels saying “DON’T DO AI JUDGES, THAT’S DYSTOPIAN”, is that our society is highly dependent on unaccountable centralized AI judges already: the algorithms that determine which kinds of posts and political opinions get boosted and deboosted, or even censored, on social media. I do think that expanding this trend further at this stage is quite a bad idea, but I don’t think there is a large chance that the blockchain community experimenting with AIs more will be the thing that contributes to making it worse.

In fact, there are some pretty basic low-risk ways that crypto technology can make even these existing centralized systems better that I am pretty confident in. One simple technique is verified AI with delayed publication: when a social media site makes an AI-based ranking of posts, it could publish a ZK-SNARK proving the hash of the model that generated that ranking. The site could commit to revealing its AI models after eg. a one year delay. Once a model is revealed, users could check the hash to verify that the correct model was released, and the community could run tests on the model to verify its fairness. The publication delay would ensure that by the time the model is revealed, it’s already outdated.
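The commit-and-reveal half of this scheme is simple enough to sketch with a plain hash. The example below covers only the hash check at reveal time; the ZK-SNARK that ties each ranking to the committed model is not shown, and the byte strings standing in for serialized model weights are placeholders.

```python
import hashlib


def commit(model_bytes: bytes) -> str:
    """Hash published today alongside each ranking."""
    return hashlib.sha256(model_bytes).hexdigest()


def verify_reveal(published_hash: str, revealed_model: bytes) -> bool:
    """Run by anyone once the model is released after the delay."""
    return commit(revealed_model) == published_hash


model_v1 = b"weights-of-ranking-model-v1"    # placeholder for serialized weights
published = commit(model_v1)                 # made public immediately

honest_reveal = verify_reveal(published, model_v1)
swapped_reveal = verify_reveal(published, b"a-nicer-looking-model")
```

If the site later tries to release a different, better-behaved model than the one it actually ran, the hash check fails and everyone can see it.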

So compared to the centralized world, the question is not if we can do better, but by how much. For the decentralized world, however, it is important to be careful: if someone builds eg. a prediction market or a stablecoin that uses an AI oracle, and it turns out that the oracle is attackable, that’s a huge amount of money that could disappear in an instant.

AI as the objective of the game

If the above techniques for creating a scalable decentralized private AI, whose contents are a black box not known by anyone, can actually work, then this could also be used to create AIs with utility going beyond blockchains. The NEAR protocol team is making this a core objective of their ongoing work.

There are two reasons to do this:

- If you can make “trustworthy black-box AIs” by running the training and inference process using some combination of blockchains and MPC, then lots of applications where users are worried about the system being biased or cheating them could benefit from it. Many people have expressed a desire for democratic governance of systemically-important AIs that we will depend on; cryptographic and blockchain-based techniques could be a path toward doing that.

- From an AI safety perspective, this would be a technique to create a decentralized AI that also has a natural kill switch, and which could limit queries that seek to use the AI for malicious behavior.

It is also worth noting that “using crypto incentives to incentivize making better AI” can be done without also going down the full rabbit hole of using cryptography to completely encrypt it: approaches like BitTensor fall into this category.

Conclusions

Now that both blockchains and AIs are becoming more powerful, there is a growing number of use cases in the intersection of the two areas. However, some of these use cases make much more sense and are much more robust than others. In general, use cases where the underlying mechanism continues to be designed roughly as before, but the individual players become AIs, allowing the mechanism to effectively operate at a much more micro scale, are the most immediately promising and the easiest to get right.

The most challenging to get right are applications that attempt to use blockchains and cryptographic techniques to create a “singleton”: a single decentralized trusted AI that some application would rely on for some purpose. These applications have promise, both for functionality and for improving AI safety in a way that avoids the centralization risks associated with more mainstream approaches to that problem. But there are also many ways in which the underlying assumptions could fail; hence, it is worth treading carefully, especially when deploying these applications in high-value and high-risk contexts.

I look forward to seeing more attempts at constructive use cases of AI in all of these areas, so we can see which of them are truly viable at scale.

- Source: Plato Data Intelligence.