Image by Freepik

Conversational AI refers to virtual agents and chatbots that mimic human interactions and can engage humans in conversation. Using conversational AI is fast becoming a way of life: from asking Alexa to “find the nearest restaurant” to asking Siri to “create a reminder,” virtual assistants and chatbots are often used to answer consumers’ questions, resolve complaints, make reservations, and much more.

Developing these virtual assistants requires substantial effort. However, understanding and addressing the key challenges can streamline the development process. I have used my first-hand experience of building a mature chatbot for a recruitment platform as a reference point to explain the key challenges and their corresponding solutions.

To build a conversational AI chatbot, developers can use frameworks like RASA, Amazon Lex, or Google’s Dialogflow. Most prefer RASA when they plan custom changes or when the bot is in a mature stage, as it is an open-source framework. The other frameworks are also suitable as a starting point.

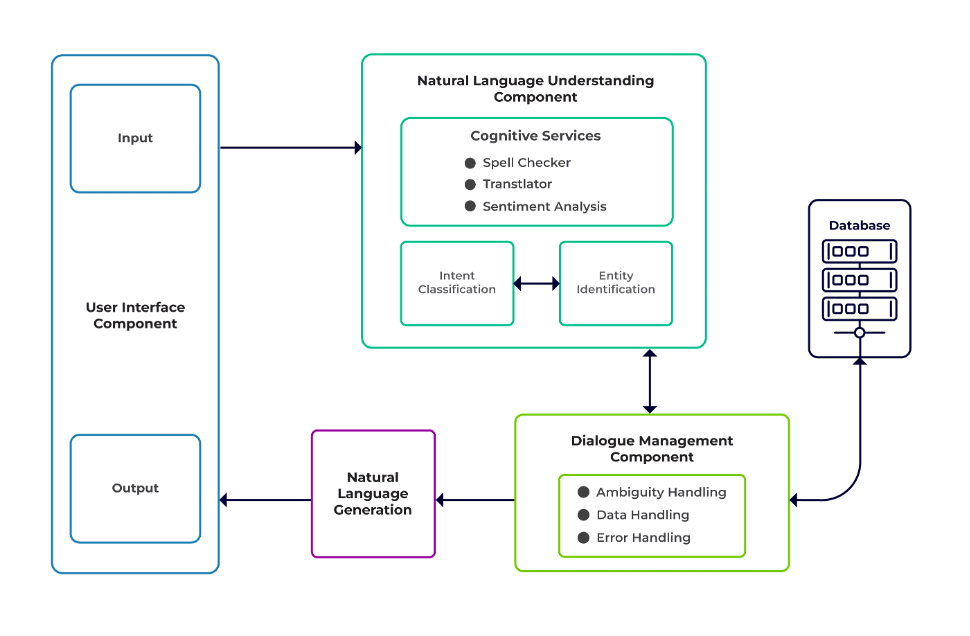

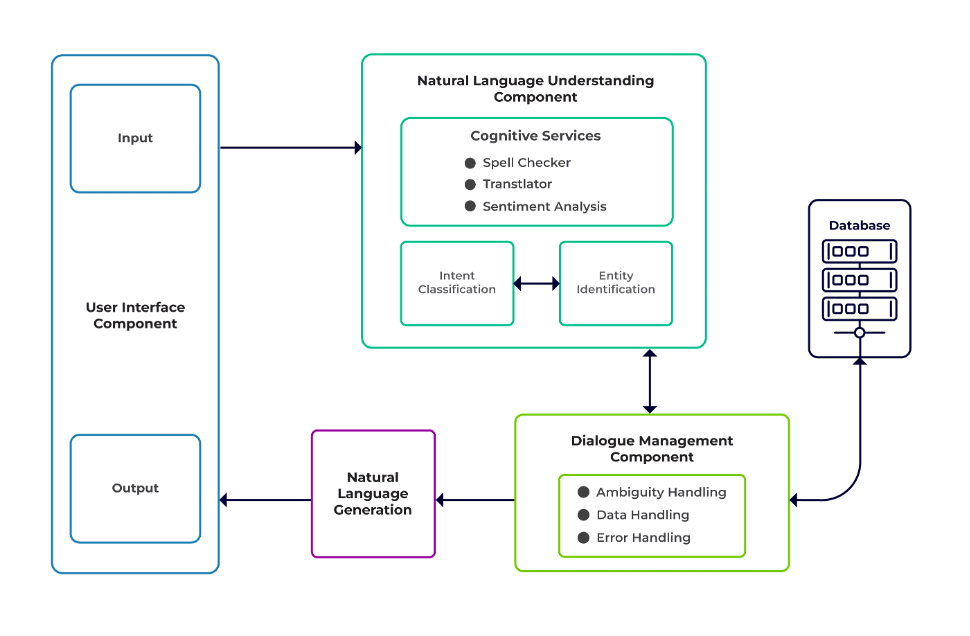

The challenges can be classified according to the three major components of a chatbot.

Natural Language Understanding (NLU) is a bot’s ability to understand human dialogue. It performs intent classification, entity extraction, and response retrieval.

The Dialogue Manager is responsible for deciding which actions to perform based on the current and previous user inputs. It takes intents and entities as input (as part of the conversation so far) and identifies the next response.

Natural Language Generation (NLG) is the process of generating written or spoken sentences from given data. It frames the response, which is then presented to the user.

Image from Talentica Software
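To make the division of labor concrete, here is a toy Python pipeline with one function per component. It is a minimal sketch under stated assumptions: the keyword rules in `understand` stand in for a trained NLU model, and every function, intent, and template name is hypothetical, not part of the RASA, Lex, or Dialogflow APIs.

```python
from dataclasses import dataclass, field


@dataclass
class NLUResult:
    intent: str
    confidence: float
    entities: dict = field(default_factory=dict)


def understand(text):
    """NLU stand-in (hypothetical): keyword rules instead of a trained model."""
    lowered = text.lower()
    words = lowered.split()
    if words and words[0].strip("!,.") in {"hi", "hello"}:
        return NLUResult("greet", 0.95)
    marker = "students from"
    if marker in lowered:
        # Everything after the marker is treated as the place entity.
        place = text[lowered.index(marker) + len(marker):].strip(" ?.")
        return NLUResult("search_students", 0.90, {"place": place})
    return NLUResult("unknown", 0.30)


def next_action(result, history):
    """Dialogue manager: record the turn, then map the current intent to an action.

    A real manager would also condition on the stored history.
    """
    history.append(result)
    if result.intent == "greet":
        return "utter_greet", {}
    if result.intent == "search_students":
        return "utter_students", result.entities
    return "utter_fallback", {}


TEMPLATES = {
    "utter_greet": "Good morning! How can I help you?",
    "utter_students": "Here are the students from {place}.",
    "utter_fallback": "Sorry, I didn't quite get that. Could you rephrase?",
}


def generate(action, slots):
    """NLG: turn the chosen action and its data into a user-facing sentence."""
    return TEMPLATES[action].format(**slots)


def respond(text, history):
    result = understand(text)
    action, slots = next_action(result, history)
    return generate(action, slots)
```

For example, `respond("Name of students from IIT Delhi", [])` flows through all three stages and returns `"Here are the students from IIT Delhi."`. Each challenge below maps to one of these stages.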

Insufficient data

When developers replace FAQs or other support systems with a chatbot, they already have a decent amount of training data. That is not the case when they build the bot from scratch. In such cases, developers generate training data synthetically.

What to do?

A template-based data generator can produce a decent number of user queries for training. Once the chatbot is ready, project owners can expose it to a limited number of users to collect real queries, enrich the training data, and improve the bot over time.
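A template-based generator can be sketched in a few lines of Python: each intent has sentence templates with named slots, and every combination of slot values is filled in. The templates, slot names, and values below are hypothetical examples for a recruitment bot; because each filled slot is recorded alongside the text, the same pass also yields entity annotations.

```python
import itertools
import random

# Hypothetical templates and slot values for a recruitment chatbot.
TEMPLATES = {
    "search_candidates": [
        "show me {role} candidates in {city}",
        "find {role} profiles from {city}",
        "any {role} available in {city}?",
    ],
}
SLOT_VALUES = {
    "role": ["java developer", "data scientist"],
    "city": ["Bengaluru", "Pune"],
}


def generate_examples(templates, slot_values, limit=None, seed=0):
    """Fill every template with every combination of its slot values."""
    rows = []
    for intent, patterns in templates.items():
        for pattern in patterns:
            # Only the slots that actually appear in this template.
            slots = [name for name in slot_values if "{" + name + "}" in pattern]
            for combo in itertools.product(*(slot_values[s] for s in slots)):
                filled = dict(zip(slots, combo))
                rows.append({
                    "text": pattern.format(**filled),
                    "intent": intent,
                    "entities": filled,  # slot values double as entity labels
                })
    random.Random(seed).shuffle(rows)  # deterministic shuffle for reproducibility
    return rows if limit is None else rows[:limit]
```

Three templates with two roles and two cities give 12 labeled queries. Real user queries collected later should gradually replace these, since templated text is far more regular than what people actually type.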

Inappropriate model selection

Appropriate model selection and training data are crucial for getting the best intent classification and entity extraction results. Developers usually train chatbots in a specific language and domain, and most of the available pre-trained models are domain-specific and trained in a single language.

There can also be cases of mixed languages, where users are polyglots and enter queries that blend languages. For instance, in a French-dominated region, people may use a kind of English that mixes French and English.

What to do?

Using models trained on multiple languages can reduce the problem. A pre-trained model like LaBSE (Language-agnostic BERT Sentence Embedding) can help in such cases. LaBSE is trained on 109 languages on a sentence similarity task, so it already places similar words and sentences from different languages close together. In our project, it worked very well.
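One simple way to use a multilingual sentence encoder for intent classification is nearest-example matching: embed a few labeled examples per intent, then assign a new query the intent of its most similar example by cosine similarity. This is a sketch, not necessarily how LaBSE was wired into the project described here. The `embed` callable is an assumption; with the `sentence-transformers` package it could be the `encode` method of the `sentence-transformers/LaBSE` model, as shown in the comments.

```python
import math
from typing import Callable, Dict, List, Sequence, Tuple

Embed = Callable[[str], Sequence[float]]

# With sentence-transformers installed, a LaBSE-backed embed could be:
#   from sentence_transformers import SentenceTransformer
#   embed = SentenceTransformer("sentence-transformers/LaBSE").encode


def cosine(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine similarity; returns 0.0 if either vector is all zeros."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return dot / norm if norm else 0.0


def build_index(examples: Dict[str, List[str]], embed: Embed):
    """Embed every labeled example once, keeping its intent label."""
    return [(intent, embed(text)) for intent, texts in examples.items() for text in texts]


def classify(query: str, index, embed: Embed) -> Tuple[str, float]:
    """Return the intent of the most similar example and its similarity score."""
    query_vec = embed(query)
    best_intent, best_score = None, -1.0
    for intent, vec in index:
        score = cosine(query_vec, vec)
        if score > best_score:
            best_intent, best_score = intent, score
    return best_intent, best_score
```

Because the encoder maps “show open jobs” and a French paraphrase to nearby vectors, English-only examples can still match mixed or French queries. The returned score can also feed the fallback threshold discussed later.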

Improper entity extraction

Chatbots rely on entities to identify what kind of data the user is searching for. These entities include time, place, person, item, date, etc. However, bots can fail to identify an entity in natural language:

Same context but different entities. For instance, a bot can confuse an institute with a place when a user types “Name of students from IIT Delhi” and then “Name of students from Bengaluru,” because both sentences look identical apart from the entity.

Entities mispredicted with low confidence. For example, a bot can identify IIT Delhi as a city with low confidence.

Partial entity extraction by the machine learning model. If a user types “students from IIT Delhi,” the model may identify only “IIT” as an entity instead of “IIT Delhi.”

Single-word inputs with no context can confuse machine learning models. For example, a word like “Rishikesh” can be both a person’s name and a city.

What to do?

Adding more training examples could be a solution, but beyond a certain point more examples stop helping, and it is an endless process. Another solution is to define regex patterns from predefined lists of words to extract entities with a known set of possible values, like city, country, etc.
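A lookup-driven regex extractor might look like the following sketch. The lookup lists are hypothetical stand-ins for a real list of cities and institutes. Sorting values longest-first and dropping matches nested inside a longer match is what keeps “IIT Delhi” from being cut down to a partial entity.

```python
import re

# Hypothetical lookup lists; in practice these come from a curated dataset.
LOOKUPS = {
    "city": ["Delhi", "Bengaluru", "Rishikesh", "Mumbai"],
    "institute": ["IIT Delhi", "IIT Bombay", "NIT Agartala"],
}


def compile_lookups(lookups):
    """Build one case-insensitive, word-bounded regex per entity type."""
    patterns = {}
    for entity, values in lookups.items():
        # Longest values first so the longest alternative is tried first.
        alternation = "|".join(re.escape(v) for v in sorted(values, key=len, reverse=True))
        patterns[entity] = re.compile(r"\b(?:" + alternation + r")\b", re.IGNORECASE)
    return patterns


def extract(text, patterns):
    """Return non-overlapping entity matches, preferring the longest span."""
    found = []
    for entity, pattern in patterns.items():
        for m in pattern.finditer(text):
            found.append({"entity": entity, "value": m.group(0),
                          "start": m.start(), "end": m.end()})
    # Sort by start, longest first, then drop matches inside an accepted span
    # (e.g. the city "Delhi" inside the institute "IIT Delhi").
    found.sort(key=lambda e: (e["start"], -(e["end"] - e["start"])))
    result, last_end = [], -1
    for e in found:
        if e["start"] >= last_end:
            result.append(e)
            last_end = e["end"]
    return result
```

Lookup patterns only help for closed sets of values. An ambiguous single word like “Rishikesh” still needs context or the low-confidence correction described next.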

Models report lower confidence whenever they are not sure about an entity prediction. Developers can use this as a trigger to call a custom component that corrects the low-confidence entity. Consider the example above. If IIT Delhi is predicted as a city with low confidence, the bot can look it up in the database. After failing to find the predicted entity in the City table, the component moves on to other tables and eventually finds it in the Institute table, which corrects the entity type.
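The correction step can be sketched as a function that runs only when confidence falls below a threshold. It checks the predicted table first and then the others. The threshold value and the in-memory `TABLES` dictionary are hypothetical; in a real bot each membership check would be a database query.

```python
CONFIDENCE_THRESHOLD = 0.6  # hypothetical; tune on validation data

# Stand-in for database tables of known values (lowercased).
TABLES = {
    "city": {"delhi", "bengaluru", "rishikesh"},
    "institute": {"iit delhi", "iit bombay", "nit agartala"},
    "person": {"rishikesh"},
}


def correct_entity(entity, tables=TABLES, threshold=CONFIDENCE_THRESHOLD):
    """Re-type a low-confidence entity by looking its value up in known tables."""
    if entity["confidence"] >= threshold:
        return entity  # confident prediction: leave untouched
    value = entity["value"].lower()
    # Try the predicted type first, then every other table.
    order = [entity["entity"]] + [t for t in tables if t != entity["entity"]]
    for table in order:
        if value in tables.get(table, ()):
            return {**entity, "entity": table, "verified": True}
    # Not found anywhere: keep the prediction but flag it for a clarifying question.
    return {**entity, "verified": False}
```

With this, `{"entity": "city", "value": "IIT Delhi", "confidence": 0.42}` comes back typed as `institute`. Checking the predicted table first means a correct but low-confidence guess is confirmed rather than overwritten.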

Incorrect intent classification

Every user message has an intent associated with it. Since intents drive the bot’s next course of action, correctly classifying user queries by intent is crucial. Developers must therefore define intents with minimal overlap between them; otherwise, the bot will confuse similar queries. For example, “Show me open positions” vs. “Show me open position candidates”.

What to do?

There are two ways to differentiate confusing queries. First, a developer can introduce sub-intents. Second, the bot can route queries based on the entities identified.
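Both ideas can be combined in a small routing table: the classifier predicts one coarse intent for similar-looking queries, and the extracted entities pick the sub-intent. The intent, entity, and sub-intent names here are hypothetical, and the `intent/sub_intent` naming is only a convention chosen for this sketch.

```python
# coarse intent -> ordered (required entity, sub-intent) rules; None means "default"
SUB_INTENT_RULES = {
    "show_positions": [
        ("candidate_filter", "list_candidates"),  # "open position candidates"
        (None, "list_openings"),                  # plain "open positions"
    ],
}


def resolve(intent, entities):
    """Refine a coarse intent into a sub-intent based on which entities are present."""
    for required, sub_intent in SUB_INTENT_RULES.get(intent, []):
        if required is None or required in entities:
            return f"{intent}/{sub_intent}"
    return intent  # intents without rules pass through unchanged
```

The classifier then only has to learn one intent for both phrasings, and the decision between them rests on an entity, which is usually easier to extract reliably than two near-identical intents are to separate.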

A domain-specific chatbot should be a closed system that clearly knows what it can and cannot do. Developers should build domain-specific chatbots in phases. In each phase, they can identify the chatbot’s unsupported features (via an “unsupported” intent).

They can also capture what the chatbot cannot handle via an “out of scope” intent. However, the bot may still confuse the unsupported and out-of-scope intents. For such scenarios, a fallback mechanism should be in place: if the intent confidence is below a threshold, the bot gracefully switches to a fallback intent to handle the confusion.
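A threshold-based fallback can be sketched as below. It takes the classifier’s ranked intents and returns `fallback` when the top score is too low or when the top two intents are too close to call. The second check targets exactly the unsupported vs. out-of-scope confusion. Both numbers are hypothetical. Rasa ships a comparable built-in component, `FallbackClassifier`, which is configured in the NLU pipeline rather than written by hand.

```python
FALLBACK_THRESHOLD = 0.5  # hypothetical minimum confidence for the top intent
AMBIGUITY_MARGIN = 0.1    # hypothetical minimum gap between the top two intents


def choose_intent(ranking, threshold=FALLBACK_THRESHOLD, margin=AMBIGUITY_MARGIN):
    """Pick the top intent, or "fallback" if it is weak or ambiguous.

    ranking: list of (intent, confidence) pairs in any order.
    """
    ranked = sorted(ranking, key=lambda pair: pair[1], reverse=True)
    if not ranked or ranked[0][1] < threshold:
        return "fallback"  # nothing is confident enough
    if len(ranked) > 1 and ranked[0][1] - ranked[1][1] < margin:
        return "fallback"  # two intents are nearly tied
    return ranked[0][0]
```

The fallback intent can then trigger a polite clarifying question instead of a wrong answer.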

Once the bot identifies the intent of a user’s message, it must send a response. The bot decides the response based on a defined set of rules and stories. For example, a rule can be as simple as uttering “good morning” when the user greets with “Hi”. Most often, however, conversations with chatbots involve follow-up interactions, and the responses depend on the overall context of the conversation.
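The difference between a fixed rule and a context-dependent response can be sketched as follows. The greeting ignores history entirely. The search response merges new entities into a `context` dictionary, so a follow-up like “What about Pune?” reuses the role from the previous turn. All intent and slot names are hypothetical.

```python
# Rules: fixed responses that never depend on conversation history.
RULES = {"greet": "Good morning! How can I help you today?"}


def respond(intent, entities, context):
    """Answer one turn; `context` persists across turns of the same conversation."""
    if intent in RULES:
        return RULES[intent]
    if intent in ("search_candidates", "change_filter"):
        # Merge this turn's entities into what the conversation already knows.
        context.update(entities)
        role, city = context.get("role"), context.get("city")
        if role is None:
            return "Which role are you hiring for?"
        if city is None:
            return f"Which city should I search for {role} candidates in?"
        return f"Searching {role} candidates in {city}."
    return "Sorry, I'm not sure I followed. Could you rephrase?"
```

Called with `change_filter` and only `{"city": "Pune"}` after an earlier search for Java developers in Bengaluru, it answers “Searching Java developer candidates in Pune.” The same message with an empty context would instead prompt for the missing role.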

What to do?

To handle this, chatbots are trained on real conversation examples called stories. However, users do not always interact as intended, and a mature chatbot should handle such deviations gracefully. Designers and developers can ensure this by writing stories for unhappy paths as well, instead of focusing only on the happy path.
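Story formats are framework-specific, but the happy/unhappy distinction can be illustrated with a small slot-filling flow. Here a user who digresses mid-task gets an answer and is re-asked the pending question, and a user who says “stop” cancels cleanly. The interview-scheduling form, its slots, and the intents are hypothetical.

```python
FORM_SLOTS = ["candidate", "date"]
QUESTIONS = {
    "candidate": "Which candidate should I schedule?",
    "date": "On which date?",
}


def pending_slot(state):
    """First required slot that is still empty, or None when the form is complete."""
    return next((s for s in FORM_SLOTS if s not in state), None)


def form_step(intent, entities, state):
    """Advance a hypothetical interview-scheduling form by one user turn."""
    if intent == "stop":
        # Unhappy path: the user abandons the task.
        state.clear()
        return "No problem, I've cancelled the scheduling."
    prefix = ""
    if intent == "ask_help":
        # Unhappy path: answer the digression, then fall through and re-ask.
        prefix = "I can book interviews for shortlisted candidates. "
    else:
        for slot in FORM_SLOTS:
            if slot in entities:
                state[slot] = entities[slot]
    pending = pending_slot(state)
    if pending:
        return prefix + QUESTIONS[pending]
    return prefix + f"Interview booked for {state['candidate']} on {state['date']}."
```

Writing one test conversation per path (schedule, inform, inform; and schedule, ask_help, stop) is the code-level analogue of writing both happy and unhappy stories.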

User engagement with chatbots depends heavily on the chatbot’s responses. Users might lose interest if the responses are too robotic or too familiar. For instance, a user may not like an answer like “You have typed a wrong query” for a wrong input, even though the response is accurate. Such an answer does not match the persona of an assistant.

What to do?

The chatbot serves as an assistant and should have a specific persona and tone of voice. It should be welcoming and humble, and developers should design conversations and utterances accordingly. The responses should not sound robotic or mechanical. For instance, the bot could say, “Sorry, it seems I don’t have any details. Could you please re-type your query?” to address a wrong input.
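A common way to keep the tone consistent without sounding repetitive is to keep several persona-aligned variants per response and choose one at random. The response names and wording below are hypothetical examples written in the welcoming style described above.

```python
import random

# Hypothetical response variants, all written in the assistant's persona.
RESPONSES = {
    "utter_invalid_input": [
        "Sorry, it seems I don't have any details. Could you please re-type your query?",
        "Hmm, I couldn't find anything for that. Would you mind rephrasing it?",
    ],
    "utter_no_results": [
        "I couldn't find any {role} candidates yet. Want me to widen the search?",
    ],
}


def utter(name, rng=random, **slots):
    """Pick one variant of a response and fill in any slot values."""
    return rng.choice(RESPONSES[name]).format(**slots)
```

Passing a seeded `random.Random` as `rng` makes the choice reproducible in tests while keeping production replies varied.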

LLM (Large Language Model)-based chatbots like ChatGPT and Bard are game-changing innovations that have improved the capabilities of conversational AI. They are not only good at open-ended, human-like conversations but can also perform tasks like text summarization and paragraph writing, which earlier could only be done by task-specific models.

One of the challenges with traditional chatbot systems is that every sentence must be categorized into an intent before a response can be chosen. This approach does not scale well, and responses like “Sorry, I couldn’t get you” are often frustrating. Intentless chatbot systems are the way forward, and LLMs can make them a reality.

LLMs can readily achieve state-of-the-art results in general named entity recognition, though not for certain domain-specific entities. A mixed approach that combines LLMs with a chatbot framework can lead to a more mature and robust chatbot system.
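One possible reading of that mixed approach, sketched below, splits entity extraction by type. Domain-specific entities (institutes, skills) come from the framework’s trained extractor, and general entities (person, date, location) come from an LLM. Where both report the same value, the domain result wins. Both extractors are placeholder callables; no specific LLM provider or framework API is assumed, and the entity types are hypothetical.

```python
from typing import Callable, Dict, List

Entity = Dict[str, str]
Extractor = Callable[[str], List[Entity]]

GENERAL_TYPES = ("person", "date", "location")  # hypothetical split of entity types


def merge_entities(text: str, framework_ner: Extractor, llm_ner: Extractor,
                   general_types=GENERAL_TYPES) -> List[Entity]:
    """Take domain entities from the framework and general ones from the LLM."""
    domain = [e for e in framework_ner(text) if e["entity"] not in general_types]
    general = [e for e in llm_ner(text) if e["entity"] in general_types]
    # If both sources claim the same span, trust the domain-trained extractor.
    seen = {e["value"].lower() for e in domain}
    return domain + [e for e in general if e["value"].lower() not in seen]
```

This keeps the framework responsible for what it was trained on, while the LLM covers general entities the bot was never explicitly trained to recognize.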

With the latest developments and continuous research in conversational AI, chatbots are getting better every day. Areas like handling complex requests with multiple intents, such as “Book a flight to Mumbai and arrange a cab to Dadar,” are getting a lot of attention.

Soon, conversations will be personalized based on the user’s characteristics to keep the user engaged. For example, if a bot detects that the user is unhappy, it can redirect the conversation to a human agent. Furthermore, with ever-increasing chatbot data, deep learning systems like ChatGPT can automatically generate responses to queries using a knowledge base.

Suman Saurav is a Data Scientist at Talentica Software, a software product development company. He is an alumnus of NIT Agartala with over 8 years of experience designing and implementing innovative AI solutions using NLP, Conversational AI, and Generative AI.