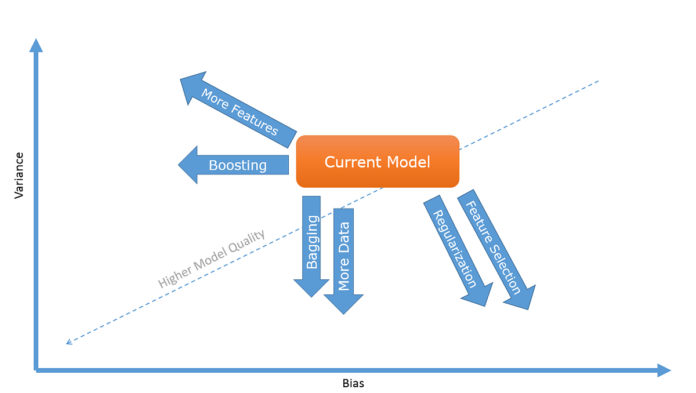

This is a figure I dug up from an old slide deck I prepared years ago for a workshop on predictive modeling. It illustrates what I consider the “war horse” of model tuning (’cause, you know, it kind of looks like a horse, with an extra spear). It is also a kind of map for navigating the bias-variance space.

Bias and variance are the two components of imprecision in predictive models, and in general there is a trade-off between them, so reducing one usually tends to increase the other. Bias in predictive models is a measure of model rigidity and inflexibility: a high-bias model is not capturing all the signal it could from the data. Bias is also known as under-fitting. Variance, on the other hand, is a measure of model inconsistency: trained on different samples of the same data, a high-variance model produces noticeably different predictions, and it tends to perform very well on some data points and really badly on others. This is also known as over-fitting, and it means that your model is too flexible for the amount of training data you have. It ends up picking up noise in addition to the signal, learning random patterns that happen by chance and don’t generalize beyond your training data.
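For squared-error loss this split can be written out exactly. Assume the data are generated as $y = f(x) + \varepsilon$, where the noise $\varepsilon$ has zero mean and variance $\sigma^2$ and is independent of the training set. Taking expectations over both the noise and the random draw of the training set used to fit $\hat f$, the expected error at a point $x$ decomposes as:

$$
\mathbb{E}\big[(y - \hat f(x))^2\big]
= \underbrace{\big(\mathbb{E}[\hat f(x)] - f(x)\big)^2}_{\text{bias}^2}
+ \underbrace{\mathbb{E}\big[\big(\hat f(x) - \mathbb{E}[\hat f(x)]\big)^2\big]}_{\text{variance}}
+ \underbrace{\sigma^2}_{\text{irreducible noise}}
$$

The $\sigma^2$ term is noise that no amount of tuning can remove. Other losses (such as 0-1 loss for classification) do not decompose this cleanly, so treat this as the squared-error special case of the idea.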

The simplest way to determine whether your model is suffering more from bias or from variance is the following rule of thumb:

If your model is performing really well on the training set but much worse on the hold-out set, it’s suffering from high variance. On the other hand, if your model is performing poorly on both the training and test data sets, it’s suffering from high bias.
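The rule of thumb can be written down as a tiny function. This is only a sketch: `diagnose`, `acceptable_error` and `gap_tolerance` are hypothetical names, and what counts as “poor” or “much worse” is a problem-specific judgment, so both thresholds are assumptions you would have to set yourself.

```python
def diagnose(train_error: float, holdout_error: float,
             acceptable_error: float, gap_tolerance: float) -> str:
    """Rule-of-thumb bias/variance check from a training and a hold-out error.

    acceptable_error and gap_tolerance are hypothetical, problem-specific
    thresholds (e.g. error rates you are willing to live with).
    """
    if train_error > acceptable_error:
        # Poor even on the data it was fit to: the model is too rigid.
        return "high bias"
    if holdout_error - train_error > gap_tolerance:
        # Good on training data, much worse on unseen data: too flexible.
        return "high variance"
    return "ok"


print(diagnose(0.02, 0.25, acceptable_error=0.10, gap_tolerance=0.05))
```

Here the call prints `high variance`. A model can suffer from both problems at once (high training error *and* a large gap); this sketch simply checks bias first.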

Depending on the performance of your current model, and on whether it is suffering more from high bias or from high variance, you can resort to one or more of these seven techniques to bring your model where you want it to be:

- Add More Data! Of course! This is almost always a good idea if you can afford it. It drives variance down (without a trade-off in bias) and allows you to use more flexible models.

- Add More Features! This is almost always a good idea too. Again, if you can afford it. Adding new features increases model flexibility and decreases bias (at the expense of variance). The only time it’s not a good idea to add new features is when your data set is small in terms of data points and you can’t invest in adding more data.

- Do Feature Selection. Well, … only do it if you have a lot of features and not enough data points. Feature selection is almost the inverse of adding more features, and pulls your model in the opposite direction (decreasing variance at the expense of some bias), but the trade-off can be good if you do the feature selection methodically and only remove noisy and uninformative features. If you have enough data, most models can handle noisy and uninformative features automatically, and you don’t need to do explicit feature selection. In these days of “Big Data” the need for explicit feature selection rarely arises. It is also worth noting that proper feature selection is non-trivial and computationally intensive.

- Use Regularization. This is the neater version of explicit feature selection and amounts to implicit feature selection. The specifics are beyond the scope of this post, but regularization tells your algorithm to try to use as few features as possible (L1 penalties push weights to exactly zero), or not to trust any single feature too much (L2 penalties shrink all weights). Regularization relies on good implementations of training algorithms and is usually much preferred over explicit feature selection.

- Bagging, short for Bootstrap Aggregation, trains several versions of the same model on slightly different samples of the training data (bootstrap samples, drawn with replacement) and combines their predictions to reduce variance without any noticeable effect on bias. Bagging can be computationally intensive, especially in terms of memory.

- Boosting is a slightly more complicated concept and relies on training several models successively, each trying to learn from the errors of the models preceding it. Boosting decreases bias and hardly affects variance (unless you are very sloppy). Again, the price is computation time and memory size.

- Use a different class of models! Of course, you don’t have to do all of the above if there is another type of model that is better suited to your data set out of the box. Changing the model class (e.g. from a linear model to a neural network) moves you to a different point in the space above. Some algorithms are simply better suited to some data sets than others. Identifying the right type of model can be really tricky, though!
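To make the regularization idea concrete, here is a minimal sketch of L2 (ridge) regularization in the simplest possible setting: one feature, no intercept, closed-form solution. The function name `ridge_slope` and the penalty strength `lam` are illustrative assumptions, not a library API; in practice you would use a library implementation that handles many features, an intercept, and L1 or L2 penalties.

```python
from typing import Sequence


def ridge_slope(xs: Sequence[float], ys: Sequence[float], lam: float) -> float:
    """Ridge estimate of w in the one-feature, no-intercept model y ~ w * x.

    Minimizes sum((y - w*x)**2) + lam * w**2. Setting the derivative to
    zero gives w = sum(x*y) / (sum(x*x) + lam).
    """
    if lam < 0:
        raise ValueError("lam must be non-negative")
    sxy = sum(x * y for x, y in zip(xs, ys))
    sxx = sum(x * x for x in xs)
    if sxx + lam == 0:
        raise ValueError("slope is undefined when all x are 0 and lam is 0")
    return sxy / (sxx + lam)


xs, ys = [1.0, 2.0, 3.0], [2.0, 4.0, 6.0]
for lam in (0.0, 14.0, 42.0):
    # lam = 0 is ordinary least squares; larger lam pulls the slope toward 0,
    # i.e. the model trusts the feature less.
    print(lam, ridge_slope(xs, ys, lam))
```

On this toy data the slope goes from 2.0 (plain least squares) to 1.0 and then 0.5 as `lam` grows: the penalty trades a little bias for a less jumpy, lower-variance estimate.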
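The bagging recipe can be sketched in a few lines of plain Python. The base model is an assumption chosen for illustration: a 1-nearest-neighbour regressor, which is very high-variance and therefore benefits visibly from averaging. `nearest_neighbor_predict`, `bagged_predict`, `n_models` and `seed` are hypothetical names, not a library API. For regression the bagged prediction is an average; for classification you would take a majority vote instead.

```python
import random
from statistics import mean
from typing import List, Tuple

Point = Tuple[float, float]  # an (x, y) training example


def nearest_neighbor_predict(train: List[Point], x: float) -> float:
    """1-nearest-neighbour regressor: return the y of the closest training x."""
    _, y = min(train, key=lambda point: abs(point[0] - x))
    return y


def bagged_predict(train: List[Point], x: float,
                   n_models: int = 50, seed: int = 0) -> float:
    """Bootstrap aggregation: average n_models base models, each fit on a bootstrap sample."""
    rng = random.Random(seed)  # fixed seed so the result is reproducible
    predictions = []
    for _ in range(n_models):
        # Bootstrap sample: len(train) points drawn with replacement, so each
        # model sees a slightly different version of the training data.
        sample = rng.choices(train, k=len(train))
        predictions.append(nearest_neighbor_predict(sample, x))
    # Averaging smooths out the quirks of individual models (lower variance).
    return mean(predictions)


train = [(0.0, 0.1), (1.0, 0.9), (2.0, 2.2), (3.0, 2.8), (4.0, 4.1)]
print(nearest_neighbor_predict(train, 1.4))  # a single model copies one noisy y
print(bagged_predict(train, 1.4))            # a blend of several nearby ys
```

The cost mentioned above is visible here: every prediction consults `n_models` separately trained models, and a real implementation would keep all of them in memory.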
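Boosting comes in several flavours (AdaBoost, for example, re-weights the points earlier models got wrong). The sketch below assumes the least-squares gradient boosting variant on a single input: each round fits a one-split “stump” to the residuals left by the ensemble so far and adds a damped copy of it. `fit_stump`, `boost`, `n_rounds` and `learning_rate` are hypothetical names used for illustration only.

```python
from statistics import mean
from typing import Callable, List, Sequence, Tuple


def fit_stump(xs: Sequence[float], targets: Sequence[float]) -> Callable[[float], float]:
    """Least-squares regression stump: one threshold, a constant on each side."""
    best = None
    for threshold in sorted(set(xs)):
        left = [t for x, t in zip(xs, targets) if x <= threshold]
        right = [t for x, t in zip(xs, targets) if x > threshold]
        left_value = mean(left)  # never empty: threshold is one of the xs
        right_value = mean(right) if right else left_value
        error = (sum((t - left_value) ** 2 for t in left)
                 + sum((t - right_value) ** 2 for t in right))
        if best is None or error < best[0]:
            best = (error, threshold, left_value, right_value)
    _, threshold, left_value, right_value = best
    return lambda x: left_value if x <= threshold else right_value


def boost(xs: Sequence[float], ys: Sequence[float], n_rounds: int = 20,
          learning_rate: float = 0.5) -> Tuple[Callable[[float], float], List[float]]:
    """Least-squares gradient boosting with stumps; returns (predict, training-MSE history)."""
    base = mean(ys)  # start from a constant model
    stumps: List[Callable[[float], float]] = []

    def predict(x: float) -> float:
        return base + learning_rate * sum(stump(x) for stump in stumps)

    history = []
    for _ in range(n_rounds):
        # Each new stump learns from the errors (residuals) of the models so far.
        residuals = [y - predict(x) for x, y in zip(xs, ys)]
        stumps.append(fit_stump(xs, residuals))
        history.append(mean((y - predict(x)) ** 2 for x, y in zip(xs, ys)))
    return predict, history


xs = [1, 2, 3, 4, 5, 6, 7, 8]
ys = [1, 1, 1, 1, 5, 5, 5, 5]
predict, history = boost(xs, ys, n_rounds=5)
print(history)  # training MSE after each round
```

On this step-shaped data the training MSE goes 1.0, 0.25, 0.0625, and so on: each round removes part of the remaining error, which is the bias reduction described in the list. On noisy data, running many rounds with a large `learning_rate` is one way to start fitting the noise.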

It should be noted, though, that model accuracy (being as far to the bottom left as possible) is not the only objective. Some highly accurate models can be very hard to deploy in production environments and are usually black boxes that are very hard to interpret or debug, so many production systems opt for simpler, less accurate models that are less resource-intensive and easier to deploy and debug.