Creating scalable and efficient machine learning (ML) pipelines is crucial for streamlining the development, deployment, and management of ML models. In this post, we present a framework for automating the creation of a directed acyclic graph (DAG) for Amazon SageMaker Pipelines based on simple configuration files. The framework code and examples presented here only cover model training pipelines, but can be readily extended to batch inference pipelines as well.

This dynamic framework uses configuration files to orchestrate preprocessing, training, evaluation, and registration steps for both single-model and multi-model use cases based on user-defined Python scripts, infrastructure needs (including Amazon Virtual Private Cloud (Amazon VPC) subnets and security groups, AWS Identity and Access Management (IAM) roles, AWS Key Management Service (AWS KMS) keys, containers registry, and instance types), input and output Amazon Simple Storage Service (Amazon S3) paths, and resource tags. Configuration files (YAML and JSON) allow ML practitioners to specify undifferentiated code for orchestrating training pipelines using declarative syntax. This enables data scientists to quickly build and iterate on ML models, and empowers ML engineers to run through continuous integration and continuous delivery (CI/CD) ML pipelines faster, decreasing time to production for models.

Solution overview

The proposed framework code starts by reading the configuration files. It then dynamically creates a SageMaker Pipelines DAG based on the steps declared in the configuration files and the interactions and dependencies among steps. This orchestration framework caters to both single-model and multi-model use cases, and provides a smooth flow of data and processes. The following are the key benefits of this solution:

- Automation – The entire ML workflow, from data preprocessing to model registry, is orchestrated with no manual intervention. This reduces the time and effort required for model experimentation and operationalization.

- Reproducibility – With a predefined configuration file, data scientists and ML engineers can reproduce the entire workflow, achieving consistent results across multiple runs and environments.

- Scalability – Amazon SageMaker is used throughout the pipeline, enabling ML practitioners to process large datasets and train complex models without infrastructure concerns.

- Flexibility – The framework is flexible and can accommodate a wide range of ML use cases, ML frameworks (such as XGBoost and TensorFlow), multi-model training, and multi-step training. Every step of the training DAG can be customized via the configuration file.

- Model governance – The Amazon SageMaker Model Registry integration allows for tracking model versions, and therefore promoting them to production with confidence.

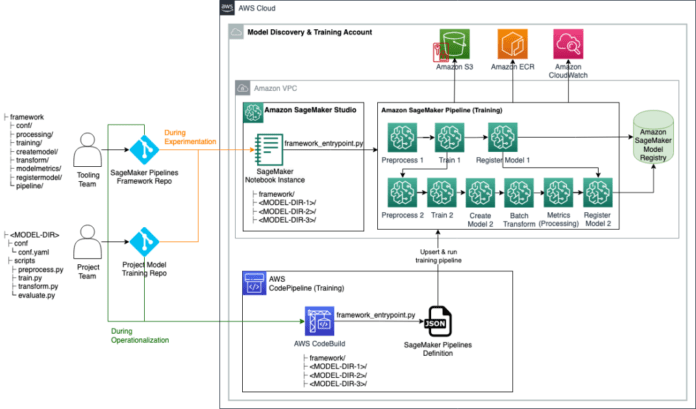

The following architecture diagram depicts how you can use the proposed framework during both experimentation and operationalization of ML models. During experimentation, you can clone the framework code repository provided in this post and your project-specific source code repositories into Amazon SageMaker Studio, and set your virtual environment (detailed later in this post). You can then iterate on preprocessing, training, and evaluation scripts, as well as configuration choices. To create and run a SageMaker Pipelines training DAG, you can call the framework's entry point, which will read all the configuration files, create the necessary steps, and orchestrate them based on the specified step ordering and dependencies.

During operationalization, the CI pipeline clones the framework code repository and project-specific training repositories into an AWS CodeBuild job, where the framework's entry point script is called to create or update the SageMaker Pipelines training DAG, and then run it.

Repository structure

The GitHub repository contains the following directories and files:

- /framework/conf/ – This directory contains a configuration file that is used to set common variables across all modeling units, such as subnets, security groups, and IAM role, at runtime. A modeling unit is a sequence of up to six steps for training an ML model.

- /framework/createmodel/ – This directory contains a Python script that creates a SageMaker model object based on model artifacts from a SageMaker Pipelines training step. The model object is later used in a SageMaker batch transform job for evaluating model performance on a test set.

- /framework/modelmetrics/ – This directory contains a Python script that creates an Amazon SageMaker Processing job for generating a model metrics JSON report for a trained model based on results of a SageMaker batch transform job performed on test data.

- /framework/pipeline/ – This directory contains Python scripts that use Python classes defined in other framework directories to create or update a SageMaker Pipelines DAG based on the specified configurations. The model_unit.py script is used by pipeline_service.py to create one or more modeling units. Each modeling unit is a sequence of up to six steps for training an ML model: process, train, create model, transform, metrics, and register model. Configurations for each modeling unit should be specified in the model's respective repository. The pipeline_service.py script also sets dependencies among SageMaker Pipelines steps (how steps within and across modeling units are sequenced or chained) based on the sagemakerPipeline section, which should be defined in the configuration file of one of the model repositories (the anchor model). This allows you to override default dependencies inferred by SageMaker Pipelines. We discuss the configuration file structure later in this post.

- /framework/processing/ – This directory contains a Python script that creates a SageMaker Processing job based on the specified Docker image and entry point script.

- /framework/registermodel/ – This directory contains a Python script for registering a trained model along with its calculated metrics in SageMaker Model Registry.

- /framework/training/ – This directory contains a Python script that creates a SageMaker training job.

- /framework/transform/ – This directory contains a Python script that creates a SageMaker batch transform job. In the context of model training, this is used to calculate the performance metric of a trained model on test data.

- /framework/utilities/ – This directory contains utility scripts for reading and joining configuration files, as well as logging.

- /framework_entrypoint.py – This file is the entry point of the framework code. It calls a function defined in the /framework/pipeline/ directory to create or update a SageMaker Pipelines DAG and run it.

- /examples/ – This directory contains several examples of how you can use this automation framework to create simple and complex training DAGs.

- /env.env – This file allows you to set common variables such as subnets, security groups, and IAM role as environment variables.

- /requirements.txt – This file specifies Python libraries that are required for the framework code.

Prerequisites

You should have the following prerequisites before deploying this solution:

- An AWS account

- SageMaker Studio

- A SageMaker role with Amazon S3 read/write and AWS KMS encrypt/decrypt permissions

- An S3 bucket for storing data, scripts, and model artifacts

- Optionally, the AWS Command Line Interface (AWS CLI)

- Python3 (Python 3.7 or greater) and the following Python packages:

- Additional Python packages used in your custom scripts

Deploy the solution

Complete the following steps to deploy the solution:

- Organize your model training repository according to the following structure:

- Clone the framework code and your model source code from the Git repositories:

  - Clone the dynamic-sagemaker-pipelines-framework repo into a training directory. In the following code, we assume the training directory is called aws-train:

  - Clone the model source code under the same directory. For multi-model training, repeat this step for as many models as you need to train.

For single-model training, your directory should look like the following:

For multi-model training, your directory should look like the following:

- Set up the following environment variables. Asterisks indicate environment variables that are required; the rest are optional.

| Environment Variable | Description |
| --- | --- |
| SMP_ACCOUNTID* | AWS account where the SageMaker pipeline is run |
| SMP_REGION* | AWS Region where the SageMaker pipeline is run |
| SMP_S3BUCKETNAME* | S3 bucket name |
| SMP_ROLE* | SageMaker role |
| SMP_MODEL_CONFIGPATH* | Relative path of the single-model or multi-model configuration files |
| SMP_SUBNETS | Subnet IDs for SageMaker networking configuration |
| SMP_SECURITYGROUPS | Security group IDs for SageMaker networking configuration |
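Because a missing required variable would otherwise surface only when the pipeline is created, it can help to check the variables up front. The following is a minimal sketch, not part of the framework: the variable names come from the table above, while the read_pipeline_env helper and its behavior are assumptions made for illustration.

```python
import os

# Variable names are taken from the table above; the helper itself is hypothetical.
REQUIRED_VARS = (
    "SMP_ACCOUNTID",
    "SMP_REGION",
    "SMP_S3BUCKETNAME",
    "SMP_ROLE",
    "SMP_MODEL_CONFIGPATH",
)
OPTIONAL_VARS = ("SMP_SUBNETS", "SMP_SECURITYGROUPS")


def read_pipeline_env(environ=None):
    """Return the SMP_* settings, raising if a required one is missing or empty."""
    environ = os.environ if environ is None else environ
    missing = [name for name in REQUIRED_VARS if not environ.get(name)]
    if missing:
        raise ValueError("Missing required environment variables: " + ", ".join(missing))
    settings = {name: environ[name] for name in REQUIRED_VARS}
    # Optional variables are included only when they are set to a non-empty value.
    for name in OPTIONAL_VARS:
        if environ.get(name):
            settings[name] = environ[name]
    return settings
```

Calling read_pipeline_env() with no arguments reads from the current process environment; passing a dictionary makes the check easy to exercise in a unit test.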

For single-model use cases, SMP_MODEL_CONFIGPATH will be <MODEL-DIR>/conf/conf.yaml. For multi-model use cases, SMP_MODEL_CONFIGPATH will be */conf/conf.yaml, which allows you to find all conf.yaml files using Python's glob module and combine them to form a global configuration file. During experimentation (local testing), you can specify environment variables inside the env.env file and then export them by running the following command in your terminal:

Note that the values of environment variables in env.env should be placed inside quotation marks (for example, SMP_REGION="us-east-1"). During operationalization, these environment variables should be set by the CI pipeline.
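To make the multi-model case concrete, the following sketch shows one way a pattern such as */conf/conf.yaml could be expanded with glob and the matching files combined into a single configuration tree. It is an illustration under stated assumptions, not the framework's own code: deep_merge and load_global_config are hypothetical helpers, and JSON is the default parser only so that the sketch needs nothing beyond the standard library.

```python
import glob
import json
import os


def deep_merge(base, extra):
    """Recursively merge `extra` into a copy of `base`; values in `extra` win on conflicts."""
    merged = dict(base)
    for key, value in extra.items():
        if isinstance(value, dict) and isinstance(merged.get(key), dict):
            merged[key] = deep_merge(merged[key], value)
        else:
            merged[key] = value
    return merged


def load_global_config(root_dir, pattern, parse=json.load):
    """Find every file matching `pattern` under `root_dir` and merge them into one dict."""
    # Sorting keeps the merge order stable across runs.
    paths = sorted(glob.glob(os.path.join(root_dir, pattern)))
    if not paths:
        raise FileNotFoundError(f"No configuration files match {pattern!r} in {root_dir!r}")
    config = {}
    for path in paths:
        with open(path) as handle:
            config = deep_merge(config, parse(handle))
    return config
```

For YAML files, and assuming PyYAML is installed, the same function can be called with parse=yaml.safe_load.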

- Create and activate a virtual environment by running the following commands:

- Install the required Python packages by running the following command:

- Edit your model training conf.yaml files. We discuss the configuration file structure in the next section.

- From the terminal, call the framework's entry point to create or update and run the SageMaker Pipeline training DAG:

- View and debug the SageMaker Pipelines run on the Pipelines tab of the SageMaker Studio UI.

Configuration file structure

There are two types of configuration files in the proposed solution: framework configuration and model configuration. In this section, we describe each in detail.

Framework configuration

The /framework/conf/conf.yaml file sets the variables that are common across all modeling units. This includes SMP_S3BUCKETNAME, SMP_ROLE, SMP_MODEL_CONFIGPATH, SMP_SUBNETS, SMP_SECURITYGROUPS, and SMP_MODELNAME. Refer to Step 3 of the deployment instructions for descriptions of these variables and how to set them via environment variables.

Model configuration

For each model in the project, we need to specify the following in the <MODEL-DIR>/conf/conf.yaml file (asterisks indicate required sections; the rest are optional):

- /conf/models* – In this section, you can configure one or more modeling units. When the framework code is run, it will automatically read all configuration files during runtime and append them to the config tree. Theoretically, you can specify all modeling units in the same conf.yaml file, but it's recommended to specify each modeling unit configuration in its respective directory or Git repository to minimize errors. The units are as follows:

  - {model-name}* – The name of the model.

  - source_directory* – A common source_dir path to use for all steps within the modeling unit.

  - preprocess – This section specifies preprocessing parameters.

  - train* – This section specifies training job parameters.

  - transform* – This section specifies SageMaker Transform job parameters for making predictions on the test data.

  - evaluate – This section specifies SageMaker Processing job parameters for generating a model metrics JSON report for the trained model.

  - registry* – This section specifies parameters for registering the trained model in SageMaker Model Registry.

- /conf/sagemakerPipeline* – This section defines the SageMaker Pipelines flow, including dependencies among steps. For single-model use cases, this section is defined at the end of the configuration file. For multi-model use cases, the sagemakerPipeline section only needs to be defined in the configuration file of one of the models (any of the models). We refer to this model as the anchor model. The parameters are as follows:

  - pipelineName* – Name of the SageMaker pipeline.

  - models* – Nested list of modeling units:

    - {model-name}* – Model identifier, which should match a {model-name} identifier in the /conf/models section.

      - steps* –

        - step_name* – Step name to be displayed in the SageMaker Pipelines DAG.

        - step_class* – (Union[Processing, Training, CreateModel, Transform, Metrics, RegisterModel])

        - step_type* – This parameter is only required for preprocessing steps, for which it should be set to preprocess. This is needed to distinguish preprocess and evaluate steps, both of which have a step_class of Processing.

        - enable_cache – ([Union[True, False]]). This indicates whether to enable SageMaker Pipelines caching for this step.

        - chain_input_source_step – ([list[step_name]]). You can use this to set the channel outputs of another step as input to this step.

        - chain_input_additional_prefix – This is only allowed for steps of the Transform step_class, and can be used in conjunction with the chain_input_source_step parameter to pinpoint the file that should be used as the input to the transform step.

  - dependencies – This section specifies the sequence in which the SageMaker Pipelines steps should be run. We have adapted the Apache Airflow notation for this section (for example, {step_name} >> {step_name}). If this section is left blank, explicit dependencies specified by the chain_input_source_step parameter or implicit dependencies define the SageMaker Pipelines DAG flow.

Note that we recommend having one training step per modeling unit. If multiple training steps are defined for a modeling unit, the subsequent steps implicitly take the last training step to create the model object, calculate metrics, and register the model. If you need to train multiple models, it's recommended to create multiple modeling units.
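To illustrate the Airflow-style notation used in the dependencies section, the following sketch parses strings such as {step_name} >> {step_name} into an upstream map and derives one valid run order. It is a sketch under stated assumptions rather than the framework's parser: the pipeline_section dictionary is a hypothetical, simplified layout (the real file is YAML and its exact nesting may differ), the step names are invented, and run_order relies on graphlib, which requires Python 3.9 or later.

```python
from graphlib import TopologicalSorter  # standard library, Python 3.9+

# Hypothetical excerpt shaped like the sagemakerPipeline section described above.
pipeline_section = {
    "pipelineName": "demo-training-pipeline",
    "models": {
        "demo-model": {
            "steps": [
                {"step_name": "demo-preprocess", "step_class": "Processing", "step_type": "preprocess"},
                {"step_name": "demo-train", "step_class": "Training",
                 "chain_input_source_step": ["demo-preprocess"]},
                {"step_name": "demo-register", "step_class": "RegisterModel"},
            ]
        }
    },
    # Assumed here to be a list of strings, one chain per entry.
    "dependencies": ["demo-preprocess >> demo-train >> demo-register"],
}


def parse_dependencies(lines):
    """Turn 'a >> b >> c' strings into a {step: set_of_upstream_steps} mapping."""
    upstream = {}
    for line in lines:
        names = [part.strip() for part in line.split(">>")]
        if any(not name for name in names):
            raise ValueError(f"Malformed dependency: {line!r}")
        for name in names:
            upstream.setdefault(name, set())
        # Each step depends on the step written immediately to its left.
        for before, after in zip(names, names[1:]):
            upstream[after].add(before)
    return upstream


def run_order(upstream):
    """Return one valid execution order; graphlib raises CycleError if the graph has a cycle."""
    return list(TopologicalSorter(upstream).static_order())
```

Rejecting cycles at parse time is a deliberate choice in this sketch: a circular dependency cannot form a DAG, so it is cheaper to fail before any pipeline step is created.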

Examples

In this section, we demonstrate three examples of ML model training DAGs created using the presented framework.

Single-model training: LightGBM

This is a single-model example for a classification use case where we use LightGBM in script mode on SageMaker. The dataset consists of categorical and numerical variables to predict the binary label Revenue (to predict if the subject makes a purchase or not). The preprocessing script is used to model the data for training and testing and then stage it in an S3 bucket. The S3 paths are then provided to the training step in the configuration file.

When the training step runs, SageMaker loads the file on the container at /opt/ml/input/data/{channelName}/, accessible via the environment variable SM_CHANNEL_{channelName} on the container (channelName = 'train' or 'test'). The training script does the following:

- Load the files locally from local container paths using the NumPy load module.

- Set hyperparameters for the training algorithm.

- Save the trained model on the local container path /opt/ml/model/.

SageMaker takes the content under /opt/ml/model/ to create a tarball that is used to deploy the model to SageMaker for hosting.
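The container paths described above are usually resolved at the top of a script-mode training script. The following is a minimal sketch of that argument handling only, assuming the common convention that hyperparameters arrive as command-line arguments and that SM_CHANNEL_TRAIN, SM_CHANNEL_TEST, and SM_MODEL_DIR are set in the training container. The parse_args helper and the two hyperparameters shown are illustrative choices, not taken from the example repository, and loading data or fitting LightGBM is left out.

```python
import argparse
import os


def parse_args(argv=None):
    """Read hyperparameters and container paths for a script-mode training script."""
    parser = argparse.ArgumentParser()
    # Illustrative hyperparameters; the example's real hyperparameters may differ.
    parser.add_argument("--num_leaves", type=int, default=31)
    parser.add_argument("--learning_rate", type=float, default=0.1)
    # Channel and model directories, falling back to the container paths described above.
    parser.add_argument("--train", default=os.environ.get("SM_CHANNEL_TRAIN", "/opt/ml/input/data/train"))
    parser.add_argument("--test", default=os.environ.get("SM_CHANNEL_TEST", "/opt/ml/input/data/test"))
    parser.add_argument("--model_dir", default=os.environ.get("SM_MODEL_DIR", "/opt/ml/model"))
    # parse_known_args tolerates extra arguments that the sketch does not declare.
    args, _unknown = parser.parse_known_args(argv)
    return args
```

A training script would then read its arrays from args.train and args.test and write the fitted model under args.model_dir so that SageMaker can package it.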

The transform step takes the staged test file and the trained model as input to make predictions. The output of the transform step is chained to the metrics step to evaluate the model against the ground truth, which is explicitly supplied to the metrics step. Finally, the output of the metrics step is implicitly chained to the register step to register the model in SageMaker Model Registry with information about the model's performance produced in the metrics step. The following figure shows a visual representation of the training DAG. You can refer to the scripts and configuration file for this example in the GitHub repo.

Single-model training: LLM fine-tuning

This is another single-model training example, where we orchestrate fine-tuning of a Falcon-40B large language model (LLM) from Hugging Face Hub for a text summarization use case. The preprocessing script loads the samsum dataset from Hugging Face, loads the tokenizer for the model, and processes the train/test data splits for fine-tuning the model on this domain data in the falcon-text-summarization-preprocess step.

The output is chained to the falcon-text-summarization-tuning step, where the training script loads the Falcon-40B LLM from Hugging Face Hub and starts accelerated fine-tuning using LoRA on the train split. The model is evaluated in the same step after fine-tuning, and the evaluation loss acts as a gate: if the loss fails the gate, the falcon-text-summarization-tuning step fails, which causes the SageMaker pipeline to stop before it is able to register the fine-tuned model. Otherwise, the falcon-text-summarization-tuning step runs successfully and the model is registered in SageMaker Model Registry. The following figure shows a visual representation of the LLM fine-tuning DAG. The scripts and configuration file for this example are available in the GitHub repo.
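The gating behavior described above can be sketched as a small check at the end of the training script. This is an illustration under assumptions, not the example's actual code: check_eval_loss, EvaluationGateError, and the max_eval_loss threshold are hypothetical names, and the sketch assumes the gate fails when the loss is above a threshold. It relies on the fact that an uncaught exception makes the script exit with a non-zero status, which marks the training job, and therefore the pipeline step, as failed.

```python
import math


class EvaluationGateError(RuntimeError):
    """Raised when the fine-tuned model does not pass the evaluation gate."""


def check_eval_loss(eval_loss, max_eval_loss):
    """Return eval_loss if it passes the gate; otherwise raise EvaluationGateError."""
    # A NaN loss is treated as a failure, because it cannot be compared to the threshold.
    if math.isnan(eval_loss) or eval_loss > max_eval_loss:
        raise EvaluationGateError(
            f"eval_loss={eval_loss} did not pass the gate (max_eval_loss={max_eval_loss})"
        )
    return eval_loss
```

Calling check_eval_loss as the last statement of the training script keeps the gate in the same step as fine-tuning, which matches the flow described above.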

Multi-model training

This is a multi-model training example where a principal component analysis (PCA) model is trained for dimensionality reduction, and a TensorFlow Multilayer Perceptron model is trained for California Housing Price prediction. The TensorFlow model's preprocessing step uses a trained PCA model to reduce dimensionality of its training data. We add a dependency in the configuration to ensure the TensorFlow model is registered after PCA model registration. The following figure shows a visual representation of the multi-model training DAG example. The scripts and configuration files for this example are available in the GitHub repo.

Clean up

Complete the following steps to clean up your resources:

- Use the AWS CLI to list and remove any remaining pipelines that are created by the Python scripts.

- Optionally, delete other AWS resources such as the S3 bucket or IAM role created outside SageMaker Pipelines.
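The steps above refer to the AWS CLI; as an alternative, the same listing and removal can be sketched in Python. The following assumes a boto3 SageMaker client is passed in and uses its list_pipelines and delete_pipeline operations. The delete_pipelines helper and the name-prefix convention are hypothetical, so review the returned names carefully before running anything like this against a real account.

```python
def delete_pipelines(sagemaker_client, name_prefix):
    """Delete every SageMaker pipeline whose name starts with name_prefix; return the names."""
    # Collect all matching names first, following NextToken across pages.
    names = []
    kwargs = {"PipelineNamePrefix": name_prefix}
    while True:
        page = sagemaker_client.list_pipelines(**kwargs)
        names.extend(summary["PipelineName"] for summary in page.get("PipelineSummaries", []))
        token = page.get("NextToken")
        if not token:
            break
        kwargs["NextToken"] = token
    # Delete only after listing has finished, so pagination is not disturbed.
    for name in names:
        sagemaker_client.delete_pipeline(PipelineName=name)
    return names


# Example usage (requires boto3 and AWS credentials):
#   import boto3
#   delete_pipelines(boto3.client("sagemaker"), "demo-")
```

Passing the client in as a parameter keeps the function free of credentials handling and lets it be exercised with a stub client.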

Conclusion

In this post, we presented a framework for automating SageMaker Pipelines DAG creation based on configuration files. The proposed framework offers a forward-looking solution to the challenge of orchestrating complex ML workloads. By using a configuration file, SageMaker Pipelines provides the flexibility to build orchestration with minimal code, so you can streamline the process of creating and managing both single-model and multi-model pipelines. This approach not only saves time and resources, but also promotes MLOps best practices, contributing to the overall success of ML initiatives. For more information about implementation details, review the GitHub repo.

About the Authors

Luis Felipe Yepez Barrios is a Machine Learning Engineer with AWS Professional Services, focused on scalable distributed systems and automation tooling to expedite scientific innovation in the field of Machine Learning (ML). Furthermore, he assists enterprise clients in optimizing their machine learning solutions through AWS services.

Jinzhao Feng is a Machine Learning Engineer at AWS Professional Services. He focuses on architecting and implementing large scale Generative AI and classical ML pipeline solutions. He is specialized in FMOps, LLMOps and distributed training.

Harsh Asnani is a Machine Learning Engineer at AWS. His background is in Applied Data Science with a focus on operationalizing Machine Learning workloads in the cloud at scale.

Hasan Shojaei is a Sr. Data Scientist with AWS Professional Services, where he helps customers across different industries solve their business challenges through the use of big data, machine learning, and cloud technologies. Prior to this role, Hasan led multiple initiatives to develop novel physics-based and data-driven modeling techniques for top energy companies. Outside of work, Hasan is passionate about books, hiking, photography, and history.

Alec Jenab is a Machine Learning Engineer who specializes in developing and operationalizing machine learning solutions at scale for enterprise customers. Alec is passionate about bringing innovative solutions to market, especially in areas where machine learning can meaningfully improve end user experience. Outside of work, he enjoys playing basketball, snowboarding, and discovering hidden gems in San Francisco.
