Generative AI agents are capable of producing human-like responses and engaging in natural language conversations by orchestrating a chain of calls to foundation models (FMs) and other augmenting tools based on user input. Instead of only fulfilling predefined intents through a static decision tree, agents are autonomous within the context of their suite of available tools. Amazon Bedrock is a fully managed service that makes leading FMs from AI companies available through an API, along with developer tooling to help build and scale generative AI applications.

In this post, we demonstrate how to build a generative AI financial services agent powered by Amazon Bedrock. The agent can assist users with finding their account information, completing a loan application, or answering natural language questions, while also citing sources for the answers it provides. This solution is intended to act as a launchpad for developers to create their own personalized conversational agents for various applications, such as virtual workers and customer support systems. Solution code and deployment assets can be found in the GitHub repository.

Amazon Lex supplies the natural language understanding (NLU) and natural language processing (NLP) interface for the open source LangChain conversational agent embedded within an AWS Amplify website. The agent is equipped with tools that include an Anthropic Claude 2.1 FM hosted on Amazon Bedrock and synthetic customer data stored in Amazon DynamoDB and Amazon Kendra to deliver the following capabilities:

- Provide personalized responses – Query DynamoDB for customer account information, such as mortgage summary details, balance due, and next payment date

- Access general knowledge – Harness the agent's reasoning logic in tandem with the vast amounts of data used to pre-train the different FMs provided by Amazon Bedrock to produce replies for any customer prompt

- Curate opinionated answers – Inform agent responses using an Amazon Kendra index configured with authoritative data sources: customer documents stored in Amazon Simple Storage Service (Amazon S3) and the Amazon Kendra Web Crawler configured for the customer's website

Solution overview

Demo recording

The following demo recording highlights agent functionality and technical implementation details.

Solution architecture

The following diagram illustrates the solution architecture.

The agent's response workflow includes the following steps:

- Users converse with the agent in natural language through their choice of web, SMS, or voice channels. The web channel includes an Amplify hosted website with an embedded Amazon Lex chatbot for a fictitious customer. SMS and voice channels can optionally be configured using Amazon Connect and messaging integrations for Amazon Lex. Amazon Lex processes each user request to determine the user's intent through a process called intent recognition, which involves analyzing and interpreting the user's input (text or speech) to understand the user's intended action or purpose.

- Amazon Lex then invokes an AWS Lambda handler for user intent fulfillment. The Lambda function associated with the Amazon Lex chatbot contains the logic and business rules required to process the user's intent. Lambda performs specific actions or retrieves information based on the user's input, making decisions and generating appropriate responses.

- The Lambda function implements the financial services agent logic as a LangChain conversational agent that can access customer-specific data stored in DynamoDB, curate opinionated responses using your documents and webpages indexed by Amazon Kendra, and provide general knowledge answers through the FM on Amazon Bedrock. Responses generated with Amazon Kendra include source attribution, demonstrating how you can provide additional contextual information to the agent through Retrieval Augmented Generation (RAG). RAG allows you to enhance your agent's ability to generate more accurate and contextually relevant responses using your own data.

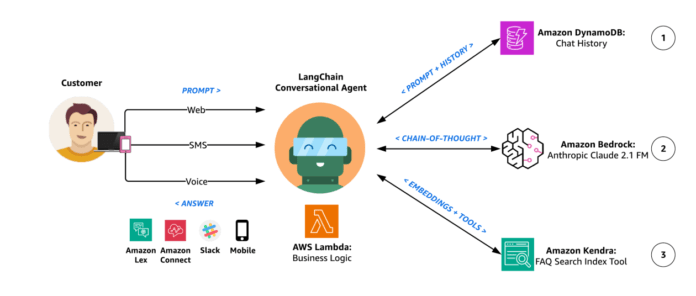
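The following is a minimal sketch of how a Lambda function can fulfill Amazon Lex V2 requests as described in the preceding steps. It is not the repository's handler. It assumes the Lex V2 Lambda event shape (`sessionState.intent`, `inputTranscript`, `sessionId`) and returns a `Close` dialog action with a plain-text message. The `run_agent` function and the `GreetingIntent` name are hypothetical placeholders, one for the LangChain agent and one for a predefined intent.

```python
from typing import Any, Callable, Dict


def run_agent(user_input: str, session_id: str) -> str:
    """Hypothetical placeholder for the LangChain conversational agent call."""
    return f"(agent reply to: {user_input})"


# Hypothetical predefined intents answered with fixed business logic instead of the agent.
PREDEFINED_HANDLERS: Dict[str, Callable[[Dict[str, Any]], str]] = {
    "GreetingIntent": lambda event: "Hello! How can I help you today?",
}


def close_response(intent: Dict[str, Any], message: str) -> Dict[str, Any]:
    """Build a Lex V2 response that marks the intent fulfilled and ends the turn."""
    return {
        "sessionState": {
            "dialogAction": {"type": "Close"},
            "intent": {**intent, "state": "Fulfilled"},
        },
        "messages": [{"contentType": "PlainText", "content": message}],
    }


def lambda_handler(event: Dict[str, Any], context: Any = None) -> Dict[str, Any]:
    intent = event["sessionState"]["intent"]
    handler = PREDEFINED_HANDLERS.get(intent["name"])
    if handler is not None:
        reply = handler(event)
    else:
        # Everything else (for example, FallbackIntent) goes to the agent.
        # The Lex session ID can double as the key for conversation memory.
        reply = run_agent(event.get("inputTranscript", ""), event.get("sessionId", ""))
    return close_response(intent, reply)


if __name__ == "__main__":
    sample_event = {
        "sessionId": "user-123",
        "inputTranscript": "What is my next payment date?",
        "sessionState": {"intent": {"name": "FallbackIntent", "slots": {}, "state": "InProgress"}},
    }
    print(lambda_handler(sample_event)["messages"][0]["content"])
```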

Agent architecture

The following diagram illustrates the agent architecture.

The agent's reasoning workflow includes the following steps:

- The LangChain conversational agent incorporates conversation memory so it can respond to multiple queries with contextual generation. In other words, the agent remembers what was said earlier in the conversation and uses that information to generate responses that are relevant and contextually appropriate to the ongoing dialog. Our agent uses LangChain's DynamoDB chat message history class as a conversation memory buffer, so it can recall past interactions and enhance the user experience with more meaningful, context-aware responses.

- The agent uses Anthropic Claude 2.1 on Amazon Bedrock to complete the desired task through a series of carefully self-generated text inputs known as prompts. The primary objective of prompt engineering is to elicit specific and accurate responses from the FM. Different prompt engineering techniques include:

  - Zero-shot – A single question is presented to the model without any additional clues. The model is expected to generate a response based solely on the given question.

  - Few-shot – A set of sample questions and their corresponding answers is included before the actual question. By seeing these examples, the model learns to respond in a similar manner.

  - Chain-of-thought – A specific style of few-shot prompting in which the prompt is designed to contain a series of intermediate reasoning steps, guiding the model through a logical thought process that ultimately leads to the desired answer.
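The following minimal sketch shows how conversation memory and these prompting techniques fit together. It is not LangChain's implementation. `InMemoryChatHistory` is a hypothetical stand-in for a DynamoDB-backed history keyed by session ID; its `add_user_message` and `add_ai_message` names mirror LangChain's chat history interface. `build_prompt` renders the Human/Assistant text-completion format used by Claude 2.x models. Passing no examples yields a zero-shot prompt. Question/answer pairs yield a few-shot prompt. Examples whose answers spell out intermediate steps yield a chain-of-thought prompt. The instruction text and example content are assumptions.

```python
from collections import defaultdict
from typing import Dict, List, Sequence, Tuple


class InMemoryChatHistory:
    """Stand-in for a DynamoDB-backed chat history: one message list per session ID."""

    def __init__(self) -> None:
        self._items: Dict[str, List[Tuple[str, str]]] = defaultdict(list)

    def add_user_message(self, session_id: str, text: str) -> None:
        self._items[session_id].append(("User", text))

    def add_ai_message(self, session_id: str, text: str) -> None:
        self._items[session_id].append(("FSI Agent", text))

    def messages(self, session_id: str) -> List[Tuple[str, str]]:
        return list(self._items[session_id])


def build_prompt(question: str,
                 history: Sequence[Tuple[str, str]] = (),
                 examples: Sequence[Tuple[str, str]] = ()) -> str:
    """Render a Claude 2.x text-completion prompt.

    No examples -> zero-shot; question/answer examples -> few-shot;
    examples whose answers show intermediate reasoning -> chain-of-thought.
    """
    lines = ["You are a financial services assistant. Answer the final question."]
    if examples:
        lines.append("\nExamples:")
        for example_q, example_a in examples:
            lines.append(f"Question: {example_q}\nAnswer: {example_a}")
    if history:
        # Prior turns give the model the context it needs for follow-up questions.
        lines.append("\nConversation so far:")
        lines.extend(f"{role}: {text}" for role, text in history)
    lines.append(f"\nQuestion: {question}")
    return "\n\nHuman: " + "\n".join(lines) + "\n\nAssistant:"


if __name__ == "__main__":
    memory = InMemoryChatHistory()
    memory.add_user_message("user-123", "What is my loan balance?")
    memory.add_ai_message("user-123", "Your balance is $250,000.")  # hypothetical data
    cot_examples = [(
        "If I pay $1,000 a month on an interest-free $12,000 balance, how long until it is paid off?",
        "The balance is $12,000 and each payment is $1,000. 12,000 / 1,000 = 12, so 12 months.",
    )]
    print(build_prompt("And when is my next payment due?", memory.messages("user-123"), cot_examples))
```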

Our agent uses chain-of-thought reasoning by running a set of actions upon receiving a request. Following each action, the agent enters the observation step, where it expresses a thought. If it has not yet reached a final answer, the agent iterates, selecting different actions to progress toward the final answer. See the following example code:

```text
Thought: Do I need to use a tool? Yes
Action: The action to take
Action Input: The input to the action
Observation: The result of the action

Thought: Do I need to use a tool? No
FSI Agent: [answer and source documents]
```
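The Thought/Action/Observation cycle above can be sketched as a small, framework-free loop. This is a simplified illustration, not LangChain's agent executor. The model is any callable that returns text, and tools are plain functions keyed by name. The regular expression for parsing `Action:` and `Action Input:` lines, the `Kendra Search` tool name, and the step limit are all assumptions.

```python
import re
from typing import Callable, Dict

# "Action:" must be followed directly by a colon, so "Action Input:" lines do not match it.
ACTION_RE = re.compile(r"Action:\s*(.+?)\s*\nAction Input:\s*(.+)")
FINAL_PREFIX = "FSI Agent:"


def run_react_loop(llm: Callable[[str], str],
                   tools: Dict[str, Callable[[str], str]],
                   question: str,
                   max_steps: int = 5) -> str:
    """Alternate Thought/Action/Observation until the model emits a final answer."""
    scratchpad = f"Question: {question}\n"
    for _ in range(max_steps):
        output = llm(scratchpad)
        scratchpad += output + "\n"
        if FINAL_PREFIX in output:
            # "Do I need to use a tool? No" -> the text after the prefix is the answer.
            return output.split(FINAL_PREFIX, 1)[1].strip()
        match = ACTION_RE.search(output)
        if match is None:
            raise ValueError(f"Could not parse model output: {output!r}")
        tool_name, tool_input = match.group(1), match.group(2).strip()
        tool = tools.get(tool_name)
        observation = tool(tool_input) if tool else f"Unknown tool: {tool_name}"
        # The observation is fed back so the next thought can build on it.
        scratchpad += f"Observation: {observation}\n"
    return "Stopped after reaching the step limit."


if __name__ == "__main__":
    scripted = iter([
        "Thought: Do I need to use a tool? Yes\nAction: Kendra Search\nAction Input: closing costs",
        "Thought: Do I need to use a tool? No\nFSI Agent: Closing costs include appraisal and title fees.",
    ])
    tools = {"Kendra Search": lambda q: f"(search results for {q})"}
    print(run_react_loop(lambda prompt: next(scripted), tools, "What other costs will I pay at closing?"))
```

A real agent would replace the scripted callable with an FM call and let the model choose among several tools. The loop structure stays the same.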

- As part of the agent's different reasoning paths and self-evaluated choices about the next course of action, it can access synthetic customer data sources through an Amazon Kendra Index Retriever tool. Using Amazon Kendra, the agent performs contextual search across a wide range of content types, including documents, FAQs, knowledge bases, manuals, and websites. For more details on supported data sources, refer to Data sources. The agent can use this tool to provide opinionated responses to user prompts that should be answered from an authoritative, customer-provided knowledge library instead of the more general knowledge corpus used to pre-train the Amazon Bedrock FM.
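To illustrate how retrieved passages can carry source attribution into the agent's answer, the following sketch turns an Amazon Kendra response into prompt context plus a de-duplicated list of source URIs. It assumes the `ResultItems` shape returned by the Kendra `Retrieve` API (`DocumentTitle`, `Content`, `DocumentURI`). It also accepts the `{"Text": ...}` objects that the `Query` API returns for `DocumentTitle` and `DocumentExcerpt`. The `top_k` cutoff and the output format are illustrative choices.

```python
from typing import Any, Dict, List, Tuple


def _text(value: Any) -> str:
    """Kendra returns plain strings (Retrieve) or {"Text": ...} objects (Query)."""
    if isinstance(value, dict):
        return value.get("Text", "")
    return value or ""


def format_kendra_results(response: Dict[str, Any], top_k: int = 3) -> Tuple[str, List[str]]:
    """Return (context passages for the prompt, de-duplicated source URIs)."""
    passages: List[str] = []
    sources: List[str] = []
    for item in response.get("ResultItems", [])[:top_k]:
        title = _text(item.get("DocumentTitle"))
        body = _text(item.get("Content") or item.get("DocumentExcerpt"))
        uri = item.get("DocumentURI", "")
        passages.append(f"Document: {title}\n{body}")
        if uri and uri not in sources:
            sources.append(uri)  # cited alongside the final answer
    return "\n\n".join(passages), sources
```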

Deployment guide

In the following sections, we discuss the key steps to deploy the solution, including pre-deployment and post-deployment.

Pre-deployment

Before you deploy the solution, you need to create your own forked version of the solution repository with a token-secured webhook to automate continuous deployment of your Amplify website. The Amplify configuration points to a GitHub source repository from which our website's frontend is built.

Fork and clone the generative-ai-amazon-bedrock-langchain-agent-example repository

- To control the source code that builds your Amplify website, follow the instructions in Fork a repository to fork the generative-ai-amazon-bedrock-langchain-agent-example repository. This creates a copy of the repository that is disconnected from the original code base, so you can make the appropriate modifications.

- Note your forked repository URL. You will use it to clone the repository in the next step and to set the Amplify repository environment variable used by the solution deployment automation script.

- Clone your forked repository by running the `git clone` command with your forked repository URL.

Create a GitHub personal access token

The Amplify hosted website uses a GitHub personal access token (PAT) as the OAuth token for third-party source control. The OAuth token is used to create a webhook and a read-only deploy key using SSH cloning.

- To create your PAT, follow the instructions in Creating a personal access token (classic). You may prefer to use a GitHub app to access resources on behalf of an organization or for long-lived integrations.

- Take note of your PAT before closing your browser. You will use it to configure the GITHUB_PAT environment variable used in the solution deployment automation script. The script publishes your PAT to AWS Secrets Manager using AWS Command Line Interface (AWS CLI) commands, and the secret name is used as the GitHubTokenSecretName AWS CloudFormation parameter.

Deployment

The solution deployment automation script uses the parameterized CloudFormation template, GenAI-FSI-Agent.yml, to automate provisioning of the following solution resources:

- An Amplify website to simulate your front-end environment.

- An Amazon Lex bot configured through a bot import deployment package.

- Four DynamoDB tables:

  - UserPendingAccountsTable – Records pending transactions (for example, loan applications).

  - UserExistingAccountsTable – Contains user account information (for example, mortgage account summary).

  - ConversationIndexTable – Tracks the conversation state.

  - ConversationTable – Stores conversation history.

- An S3 bucket that contains the Lambda agent handler, Lambda data loader, and Amazon Lex deployment packages, along with example customer FAQ and mortgage application documents.

- Two Lambda functions:

  - Agent handler – Contains the LangChain conversational agent logic that can intelligently employ a variety of tools based on user input.

  - Data loader – Loads example customer account data into UserExistingAccountsTable and is invoked as a custom CloudFormation resource during stack creation.

- A Lambda layer for the Amazon Bedrock Boto3, LangChain, and pdfrw libraries. The layer supplies LangChain's FM library with an Amazon Bedrock model as the underlying FM, and provides pdfrw as an open source PDF library for creating and modifying PDF files.

- An Amazon Kendra index that provides a searchable index of authoritative customer information, including documents, FAQs, knowledge bases, manuals, websites, and more.

- Two Amazon Kendra data sources:

  - Amazon S3 – Hosts an example customer FAQ document.

  - Amazon Kendra Web Crawler – Configured with a root domain that emulates the customer-specific website (for example, <your-company>.com).

- AWS Identity and Access Management (IAM) permissions for the preceding resources.
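The data loader is described here only as a Lambda function invoked as a custom CloudFormation resource. The following sketch shows what such a function might involve. It marshals plain Python records into DynamoDB's low-level `AttributeValue` JSON and groups them into `BatchWriteItem` request bodies of at most 25 items. It then builds the JSON body that a custom resource must send back to CloudFormation's pre-signed `ResponseURL`. The `TableName` resource property and the injected `write_batch` callable are assumptions that keep the sketch runnable offline. With Boto3, you would pass each request body as `RequestItems` to `batch_write_item` and PUT the response body to `event["ResponseURL"]`. A production loader would also retry any `UnprocessedItems`.

```python
import json
from typing import Any, Callable, Dict, Iterator, List, Optional


def to_attribute_value(value: Any) -> Dict[str, Any]:
    """Marshal a Python value into DynamoDB's low-level AttributeValue JSON."""
    if isinstance(value, bool):  # check bool before int: bool is an int subclass
        return {"BOOL": value}
    if isinstance(value, (int, float)):
        return {"N": str(value)}  # DynamoDB numbers travel as strings
    if isinstance(value, str):
        return {"S": value}
    if value is None:
        return {"NULL": True}
    if isinstance(value, dict):
        return {"M": {k: to_attribute_value(v) for k, v in value.items()}}
    if isinstance(value, list):
        return {"L": [to_attribute_value(v) for v in value]}
    raise TypeError(f"Unsupported type: {type(value).__name__}")


def batch_put_requests(table: str, items: List[Dict[str, Any]],
                       batch_size: int = 25) -> Iterator[Dict[str, List[Dict[str, Any]]]]:
    """Yield BatchWriteItem request bodies (at most 25 items per call)."""
    for start in range(0, len(items), batch_size):
        chunk = items[start:start + batch_size]
        yield {table: [{"PutRequest": {"Item": {k: to_attribute_value(v) for k, v in item.items()}}}
                       for item in chunk]}


def cfn_response_body(event: Dict[str, Any], status: str, reason: str = "",
                      data: Optional[Dict[str, Any]] = None) -> str:
    """JSON body the custom resource must PUT to event["ResponseURL"]."""
    return json.dumps({
        "Status": status,  # "SUCCESS" or "FAILED"
        "Reason": reason,
        "PhysicalResourceId": event.get("PhysicalResourceId", event["LogicalResourceId"]),
        "StackId": event["StackId"],
        "RequestId": event["RequestId"],
        "LogicalResourceId": event["LogicalResourceId"],
        "Data": data or {},
    })


def handle(event: Dict[str, Any], items: List[Dict[str, Any]],
           write_batch: Callable[[Dict[str, Any]], None]) -> str:
    """Load items on Create; treat Update and Delete as no-ops."""
    try:
        if event["RequestType"] == "Create":
            table = event["ResourceProperties"]["TableName"]  # hypothetical property
            for request_items in batch_put_requests(table, items):
                write_batch(request_items)
        return cfn_response_body(event, "SUCCESS")
    except Exception as exc:
        # Always answer CloudFormation, or the stack waits until the request times out.
        return cfn_response_body(event, "FAILED", reason=str(exc))
```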

AWS CloudFormation prepopulates stack parameters with the default values provided in the template. To provide alternative input values, you can specify parameters as environment variables that are referenced in the `ParameterKey=<ParameterKey>,ParameterValue=<Value>` pairs in the shell script's `aws cloudformation create-stack` command.
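The environment-variable mechanism can be illustrated with a small Python helper that assembles the `aws cloudformation create-stack` arguments. It emits a `ParameterKey=...,ParameterValue=...` pair only for variables that are set, so the template defaults apply otherwise. This is a sketch, not the repository's create-stack.sh. The stack name, the `GITHUB_TOKEN_SECRET_NAME` variable, and the `CAPABILITY_NAMED_IAM` acknowledgment are assumptions; that acknowledgment is typically required when a template creates named IAM resources. `GitHubTokenSecretName` is the parameter named in the pre-deployment steps.

```python
import os
import shlex
from typing import List, Mapping, Optional


def build_create_stack_command(stack_name: str,
                               template_file: str,
                               parameter_env: Mapping[str, str],
                               environ: Optional[Mapping[str, str]] = None) -> List[str]:
    """Build an `aws cloudformation create-stack` argument list.

    parameter_env maps a CloudFormation ParameterKey to the environment variable that
    holds its value. Unset or empty variables are skipped so the template default applies.
    """
    environ = os.environ if environ is None else environ
    argv = ["aws", "cloudformation", "create-stack",
            "--stack-name", stack_name,
            "--template-body", f"file://{template_file}"]
    params = [f"ParameterKey={key},ParameterValue={environ[var]}"
              for key, var in parameter_env.items() if environ.get(var)]
    if params:
        argv += ["--parameters", *params]
    argv += ["--capabilities", "CAPABILITY_NAMED_IAM"]  # assumption: template creates named IAM roles
    return argv


if __name__ == "__main__":
    cmd = build_create_stack_command(
        "genai-fsi-agent",  # hypothetical stack name
        "GenAI-FSI-Agent.yml",
        {"GitHubTokenSecretName": "GITHUB_TOKEN_SECRET_NAME"},  # hypothetical variable name
        environ={"GITHUB_TOKEN_SECRET_NAME": "my-github-pat-secret"},
    )
    print(shlex.join(cmd))
```

Returning an argument list rather than a single string lets you pass the command straight to `subprocess.run` without shell quoting problems.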

- Before you run the shell script, navigate to your forked version of the generative-ai-amazon-bedrock-langchain-agent-example repository as your working directory and make the shell script executable (for example, with `chmod u+x create-stack.sh`).

- Set the Amplify repository and GitHub PAT environment variables using the values you noted during the pre-deployment steps.

- Finally, run the solution deployment automation script to deploy the solution's resources, including the GenAI-FSI-Agent.yml CloudFormation stack:

```shell
source ./create-stack.sh
```

Solution Deployment Automation Script

The preceding `source ./create-stack.sh` shell command runs AWS CLI commands to deploy the solution stack.

Post-deployment

In this section, we discuss the post-deployment steps for launching a frontend application that is intended to emulate the customer's production application. The financial services agent will operate as an embedded assistant within the example web UI.

Launch a web UI for your chatbot

The Amazon Lex web UI, also known as the chatbot UI, allows you to quickly provision a comprehensive web client for Amazon Lex chatbots. The UI integrates with Amazon Lex to provide a JavaScript plugin that incorporates an Amazon Lex-powered chat widget into your existing web application. In this case, we use the web UI to emulate an existing customer web application with an embedded Amazon Lex chatbot. Complete the following steps:

- Follow the instructions to deploy the Amazon Lex web UI CloudFormation stack.

- On the AWS CloudFormation console, navigate to the stack's Outputs tab and locate the value for SnippetUrl.

- Copy the web UI iframe snippet, which will resemble the format under Adding the ChatBot UI to your Website as an Iframe.

- Edit your forked version of the Amplify GitHub source repository by adding your web UI JavaScript plugin to the section labeled `<-- Paste your Lex Web UI JavaScript plugin here -->` in each of the HTML files under the front-end directory: index.html, contact.html, and about.html.

Amplify offers an automatic construct and launch pipeline that triggers based mostly on new commits to your forked repository and publishes the brand new model of your web site to your Amplify area. You’ll be able to view the deployment standing on the Amplify console.

Entry the Amplify web site

Along with your Amazon Lex internet UI JavaScript plugin in place, you are actually able to launch your Amplify demo web site.

- To entry your web site’s area, navigate to the CloudFormation stack’s Outputs tab and find the Amplify area URL. Alternatively, use the next command:

- After you entry your Amplify area URL, you possibly can proceed with testing and validation.
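If you prefer to read stack outputs programmatically, the following sketch pulls a single `OutputValue` from the JSON that `aws cloudformation describe-stacks --output json` prints. `SnippetUrl` is the output key named in the web UI steps. The key for the Amplify domain URL is not given here, so check the stack's Outputs tab for its actual name. The AWS CLI can also do this directly with a JMESPath filter such as `--query "Stacks[0].Outputs[?OutputKey=='SnippetUrl'].OutputValue" --output text`.

```python
import json
from typing import Any, Dict


def get_stack_output(describe_stacks_response: Dict[str, Any], output_key: str) -> str:
    """Return the OutputValue for output_key from describe-stacks JSON."""
    for stack in describe_stacks_response.get("Stacks", []):
        for output in stack.get("Outputs", []):
            if output.get("OutputKey") == output_key:
                return output["OutputValue"]
    raise KeyError(f"Output {output_key!r} not found")


if __name__ == "__main__":
    # Hypothetical sample of saved CLI output; the value is a placeholder.
    saved = '{"Stacks": [{"Outputs": [{"OutputKey": "SnippetUrl", "OutputValue": "https://example.com/snippet"}]}]}'
    print(get_stack_output(json.loads(saved), "SnippetUrl"))
```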

Testing and validation

The following testing procedure aims to verify that the agent correctly identifies and understands user intents for accessing customer data (such as account information), fulfilling business workflows through predefined intents (such as completing a mortgage application), and answering general queries, such as the following sample prompts:

- Why should I use <your-company>?

- How competitive are their rates?

- Which type of mortgage should I use?

- What are current mortgage trends?

- How much do I need saved for a down payment?

- What other costs will I pay at closing?

Response accuracy is determined by evaluating the relevance, coherence, and human-like quality of the answers generated by the Anthropic Claude 2.1 FM provided through Amazon Bedrock. The source links supplied with each response (for example, <your-company>.com, based on the Amazon Kendra Web Crawler configuration) should also be confirmed as credible.
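Part of confirming that source links are credible can be automated. The following sketch extracts URLs from an agent response with a simple regular expression, which is a heuristic and not a full URL parser. It flags any URL whose host is not an allowlisted domain or a subdomain of one. You would supply the allowlist yourself, for example your <your-company>.com domain. The check complements, rather than replaces, human review of relevance and coherence.

```python
import re
from typing import Iterable, List
from urllib.parse import urlparse

# Stop at whitespace, brackets, and quotes so "(https://...)" and quoted links parse cleanly.
URL_RE = re.compile(r"https?://[^\s<>()\[\]\"']+")


def extract_source_urls(response_text: str) -> List[str]:
    """Find URLs in the response, trimming sentence punctuation from the end."""
    return [url.rstrip(".,;:") for url in URL_RE.findall(response_text)]


def untrusted_sources(response_text: str, trusted_domains: Iterable[str]) -> List[str]:
    """Return cited URLs whose host is neither a trusted domain nor a subdomain of one."""
    trusted = [d.lower().lstrip(".") for d in trusted_domains]
    flagged = []
    for url in extract_source_urls(response_text):
        host = (urlparse(url).hostname or "").lower()
        if not any(host == d or host.endswith("." + d) for d in trusted):
            flagged.append(url)
    return flagged
```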

Provide personalized responses

Verify that the agent successfully accesses and uses relevant customer information in DynamoDB to tailor user-specific responses.

Note that the use of PIN authentication within the agent is for demonstration purposes only and should not be used in any production implementation.

Curate opinionated answers

Validate that the agent answers opinionated questions credibly by sourcing its replies from authoritative customer documents and webpages indexed by Amazon Kendra.

Deliver contextual generation

Determine the agent's ability to provide contextually relevant responses based on previous chat history.

Access general knowledge

Confirm the agent's access to general knowledge for non-customer-specific, non-opinionated queries that require accurate and coherent responses based on Amazon Bedrock FM training data and RAG.

Run predefined intents

Ensure the agent correctly interprets and conversationally fulfills user prompts that are intended to be routed to predefined intents, such as completing a loan application as part of a business workflow.
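Predefined intents such as the loan application are typically fulfilled by collecting slot values turn by turn. The following sketch shows that pattern for Amazon Lex V2. It returns an `ElicitSlot` dialog action for the first required slot that has no `interpretedValue`, and a `Close` action once every slot is filled. The slot names and prompt wording are hypothetical. The PDF generation that the solution performs with pdfrw is only marked with a comment.

```python
from typing import Any, Dict, List, Optional

# Hypothetical slot order for a loan application intent; the real bot's slots may differ.
REQUIRED_SLOTS: List[str] = ["FirstName", "LastName", "LoanAmount", "PropertyValue"]


def next_missing_slot(slots: Dict[str, Optional[Dict[str, Any]]]) -> Optional[str]:
    """Return the first required slot that Lex has not filled yet (unfilled slots may be None)."""
    for name in REQUIRED_SLOTS:
        slot = slots.get(name)
        if not slot or not (slot.get("value") or {}).get("interpretedValue"):
            return name
    return None


def loan_application_turn(event: Dict[str, Any]) -> Dict[str, Any]:
    intent = event["sessionState"]["intent"]
    missing = next_missing_slot(intent.get("slots") or {})
    if missing:
        # Ask for the next missing value and keep the intent in progress.
        return {
            "sessionState": {
                "dialogAction": {"type": "ElicitSlot", "slotToElicit": missing},
                "intent": {**intent, "state": "InProgress"},
            },
            "messages": [{"contentType": "PlainText", "content": f"Please provide your {missing}."}],
        }
    # All slots are filled: a handler could generate the loan application PDF here.
    return {
        "sessionState": {"dialogAction": {"type": "Close"}, "intent": {**intent, "state": "Fulfilled"}},
        "messages": [{"contentType": "PlainText", "content": "Your loan application is complete."}],
    }
```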

The following is the resulting loan application document completed through the conversational flow.

The multi-channel support functionality can be tested in conjunction with the preceding assessment measures across web, SMS, and voice channels. For more information about integrating the chatbot with other services, refer to Integrating an Amazon Lex V2 bot with Twilio SMS and Add an Amazon Lex bot to Amazon Connect.

Clean up

To avoid charges in your AWS account, clean up the solution's provisioned resources.

- Revoke the GitHub personal access token. GitHub PATs are configured with an expiration value. If you want to ensure that your PAT can't be used for programmatic access to your forked Amplify GitHub repository before it expires, you can revoke the PAT by following GitHub's instructions.

- Delete the GenAI-FSI-Agent.yml CloudFormation stack and other solution resources using the solution deletion automation script. The following commands use the default stack name. If you customized the stack name, adjust the commands accordingly.

```shell
# export STACK_NAME=<YOUR-STACK-NAME>
./delete-stack.sh
```

Solution Deletion Automation Script

The delete-stack.sh shell script deletes the resources that were originally provisioned using the solution deployment automation script, including the GenAI-FSI-Agent.yml CloudFormation stack.

Considerations

Although the solution in this post showcases the capabilities of a generative AI financial services agent powered by Amazon Bedrock, it's essential to recognize that this solution is not production-ready. Rather, it serves as an illustrative example for developers aiming to create personalized conversational agents for diverse applications like virtual workers and customer support systems. A developer's path to production would iterate on this sample solution with the following considerations.

Security and privacy

Ensure data security and user privacy throughout the implementation process. Implement appropriate access controls and encryption mechanisms to protect sensitive information. Solutions like the generative AI financial services agent benefit from data that isn't yet available to the underlying FM, which often means you will want to use your own private data for the biggest jump in capability. Consider the following best practices:

- Keep it secret, keep it safe – You want this data to stay completely protected, secure, and private during the generative process, and you want control over how this data is shared and used.

- Establish usage guardrails – Understand how a service uses data before making it available to your teams. Create and distribute the rules for which data can be used with which service. Make these rules clear to your teams so they can move quickly and prototype safely.

- Involve Legal sooner rather than later – Have your Legal teams review the terms and conditions and service cards of the services you plan to use before you start running any sensitive data through them. Your Legal partners have never been more important than they are today.

As an example of how we are thinking about this at AWS with Amazon Bedrock: all data is encrypted and does not leave your VPC, and Amazon Bedrock makes a separate copy of the base FM that is accessible only to the customer, and fine-tunes or trains this private copy of the model.

User acceptance testing

Conduct user acceptance testing (UAT) with real users to evaluate the performance, usability, and user satisfaction of the generative AI financial services agent. Gather feedback and make the necessary improvements based on user input.

Deployment and monitoring

Deploy the fully tested agent on AWS, and implement monitoring and logging to track its performance, identify issues, and optimize the system as needed. Lambda monitoring and troubleshooting features are enabled by default for the agent's Lambda handler.

Maintenance and updates

Regularly update the agent with the latest FM versions and data to enhance its accuracy and effectiveness. Monitor customer-specific data in DynamoDB and synchronize your Amazon Kendra data source indexing as needed.

Conclusion

In this post, we explored generative AI agents and their ability to facilitate human-like interactions by orchestrating calls to FMs and other complementary tools. By following this guide, you can use Amazon Bedrock, LangChain, and existing customer resources to implement, test, and validate a reliable agent that provides users with accurate and personalized financial assistance through natural language conversations.

In an upcoming post, we will demonstrate how the same functionality can be delivered using an alternative approach with Agents for Amazon Bedrock and Knowledge base for Amazon Bedrock. This fully AWS-managed implementation will further explore how to offer intelligent automation and data search capabilities through personalized agents that transform the way users interact with your applications, making interactions more natural, efficient, and effective.

About the author

Kyle T. Blocksom is a Sr. Solutions Architect with AWS based in Southern California. Kyle's passion is to bring people together and leverage technology to deliver solutions that customers love. Outside of work, he enjoys surfing, eating, wrestling with his dog, and spoiling his niece and nephew.
