Researchers from Peking University and Alibaba Group introduced FastV to address the challenges caused by inefficient attention computation in Large Vision-Language Models (LVLMs). Recent models such as LLaVA-1.5 and Video-LLaVA have shown significant advancements in LVLMs, but they struggle with a bottleneck in the attention mechanism concerning the handling of visual tokens. The researchers found that the attention mechanism within LVLMs exhibits a bias towards textual tokens, resulting in inefficient utilization of visual information.

Currently, LVLMs process multimodal inputs by transforming images into tokens and feeding them alongside textual tokens into a transformer-based decoder. The researchers identified that visual tokens, which constitute a substantial portion of the input data, receive disproportionately lower attention scores than textual tokens, especially in the deeper layers of LVLMs. This inefficiency leads to suboptimal utilization of visual information and hampers the overall performance and computational efficiency of LVLMs. To address this, they propose FastV, a dynamic pruning method designed to optimize computational efficiency in LVLMs. FastV dynamically prunes unnecessary visual tokens based on their attention scores, significantly reducing computational costs without compromising performance across a variety of vision-language tasks.

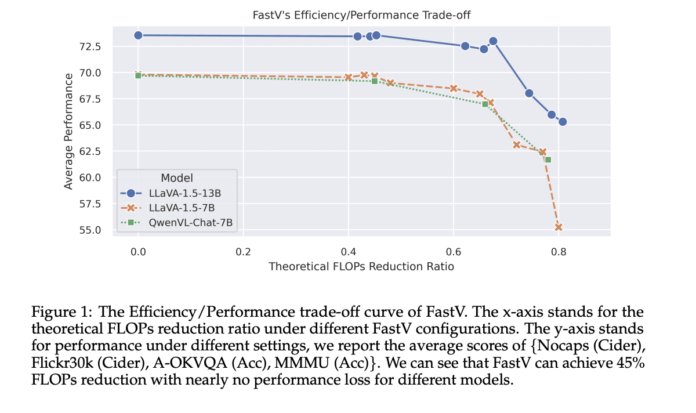

The proposed method, FastV, operates by introducing a dynamic pruning mechanism for visual tokens during the inference phase of LVLMs. It ranks the importance of visual tokens based on their attention scores and selectively prunes the less relevant tokens beyond a certain layer. This selective pruning strategy significantly reduces the computational burden of LVLMs, particularly in deep layers, where the attention mechanism tends to allocate fewer resources to visual tokens. By leveraging this insight, FastV achieves a substantial reduction in FLOPs while maintaining strong performance across various vision-language tasks.
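The ranking-and-pruning step described above can be illustrated with a minimal NumPy sketch. This is not the authors' implementation: the function name `prune_visual_tokens`, the `keep_ratio` parameter, and the choice to score each token by the attention it receives averaged over heads and query positions are all assumptions made for illustration.

```python
import numpy as np

def prune_visual_tokens(hidden, attn, visual_idx, keep_ratio=0.5):
    """Illustrative attention-based pruning of visual tokens (hypothetical helper).

    hidden:     (seq_len, dim) hidden states at the chosen pruning layer
    attn:       (heads, seq_len, seq_len) attention weights at that layer
    visual_idx: positions of the visual tokens in the sequence
    keep_ratio: fraction of visual tokens to keep (assumed parameter)
    Returns the pruned hidden states and the kept positions in original order.
    """
    visual_idx = np.asarray(visual_idx)
    # Assumed scoring rule: attention received by each token,
    # averaged over heads and over all query positions.
    received = attn.mean(axis=0).mean(axis=0)  # shape: (seq_len,)

    n_keep = max(1, int(round(len(visual_idx) * keep_ratio)))
    order = np.argsort(-received[visual_idx], kind="stable")
    top_visual = visual_idx[order[:n_keep]]

    # Keep every non-visual token plus the highest-scoring visual tokens.
    keep_mask = np.ones(hidden.shape[0], dtype=bool)
    keep_mask[visual_idx] = False
    keep_mask[top_visual] = True
    kept_positions = np.nonzero(keep_mask)[0]
    return hidden[kept_positions], kept_positions
```

Later layers would then run on the shorter sequence only. Keeping the surviving tokens in their original order avoids having to reorder them before the next layer.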

FastV’s flexibility allows users to customize the trade-off between computational efficiency and performance according to their specific requirements, making it a versatile and practical solution for deploying LVLMs in resource-constrained environments. FastV has shown significant effectiveness in precisely targeting image tokens for reduction, thereby optimizing efficiency without compromising the model’s overall functionality.
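To get a feel for this trade-off, the sketch below counts how many token positions the decoder processes across all layers, with and without pruning. It is only a rough proxy and not the FLOPs estimate from the paper, since attention cost grows faster than linearly with sequence length. The function name, the parameters `prune_layer` and `keep_ratio`, and the example sizes are illustrative assumptions.

```python
def relative_token_load(num_layers, n_text, n_visual, prune_layer, keep_ratio):
    """Fraction of (layer, token) positions processed relative to no pruning.

    Hypothetical helper: layers before `prune_layer` see every token,
    and the remaining layers see all text tokens plus the kept visual tokens.
    """
    n_kept = int(round(n_visual * keep_ratio))
    full = num_layers * (n_text + n_visual)
    pruned = (prune_layer * (n_text + n_visual)
              + (num_layers - prune_layer) * (n_text + n_kept))
    return pruned / full

# Illustrative sizes only: 32 decoder layers, 64 text tokens, 576 visual tokens.
for layer, ratio in [(2, 0.5), (2, 0.25), (16, 0.5)]:
    load = relative_token_load(32, 64, 576, prune_layer=layer, keep_ratio=ratio)
    print(f"prune after layer {layer}, keep {ratio:.0%} of visual tokens: {load:.3f}")
```

Pruning earlier and keeping fewer visual tokens lowers the load, and this is the lever a user would tune against task accuracy.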

In conclusion, the proposed method addresses the inefficiency of attention computation in LVLMs, particularly concerning the handling of visual tokens. FastV demonstrates remarkable effectiveness in reducing computational costs without sacrificing output quality across a wide range of vision-language tasks. Overall, FastV represents a significant step towards improving the computational efficiency and practical deployment of LVLMs, offering a promising solution to the challenges posed by resource constraints in real-world applications.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Pragati Jhunjhunwala is a consulting intern at MarktechPost. She is currently pursuing her B.Tech from the Indian Institute of Technology (IIT), Kharagpur. She is a tech enthusiast and has a keen interest in the scope of software and data science applications. She is always reading about developments in different fields of AI and ML.