Generative AI has opened up a lot of potential in the field of AI. We are seeing numerous uses, including text generation, code generation, summarization, translation, chatbots, and more. One evolving area is using natural language processing (NLP) to unlock new opportunities for accessing data through intuitive SQL queries. Instead of dealing with complex technical code, business users and data analysts can ask questions about data and insights in plain language. The primary goal is to automatically generate SQL queries from natural language text. To do this, the text input is transformed into a structured representation, and from this representation, a SQL query that can be used to access a database is created.

In this post, we provide an introduction to text to SQL (Text2SQL) and explore use cases, challenges, design patterns, and best practices. Specifically, we discuss the following:

- Why do we need Text2SQL

- Key components for text to SQL

- Prompt engineering considerations for natural language or text to SQL

- Optimizations and best practices

- Architecture patterns

Why do we need Text2SQL?

Today, a large amount of data is available in traditional data analytics, data warehousing, and databases, which may not be easy for the majority of organization members to query or understand. The primary goal of Text2SQL is to make querying databases more accessible to non-technical users, who can provide their queries in natural language.

NLP SQL enables business users to analyze data and get answers by typing or speaking questions in natural language, such as the following:

- “Show total sales for each product last month”

- “Which products generated more revenue?”

- “What percentage of customers are from each region?”

Amazon Bedrock is a fully managed service that offers a choice of high-performing foundation models (FMs) through a single API, enabling you to easily build and scale generative AI applications. It can be used to generate SQL queries from questions similar to the ones listed above, query an organization's structured data, and generate natural language responses from the query results.
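As a minimal sketch of the generation step, the following Python calls a Bedrock model through the `boto3` `bedrock-runtime` client's Converse API and pulls the query out of the reply. The model ID, the prompt wording, and the convention of asking the model to wrap its answer in `<sql></sql>` tags are assumptions for illustration; running `generate_sql` requires AWS credentials and access to the chosen model in your Region. A real prompt would also carry schema details, as discussed later in this post.

```python
import re

# Example model ID (an assumption); use any text model enabled in your account and Region.
MODEL_ID = "anthropic.claude-3-haiku-20240307-v1:0"

# Hypothetical prompt; asking for <sql></sql> tags makes the answer easy to extract.
PROMPT_TEMPLATE = (
    "Write a single SQL query that answers the question below. "
    "Return only the query, wrapped in <sql></sql> tags.\n\n"
    "Question: {question}"
)


def extract_sql(model_text: str) -> str:
    """Return the text between <sql> tags, or the whole reply if no tags are found."""
    match = re.search(r"<sql>(.*?)</sql>", model_text, re.DOTALL | re.IGNORECASE)
    return (match.group(1) if match else model_text).strip()


def generate_sql(question: str, model_id: str = MODEL_ID) -> str:
    """Ask a Bedrock model for SQL through the Converse API."""
    import boto3  # imported lazily so extract_sql works without the AWS SDK installed

    client = boto3.client("bedrock-runtime")
    response = client.converse(
        modelId=model_id,
        messages=[
            {"role": "user", "content": [{"text": PROMPT_TEMPLATE.format(question=question)}]}
        ],
        inferenceConfig={"maxTokens": 512, "temperature": 0.0},  # deterministic-leaning output
    )
    reply = response["output"]["message"]["content"][0]["text"]
    return extract_sql(reply)
```

Because `extract_sql` falls back to the raw reply when the tags are missing, its output should still be validated before it is run against a database.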

Key components for text to SQL

Text-to-SQL systems involve several stages to convert natural language queries into runnable SQL:

- Natural language processing:
  - Analyze the user's input query
  - Extract key elements and intent
  - Convert to a structured format

- SQL generation:
  - Map extracted details into SQL syntax
  - Generate a valid SQL query

- Database query:
  - Run the AI-generated SQL query on the database
  - Retrieve results
  - Return results to the user
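The three stages can be sketched end to end in Python against an in-memory SQLite database. This is a minimal sketch under stated assumptions: the natural language processing stage is reduced to packaging the question with the schema into a structured prompt, and `fake_llm` is a hypothetical stand-in for a real model call that always returns the same query.

```python
import sqlite3
from typing import Callable, List, Tuple

# Hypothetical signature for the LLM call: prompt in, SQL string out.
SqlGenerator = Callable[[str], str]


def build_prompt(question: str, schema: str) -> str:
    """Stage 1: turn the user's question into a structured input for the model."""
    return f"Schema:\n{schema}\n\nQuestion: {question.strip()}\nSQL:"


def run_pipeline(question: str, conn: sqlite3.Connection, schema: str,
                 generate_sql: SqlGenerator) -> Tuple[str, List[tuple]]:
    prompt = build_prompt(question, schema)
    sql = generate_sql(prompt)            # Stage 2: SQL generation
    rows = conn.execute(sql).fetchall()   # Stage 3: run the query and retrieve results
    return sql, rows                      # results go back to the user


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    schema = "CREATE TABLE sales (product TEXT, amount REAL, sale_date TEXT)"
    conn.execute(schema)
    conn.executemany(
        "INSERT INTO sales VALUES (?, ?, ?)",
        [("widget", 10.0, "2024-05-03"), ("widget", 5.0, "2024-05-20"),
         ("gadget", 7.5, "2024-05-11")],
    )

    def fake_llm(prompt: str) -> str:
        # Placeholder: a real system would send `prompt` to an LLM.
        return "SELECT product, SUM(amount) FROM sales GROUP BY product ORDER BY product"

    sql, rows = run_pipeline("Show total sales for each product", conn, schema, fake_llm)
    print(rows)  # [('gadget', 7.5), ('widget', 15.0)]
```

Injecting the generator as a callable keeps the pipeline testable without a model and makes it easy to swap in a validation step between generation and execution.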

One prominent capability of large language models (LLMs) is code generation, including Structured Query Language (SQL) for databases. These LLMs can be used to understand the natural language question and generate a corresponding SQL query as output. The LLMs benefit from in-context learning and fine-tuning as more data is provided.

The following diagram illustrates a basic Text2SQL flow.

Prompt engineering considerations for natural language to SQL

The prompt is crucial when using LLMs to translate natural language into SQL queries, and there are several important considerations for prompt engineering.

Effective prompt engineering is key to developing natural language to SQL systems. Clear, straightforward prompts provide better instructions for the language model. Providing context that the user is requesting a SQL query, along with relevant database schema details, enables the model to translate the intent accurately. Including a few annotated examples of natural language prompts and corresponding SQL queries helps guide the model to produce syntax-compliant output. Additionally, incorporating Retrieval Augmented Generation (RAG), where the model retrieves relevant examples during processing, further improves mapping accuracy. Well-designed prompts that give the model sufficient instruction, context, examples, and retrieval augmentation are crucial for reliably translating natural language into SQL queries.

The whitepaper Enhancing Few-shot Text-to-SQL Capabilities of Large Language Models: A Study on Prompt Design Strategies presents a baseline prompt that uses a code representation of the database.
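One common way to represent a database as code is to paste its `CREATE TABLE` statements into the prompt. The following minimal Python sketch does this for a SQLite database by reading the statements SQLite stores in its `sqlite_master` catalog. The `/* ... */` comment markers, the trailing `SELECT` for the model to complete, and the function names are illustrative assumptions, not the exact prompt from the whitepaper.

```python
import sqlite3


def schema_as_code(conn: sqlite3.Connection) -> str:
    """Return the CREATE TABLE statements that SQLite keeps in its catalog."""
    rows = conn.execute(
        "SELECT sql FROM sqlite_master "
        "WHERE type = 'table' AND name NOT LIKE 'sqlite_%' ORDER BY name"
    ).fetchall()
    return "\n".join(f"{sql};" for (sql,) in rows)


def code_representation_prompt(conn: sqlite3.Connection, question: str) -> str:
    """Schema as code, then the question, then an open SELECT for the model to complete."""
    return (
        "/* Given the following database schema: */\n"
        f"{schema_as_code(conn)}\n\n"
        f"/* Answer the following: {question} */\n"
        "SELECT"
    )


if __name__ == "__main__":
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE sales (product TEXT, amount REAL, sale_date TEXT)")
    print(code_representation_prompt(conn, "Show total sales for each product last month"))
```

Reading the schema from the live catalog, rather than maintaining it by hand, keeps the prompt in sync when tables change.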

In prompt-based few-shot learning, the prompt itself provides the model with a handful of annotated examples. These demonstrate the target mapping between natural language and SQL for the model. Typically, the prompt contains around 2–3 pairs, each showing a natural language query and the equivalent SQL statement. These few examples guide the model to generate syntax-compliant SQL queries from natural language without requiring extensive training data.
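A few-shot prompt can be assembled as the schema, then the annotated question and SQL pairs, then the new question. In the following minimal Python sketch, the example pairs, the `customers` table, and the comment-style layout are hypothetical; in practice the pairs should use your own tables and SQL dialect.

```python
from typing import Sequence, Tuple

# Hypothetical annotated pairs; real ones should come from your own schema.
EXAMPLES: Sequence[Tuple[str, str]] = (
    ("How many customers are there?", "SELECT COUNT(*) FROM customers;"),
    (
        "What percentage of customers are from each region?",
        "SELECT region, 100.0 * COUNT(*) / (SELECT COUNT(*) FROM customers) AS pct "
        "FROM customers GROUP BY region;",
    ),
)


def few_shot_prompt(schema: str, question: str,
                    examples: Sequence[Tuple[str, str]] = EXAMPLES) -> str:
    """Schema, then each annotated question/SQL pair, then the new question."""
    blocks = [f"/* Database schema */\n{schema}"]
    for example_question, example_sql in examples:
        blocks.append(f"/* Question: {example_question} */\n{example_sql}")
    blocks.append(f"/* Question: {question} */\nSELECT")  # model completes the query
    return "\n\n".join(blocks)


if __name__ == "__main__":
    schema = "CREATE TABLE customers (id INTEGER, name TEXT, region TEXT);"
    print(few_shot_prompt(schema, "Which region has the most customers?"))
```

Keeping the examples in the same format as the final question lets the model imitate the pattern directly.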

Fine-tuning vs. prompt engineering

When building natural language to SQL systems, we often get into the discussion of whether fine-tuning the model is the right technique or whether effective prompt engineering is the way to go. Both approaches can be considered, and the choice depends on your requirements:

- Fine-tuning – The baseline model is pre-trained on a large general text corpus and can then undergo instruction-based fine-tuning, which uses labeled examples to improve the performance of a pre-trained foundation model on text-to-SQL. This adapts the model to the target task. Fine-tuning directly trains the model on the end task but requires many text-SQL examples. You can use supervised fine-tuning on your LLM to improve the effectiveness of text-to-SQL. For this, you can use datasets such as Spider, WikiSQL, CHASE, BIRD-SQL, or CoSQL.

- Prompt engineering – The model completes prompts designed to elicit the target SQL syntax. When generating SQL from natural language using LLMs, providing clear instructions in the prompt is important for controlling the model's output. In the prompt, annotate the different components, such as pointing to columns and the schema, and then instruct the model which type of SQL to create. These annotations act as instructions that tell the model how to format the SQL output. For example, you can point to specific table columns and instruct the model to create a MySQL query.
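The column-pointing and dialect instructions described in the prompt engineering item can be captured in a small template. The following Python sketch is hypothetical: the wording, the `orders` table, and its column descriptions are assumptions chosen to show the structure, not a prompt taken from the original example.

```python
from typing import Mapping


def annotated_prompt(table: str, columns: Mapping[str, str], question: str,
                     dialect: str = "MySQL") -> str:
    """Point the model at specific columns and name the SQL dialect explicitly."""
    column_lines = "\n".join(f"- {name}: {description}" for name, description in columns.items())
    return (
        f"You are an expert {dialect} developer.\n"
        f"Use only the table `{table}` and the columns listed below.\n"
        f"Columns:\n{column_lines}\n\n"
        f"Write one valid {dialect} query that answers this question: {question}\n"
        "Return only the SQL, with no explanation."
    )


if __name__ == "__main__":
    print(annotated_prompt(
        "orders",
        {
            "product_id": "INT, identifier of the product sold",
            "amount": "DECIMAL, order total in USD",
            "order_date": "DATE, when the order was placed",
        },
        "Show total sales for each product last month",
    ))
```

Describing each column's type and meaning gives the model the context it needs for dialect-specific details such as date functions.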

An effective approach for text-to-SQL models is to start with a baseline LLM without any task-specific fine-tuning. Well-crafted prompts can then be used to adapt and drive the base model to handle the text-to-SQL mapping. This prompt engineering allows you to develop the capability without fine-tuning. If prompt engineering on the base model doesn't achieve sufficient accuracy, fine-tuning on a small set of text-SQL examples can then be explored along with further prompt engineering.

The combination of fine-tuning and prompt engineering may be required if prompt engineering on the raw pre-trained model alone doesn't meet requirements. However, it's best to first attempt prompt engineering without fine-tuning, because this allows rapid iteration without data collection. If this fails to provide adequate performance, fine-tuning alongside prompt engineering is a viable next step. This overall approach maximizes efficiency while still allowing customization if purely prompt-based methods are insufficient.
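To decide whether prompt engineering alone achieves "sufficient accuracy", one option is to measure execution accuracy on a small labeled set: run the reference SQL and the generated SQL, and compare their results. The following Python sketch uses SQLite and compares result rows as multisets, ignoring row order; this is a simplification and not the official Spider or BIRD-SQL evaluation. `generate_sql` is a hypothetical callable wrapping your model, and the accuracy threshold you act on is up to you.

```python
import sqlite3
from collections import Counter
from typing import Callable, Sequence, Tuple


def execution_accuracy(conn: sqlite3.Connection,
                       labeled: Sequence[Tuple[str, str]],
                       generate_sql: Callable[[str], str]) -> float:
    """Fraction of questions whose generated SQL returns the same rows as the reference SQL."""
    if not labeled:
        return 0.0
    correct = 0
    for question, gold_sql in labeled:
        expected = Counter(conn.execute(gold_sql).fetchall())
        try:
            predicted = Counter(conn.execute(generate_sql(question)).fetchall())
        except sqlite3.Error:
            continue  # SQL that fails to run counts as a miss
        correct += predicted == expected  # row order is ignored, duplicates are not
    return correct / len(labeled)
```

If the score on a representative sample stays below your target after several rounds of prompt changes, that is a signal to try fine-tuning alongside prompt engineering.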

Optimization and best practices

Optimization and best practices help you use resources efficiently and achieve accurate results. They improve performance, control costs, and produce a better-quality outcome.

When developing text-to-SQL systems using LLMs, optimization techniques can improve performance and efficiency. The following are some key areas to consider:

- Caching – To improve latency, cost control, and standardization, you can cache the parsed SQL and recognized query prompts from the text-to-SQL LLM. This avoids reprocessing repeated queries.

- Monitoring – Collect logs and metrics on query parsing, prompt recognition, SQL generation, and SQL results to monitor the text-to-SQL LLM system. This provides visibility for optimization, for example updating the prompt or revisiting fine-tuning with an updated dataset.

- Materialized views vs. tables – Materialized views can simplify SQL generation and improve performance for common text-to-SQL queries. Querying tables directly may result in complex SQL and performance issues, such as repeatedly having to create indexes. Materialized views also help you avoid performance issues when the same table is used by other parts of the application at the same time.

- Refreshing data – Materialized views need to be refreshed on a schedule to keep data current for text-to-SQL queries. You can use batch or incremental refresh approaches to balance overhead.

- Central data catalog – A centralized data catalog provides a single-pane-of-glass view of an organization's data sources and can help LLMs select appropriate tables and schemas to provide more accurate responses. Vector embeddings created from a central data catalog can be supplied to an LLM along with the requested information to generate relevant and precise SQL responses.
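For the caching practice, a minimal Python sketch might key generated SQL on a normalized form of the question so trivial variants (case, extra spaces, trailing punctuation) reuse the same entry. The class name and normalization rules are assumptions; this caches the SQL text, not query results, and has no eviction or expiry, which a production cache would need.

```python
from typing import Callable, Dict


class SqlCache:
    """Cache generated SQL keyed by a normalized form of the question."""

    def __init__(self, generate_sql: Callable[[str], str]):
        self._generate_sql = generate_sql  # hypothetical wrapper around the LLM call
        self._store: Dict[str, str] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def normalize(question: str) -> str:
        # Lowercase, collapse whitespace, and drop trailing punctuation.
        return " ".join(question.lower().split()).rstrip("?.! ")

    def get_sql(self, question: str) -> str:
        key = self.normalize(question)
        if key in self._store:
            self.hits += 1
        else:
            self.misses += 1
            self._store[key] = self._generate_sql(question)  # only call the model on a miss
        return self._store[key]
```

Caching SQL rather than result rows means answers still reflect the latest data each time the cached query runs.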

By applying optimization best practices like caching, monitoring, materialized views, scheduled refreshing, and a central catalog, you can significantly improve the performance and efficiency of text-to-SQL systems using LLMs.
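For the monitoring practice, one lightweight option is to wrap generation and execution so every request leaves a record with its latency, row count, and any error. The following Python sketch assumes SQLite and a hypothetical `generate_sql` callable; the record fields and logger name are illustrative choices, and in practice the records would be shipped to your logging and metrics system.

```python
import logging
import sqlite3
import time
from dataclasses import dataclass, field
from typing import Callable, List, Optional

logger = logging.getLogger("text2sql")  # hypothetical logger name


@dataclass
class QueryRecord:
    question: str
    sql: str
    latency_ms: float
    row_count: Optional[int]
    error: Optional[str] = None


@dataclass
class MonitoredRunner:
    conn: sqlite3.Connection
    generate_sql: Callable[[str], str]  # hypothetical wrapper around the LLM call
    records: List[QueryRecord] = field(default_factory=list)

    def run(self, question: str) -> Optional[list]:
        """Generate and run SQL, recording latency, row count, and any error."""
        start = time.perf_counter()
        sql, rows, error = "", None, None
        try:
            sql = self.generate_sql(question)
            rows = self.conn.execute(sql).fetchall()
        except Exception as exc:  # capture generation and execution failures alike
            error = f"{type(exc).__name__}: {exc}"
        record = QueryRecord(
            question=question,
            sql=sql,
            latency_ms=(time.perf_counter() - start) * 1000,
            row_count=None if rows is None else len(rows),
            error=error,
        )
        self.records.append(record)
        logger.info("text2sql %s", record)
        return rows

    def error_rate(self) -> float:
        """Share of recorded requests that failed."""
        if not self.records:
            return 0.0
        return sum(r.error is not None for r in self.records) / len(self.records)
```

A rising error rate or latency on particular question types is the kind of signal that points to updating the prompt or revisiting fine-tuning.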

Architecture patterns

Let's look at some architecture patterns that can be implemented for a text to SQL workflow.

Prompt engineering

The following diagram illustrates the architecture for generating queries with an LLM using prompt engineering.

In this pattern, the user creates a prompt for few-shot learning that provides the model with annotated examples in the prompt itself, including the table and schema details and some sample queries with their results. The LLM uses the provided prompt to return AI-generated SQL, which is validated and then run against the database to get the results. This is the most straightforward pattern for getting started with prompt engineering. For this, you can use Amazon Bedrock or foundation models in Amazon SageMaker JumpStart.

On this sample, the person creates a prompt-based few-shot studying that gives the mannequin with annotated examples within the immediate itself, which incorporates the desk and schema particulars and a few pattern queries with its outcomes. The LLM makes use of the supplied immediate to return again the AI generated SQL which is validated and run towards the database to get the outcomes. That is probably the most simple sample to get began utilizing immediate engineering. For this, you need to use Amazon Bedrock which is a completely managed service that gives a alternative of high-performing basis fashions (FMs) from main AI firms through a single API, together with a broad set of capabilities you might want to construct generative AI functions with safety, privateness, and accountable AI or JumpStart Basis Fashions which gives state-of-the-art basis fashions to be used circumstances similar to content material writing, code technology, query answering, copywriting, summarization, classification, data retrieval, and extra

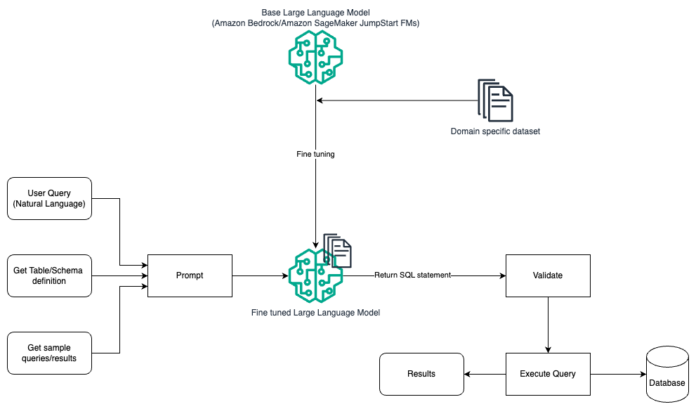
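The validation step in this pattern can take many forms. The following is a conservative sketch for SQLite: it accepts only a single `SELECT` or `WITH` statement, rejects write and admin keywords with a coarse deny-list, and uses `EXPLAIN` so SQLite compiles the statement (catching syntax errors and unknown tables or columns) without running it. The deny-list can also reject legitimate queries, for example a column named `update` or a `;` inside a string literal, and the function name and rules are assumptions. It complements, rather than replaces, running generated queries under read-only database credentials.

```python
import re
import sqlite3
from typing import Tuple

# Coarse deny-list of statements that could modify data or the database itself.
_FORBIDDEN = re.compile(
    r"\b(insert|update|delete|drop|alter|create|replace|attach|detach|pragma|vacuum)\b",
    re.IGNORECASE,
)


def validate_sql(conn: sqlite3.Connection, sql: str) -> Tuple[bool, str]:
    """Return (is_valid, reason) for AI-generated SQL before it is executed."""
    statement = sql.strip().rstrip(";").strip()
    if ";" in statement:
        return False, "multiple statements are not allowed"
    if not re.match(r"(select|with)\b", statement, re.IGNORECASE):
        return False, "only SELECT queries are allowed"
    if _FORBIDDEN.search(statement):
        return False, "query contains a write or admin keyword"
    try:
        conn.execute(f"EXPLAIN {statement}")  # compiles the query without running it
    except sqlite3.Error as exc:
        return False, f"invalid SQL: {exc}"
    return True, "ok"
```

The returned reason can be fed back to the LLM in a follow-up prompt, asking it to correct the query.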

Prompt engineering and fine-tuning

The following diagram illustrates the architecture for generating queries with an LLM using prompt engineering and fine-tuning.

This flow is similar to the previous pattern, which mostly relies on prompt engineering, but adds a fine-tuning flow on a domain-specific dataset. The fine-tuned LLM generates the SQL queries with minimal in-context examples in the prompt. For this, you can use SageMaker JumpStart to fine-tune an LLM on a domain-specific dataset in the same way you would train and deploy any model on Amazon SageMaker.
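Fine-tuning starts with converting text-SQL pairs into training records. The following Python sketch turns Spider-style records (with `db_id`, `question`, and `query` fields) into JSON Lines with `prompt` and `completion` keys. That output format, the prompt layout, and the `schemas` lookup are assumptions for illustration; the exact record format depends on the training job and model you use, so check its documentation.

```python
import json
from typing import Iterable, Mapping


def to_finetune_jsonl(examples: Iterable[Mapping[str, str]],
                      schemas: Mapping[str, str]) -> str:
    """Turn Spider-style records (db_id, question, query) into prompt/completion JSON lines."""
    lines = []
    for ex in examples:
        prompt = (
            f"Schema:\n{schemas[ex['db_id']]}\n\n"  # schema text for that database
            f"Question: {ex['question']}\nSQL:"
        )
        lines.append(json.dumps({"prompt": prompt, "completion": ex["query"]}))
    return "\n".join(lines) + "\n"
```

Using the same prompt layout at training and inference time keeps the fine-tuned model's inputs consistent, which is part of why it needs only minimal in-context examples afterward.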

Prompt engineering and RAG

The following diagram illustrates the architecture for generating queries with an LLM using prompt engineering and RAG.

In this pattern, we use Retrieval Augmented Generation with vector embeddings, created by embedding models such as Amazon Titan Embeddings or Cohere Embed on Amazon Bedrock, from a central data catalog of the databases within an organization, such as the AWS Glue Data Catalog. The vector embeddings are stored in vector databases like Vector Engine for Amazon OpenSearch Serverless, Amazon Relational Database Service (Amazon RDS) for PostgreSQL with the pgvector extension, or Amazon Kendra. LLMs use the vector embeddings to select the right database, tables, and columns faster when creating SQL queries. Using RAG is helpful when the data and related information that LLMs need to retrieve are stored in multiple separate database systems, and the LLM needs to be able to search or query data from all these systems. In this case, providing vector embeddings of a centralized or unified data catalog to the LLMs results in more accurate and comprehensive information returned by the LLMs.
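The retrieval step can be sketched as ranking catalog entries by cosine similarity to the question and passing only the top tables' schemas to the LLM. A production system would call an embedding model such as Amazon Titan Embeddings or Cohere Embed and store vectors in a vector database; to keep this Python sketch self-contained, `embed` is a toy bag-of-words stand-in (an assumption, not a real embedding model), and the catalog entries are hypothetical table descriptions of the kind you might export from a data catalog.

```python
import math
import re
from collections import Counter
from typing import Dict, List


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[token] * b[token] for token in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def top_tables(question: str, catalog: Dict[str, str], k: int = 2) -> List[str]:
    """Rank catalog entries by similarity to the question and keep the best k."""
    q = embed(question)
    ranked = sorted(catalog, key=lambda name: cosine(q, embed(catalog[name])), reverse=True)
    return ranked[:k]


if __name__ == "__main__":
    catalog = {  # hypothetical catalog descriptions
        "sales.orders": "orders table: order_id, product_id, amount, order_date; sales revenue",
        "crm.customers": "customers table: customer_id, name, region",
        "hr.employees": "employees table: employee_id, department, salary",
    }
    print(top_tables("What percentage of customers are from each region?", catalog, k=1))
```

Only the schemas of the selected tables then go into the prompt, which keeps it short even when the catalog spans many database systems.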

Conclusion

In this post, we discussed how to generate value from enterprise data using natural language to SQL generation. We looked into key components, optimization, and best practices. We also covered architecture patterns, from basic prompt engineering to fine-tuning and RAG. To learn more, refer to Amazon Bedrock to easily build and scale generative AI applications with foundation models.

About the Authors

Randy DeFauw is a Senior Principal Solutions Architect at AWS. He holds an MSEE from the University of Michigan, where he worked on computer vision for autonomous vehicles. He also holds an MBA from Colorado State University. Randy has held a variety of positions in the technology space, ranging from software engineering to product management. He entered the Big Data space in 2013 and continues to explore that area. He is actively working on projects in the ML space and has presented at numerous conferences, including Strata and GlueCon.

Nitin Eusebius is a Sr. Enterprise Solutions Architect at AWS, experienced in Software Engineering, Enterprise Architecture, and AI/ML. He is deeply passionate about exploring the possibilities of generative AI. He collaborates with customers to help them build well-architected applications on the AWS platform, and is dedicated to solving technology challenges and assisting with their cloud journey.

Arghya Banerjee is a Sr. Solutions Architect at AWS in the San Francisco Bay Area, focused on helping customers adopt and use the AWS Cloud. Arghya focuses on Big Data, Data Lakes, Streaming, Batch Analytics, and AI/ML services and technologies.