The advent of large language models (LLMs) like GPT and LLaMA has marked a significant milestone. These models have become indispensable tools for various natural language processing tasks. However, creating these models from scratch involves considerable costs, immense computational resources, and substantial energy consumption. This has led to an increasing interest in developing cost-effective alternatives. One such approach is the fusion of existing pre-trained LLMs into a more capable and efficient model. This strategy not only reduces resource expenditure but also harnesses the collective strengths of various models.

Merging multiple LLMs is challenging, primarily because of their diversity in architecture. Simply blending their weights is not feasible, so a more nuanced approach is needed. The goal of knowledge fusion in LLMs is to amalgamate these models into a new, more powerful one, thereby maximizing the strengths and minimizing the costs associated with individual models. This fusion method has the potential to enhance performance across a spectrum of tasks, providing a versatile tool adaptable to various applications.

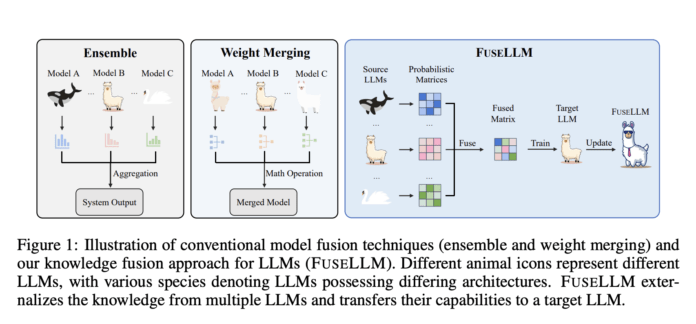

The conventional methods for integrating language models typically involve ensemble techniques and weight merging. Ensemble methods, which aggregate outputs from multiple models, face practical challenges with LLMs because every model must be kept in memory and run at inference time. Weight merging, on the other hand, often fails to yield optimal results when applied to models with significant differences in their parameter spaces. These limitations call for a different approach to combining the capabilities of various LLMs effectively.
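The contrast between the two conventional methods can be made concrete with a toy sketch. The function names `ensemble_predict` and `merge_weights` are hypothetical, and the "models" are stand-in functions that return a fixed distribution over a three-token vocabulary; this is an illustration of the general ideas, not code from the research.

```python
def ensemble_predict(models, x):
    """Average the output distributions of several models for one input."""
    outputs = [m(x) for m in models]  # every model has to run at inference time
    n = len(outputs)
    return [sum(p[i] for p in outputs) / n for i in range(len(outputs[0]))]


def merge_weights(weight_sets):
    """Element-wise average of parameter vectors; only valid for identical shapes."""
    length = len(weight_sets[0])
    if any(len(w) != length for w in weight_sets):
        raise ValueError("weight merging needs models with identical parameter shapes")
    return [sum(ws) / len(weight_sets) for ws in zip(*weight_sets)]


# Two toy "models" that return a distribution over a 3-token vocabulary.
model_a = lambda x: [0.7, 0.2, 0.1]
model_b = lambda x: [0.1, 0.6, 0.3]

avg = ensemble_predict([model_a, model_b], "hello")
merged = merge_weights([[1.0, 2.0], [3.0, 4.0]])
```

The ensemble keeps all models alive at inference time, while weight merging breaks down as soon as the parameter shapes differ, which is the situation with architecturally distinct LLMs.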

In response to these challenges, researchers from Sun Yat-sen University and Tencent AI Lab introduced the concept of knowledge fusion for LLMs. This method leverages the generative distributions of source LLMs, externalizing their knowledge and strengths and transferring them to a target LLM through lightweight continual training. The core of this approach lies in aligning and fusing the probabilistic distributions generated by the source LLMs. This process involves developing new techniques for aligning tokenizations and exploring methods for fusing probability distributions. A significant emphasis is placed on minimizing the divergence between the probabilistic distributions of the target and source LLMs.
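One way to picture the training objective described above is as a weighted sum of the usual next-token loss and a term that pulls the target model toward the fused source distribution. The sketch below is a minimal illustration under stated assumptions: the function names, the weighting parameter `lam`, and its default value are hypothetical and not taken from this article, and the distributions are toy values for a single token position.

```python
import math


def cross_entropy(reference_probs, predicted_probs, eps=1e-12):
    """H(reference, predicted) = -sum_i reference_i * log(predicted_i)."""
    return -sum(r * math.log(max(p, eps))
                for r, p in zip(reference_probs, predicted_probs))


def combined_loss(gold_one_hot, fused_probs, target_probs, lam=0.9):
    """Hypothetical objective: lam * language-modeling loss + (1 - lam) * fusion loss.

    Minimizing H(fused, target) with respect to the target is equivalent to
    minimizing KL(fused || target), because the two differ only by the entropy
    of the fused distribution, which does not depend on the target model.
    """
    clm = cross_entropy(gold_one_hot, target_probs)    # fit the gold next token
    fusion = cross_entropy(fused_probs, target_probs)  # match the fused sources
    return lam * clm + (1 - lam) * fusion


# Toy distributions over a 3-token vocabulary for one position.
gold = [0.0, 1.0, 0.0]    # the true next token is index 1
fused = [0.1, 0.8, 0.1]   # fused distribution from the source LLMs
target = [0.2, 0.6, 0.2]  # current prediction of the target LLM

loss = combined_loss(gold, fused, target)
```

In an actual training run the same quantity would be averaged over all token positions and minimized by gradient descent on the target model's parameters.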

Implementing this methodology is intricate, requiring a detailed alignment of tokenizations across different LLMs. This is crucial for the effective fusion of knowledge, as it ensures accurate mapping of probabilistic distribution matrices. The fusion process involves evaluating the quality of different LLMs and assigning varying levels of importance to their respective distribution matrices based on their prediction quality. This nuanced approach allows the fused model to utilize the collective knowledge while preserving the unique strengths of each source LLM.
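The two steps described above, alignment and quality-weighted fusion, can be sketched on toy data. Everything here is an illustrative assumption rather than the researchers' implementation: the function names are hypothetical, alignment is simplified to mapping each source token to the closest target token by edit distance, and prediction quality is measured by cross-entropy against the gold token.

```python
import math


def edit_distance(a, b):
    """Levenshtein distance between two strings."""
    prev = list(range(len(b) + 1))
    for i, ca in enumerate(a, 1):
        cur = [i]
        for j, cb in enumerate(b, 1):
            cur.append(min(prev[j] + 1, cur[j - 1] + 1, prev[j - 1] + (ca != cb)))
        prev = cur
    return prev[-1]


def align_to_target_vocab(source_probs, source_vocab, target_vocab):
    """Move each source token's probability onto the closest target token."""
    aligned = [0.0] * len(target_vocab)
    for tok, p in zip(source_vocab, source_probs):
        j = min(range(len(target_vocab)),
                key=lambda k: edit_distance(tok, target_vocab[k]))
        aligned[j] += p
    return aligned


def cross_entropy(gold_index, probs, eps=1e-12):
    """Cross-entropy against a one-hot gold label: -log p(gold)."""
    return -math.log(max(probs[gold_index], eps))


def fuse_min_ce(distributions, gold_index):
    """Keep only the distribution that predicts the gold token best."""
    return min(distributions, key=lambda p: cross_entropy(gold_index, p))


def fuse_weighted(distributions, gold_index):
    """Weighted average; lower cross-entropy gives a larger weight."""
    scores = [math.exp(-cross_entropy(gold_index, p)) for p in distributions]
    total = sum(scores)
    weights = [s / total for s in scores]
    return [sum(w * p[i] for w, p in zip(weights, distributions))
            for i in range(len(distributions[0]))]


target_vocab = ["cat", "dog", "the"]
# Source A uses a different vocabulary and must be aligned first.
aligned_a = align_to_target_vocab([0.2, 0.7, 0.1], ["the", "Cat", "dogs"], target_vocab)
source_b = [0.2, 0.5, 0.3]  # assumed to be already expressed in the target vocabulary

best = fuse_min_ce([aligned_a, source_b], gold_index=0)
fused = fuse_weighted([aligned_a, source_b], gold_index=0)
```

Selecting the single best distribution keeps one source's prediction untouched, while the weighted average blends all sources; both rules give more influence to the model that predicts the gold token more accurately.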

The performance of FuseLLM was tested using three popular open-source LLMs with distinct architectures: Llama-2, MPT, and OpenLLaMA. The evaluation encompassed various benchmarks, including reasoning, commonsense, and code generation tasks. The fused model outperformed each source LLM and the baseline in most tasks. The study demonstrated substantial improvements in various capabilities, highlighting the effectiveness of FuseLLM in integrating the collective strengths of individual LLMs.

The research presents several key insights:

- FuseLLM presents an effective method for LLM fusion, surpassing traditional ensemble and weight-merging techniques.

- The fused model showcases superior capabilities in reasoning, commonsense, and code generation tasks.

- The approach opens up new possibilities for developing powerful and efficient LLMs by leveraging existing models.

In conclusion, the study of knowledge fusion in LLMs introduces a pioneering approach to developing language models. By combining the capabilities of diverse LLMs, this method offers an effective solution to the challenges of resource-intensive model training. The findings from this research demonstrate the effectiveness of the FuseLLM approach and pave the way for future developments in natural language processing.

Check out the Paper and GitHub. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I am a consulting intern at Marktechpost and soon to be a management trainee at American Express. I am currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I am passionate about technology and want to create new products that make a difference.