Recently, GPT-4 and other Large Language Models (LLMs) have demonstrated an impressive capacity for Natural Language Processing (NLP) and for memorizing extensive amounts of knowledge, possibly even more than humans. The success of LLMs in handling massive amounts of data has led to the development of models of the generative process that are more concise, coherent, and interpretable: a “world model,” if you will.

Further insights come from LLMs’ ability to understand and control intricate strategic contexts. For example, prior research has shown that transformers trained to predict the next token in board games such as Othello build detailed models of the current game state. Researchers have also found that LLMs can learn representations that reflect perceptual and symbolic concepts and can track subjects’ boolean states within certain situations. With this two-pronged capability, LLMs can store vast amounts of knowledge and organize it in ways that mimic human thought processes, making them ideal knowledge bases.

Factual errors, the potential to generate harmful content, and outdated knowledge are some of the limitations LLMs inherit from their training. Retraining a model to fix these problems is costly and time-consuming. In response, LLM-centric knowledge editing approaches have proliferated in recent years, allowing efficient, on-the-fly adjustments to a model. Understanding how LLMs represent and process knowledge is crucial for ensuring the fairness and safety of Artificial Intelligence (AI) systems; knowledge editing targets specific areas for change without affecting overall performance. The primary goal of this work is to survey the history and current state of knowledge editing for LLMs.

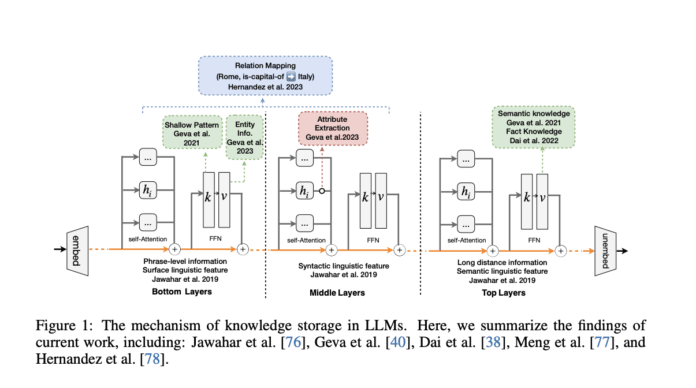

New research by a team from Zhejiang University, the National University of Singapore, the University of California, Ant Group, and Alibaba Group first provides an overview of the Transformer architecture, the way LLMs store knowledge, and related approaches such as parameter-efficient fine-tuning, knowledge augmentation, continual learning, and machine unlearning. The team then lays the groundwork, formally defines the knowledge editing problem, and proposes a new taxonomy that draws on theories from education and cognitive science to offer a coherent perspective on knowledge editing methods. Specifically, they classify knowledge editing techniques for LLMs into three groups: editing intrinsic knowledge, merging knowledge into the model, and resorting to external knowledge.
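
The article does not reproduce the paper’s formal definition. One common way the knowledge-editing literature states the problem, sketched here with our own notation, is: given an original model $f_\theta$ and an edit descriptor $(x_e, y_e)$, produce an edited model $f_{\theta_e}$ that returns the new answer for every input in the edit’s scope and behaves exactly like the original model everywhere else.

$$
f_{\theta_e}(x) =
\begin{cases}
y_e & \text{if } x \in I(x_e, y_e), \\
f_{\theta}(x) & \text{if } x \in O(x_e, y_e),
\end{cases}
$$

Here $I(x_e, y_e)$ is the in-scope set ($x_e$ together with closely related inputs such as paraphrases) and $O(x_e, y_e)$ is its complement, the out-of-scope inputs that the edit should leave untouched.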

The researchers present their classification criteria in the paper as follows:

- Resorting to External Knowledge: This approach is analogous to the recognition phase of human cognition, in which a first encounter with new knowledge requires exposure to that knowledge within an appropriate context.

- Merging Knowledge into the Model: By drawing connections between the incoming knowledge and the model’s existing knowledge, this approach resembles the association phase of human cognition. These methods combine a learned knowledge representation with the model’s output or intermediate output, or substitute it for them.

- Editing Intrinsic Knowledge: Revising knowledge in this way is similar to reaching the “mastery phase” of learning something new. The model fully integrates the knowledge into its parameters by modifying the LLM’s weights.
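
The article does not describe any specific editing algorithm. To make the third category concrete, here is a minimal sketch of one well-known family of weight-editing methods, rank-one “locate-then-edit” updates in the spirit of ROME. It assumes a single linear layer acts as a key-value memory (a key vector `k` retrieves the value `W k`) and uses an identity covariance, which is a simplification. The helper names and all numbers are hypothetical and chosen for illustration.

```python
from typing import List

Matrix = List[List[float]]  # row-major, shape (d_out, d_in)
Vector = List[float]


def matvec(w: Matrix, x: Vector) -> Vector:
    """Multiply a (d_out x d_in) matrix by a length-d_in vector."""
    return [sum(w_ij * x_j for w_ij, x_j in zip(row, x)) for row in w]


def rank_one_edit(w: Matrix, k_star: Vector, v_star: Vector) -> Matrix:
    """Return W' = W + (v* - W k*) k*^T / (k*^T k*).

    After the update W' k* == v* (the new fact is stored), and for any key k
    orthogonal to k* the output W' k equals W k (unrelated facts are untouched).
    This is the identity-covariance simplification of a ROME-style edit.
    """
    residual = [v - wk for v, wk in zip(v_star, matvec(w, k_star))]
    norm_sq = sum(k * k for k in k_star)
    return [
        [w_ij + r_i * k_j / norm_sq for w_ij, k_j in zip(row, k_star)]
        for row, r_i in zip(w, residual)
    ]


# Toy memory with made-up numbers: each key selects one stored value.
W = [[1.0, 0.0, 0.0],
     [0.0, 1.0, 0.0]]
k_subject = [1.0, 0.0, 0.0]  # key for the fact being edited
k_other = [0.0, 1.0, 0.0]    # key for an unrelated fact, orthogonal to k_subject
v_new = [0.0, 5.0]           # value we want the edited fact to produce

W_edited = rank_one_edit(W, k_subject, v_new)
print(matvec(W_edited, k_subject))  # [0.0, 5.0]
print(matvec(W_edited, k_other))    # [0.0, 1.0]
```

Because the update only moves `W` along the direction `k_star`, it is the smallest change (in Frobenius norm) that stores the new value. Real methods such as ROME additionally weight this direction by statistics of the keys the layer normally sees.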

The researchers then run thorough experiments on twelve natural language processing datasets, with a design that carefully considers performance, usability, underlying mechanisms, and other aspects.

To provide a fair comparison and show how well these methods work in knowledge insertion, modification, and erasure settings, the researchers build a new benchmark called KnowEdit and report empirical results for state-of-the-art LLM knowledge editing methods.
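
The article does not list KnowEdit’s metrics. Knowledge-editing evaluations commonly report at least edit success (does the edited model give the new answer on the edit prompt?) and locality (are answers to unrelated prompts unchanged?). The sketch below computes both, assuming a hypothetical interface in which a “model” is any function from a prompt string to an answer string. The facts are made up, and KnowEdit’s exact definitions may differ, for example by scoring token-level accuracy instead of exact string matches.

```python
from typing import Callable, List, Optional, Tuple

# Hypothetical interface: a "model" is any function from prompt to answer.
Model = Callable[[str], Optional[str]]


def edit_success(post: Model, edits: List[Tuple[str, str]]) -> float:
    """Fraction of edit prompts for which the edited model returns the new target."""
    hits = sum(post(prompt) == target for prompt, target in edits)
    return hits / len(edits)


def locality(pre: Model, post: Model, unrelated: List[str]) -> float:
    """Fraction of out-of-scope prompts whose answer is unchanged by the edit."""
    unchanged = sum(pre(prompt) == post(prompt) for prompt in unrelated)
    return unchanged / len(unrelated)


# Toy lookup-table "models" with made-up facts, for illustration only.
before = {
    "Who leads ExampleCorp?": "Alice",
    "Capital of France?": "Paris",
    "Author of Hamlet?": "Shakespeare",
}
after = {**before, "Who leads ExampleCorp?": "Bob"}  # clean edit
sloppy = {**after, "Author of Hamlet?": "Bob"}       # edit that leaks into an unrelated fact

edits = [("Who leads ExampleCorp?", "Bob")]
unrelated = ["Capital of France?", "Author of Hamlet?"]

print(edit_success(after.get, edits))               # 1.0
print(locality(before.get, after.get, unrelated))   # 1.0
print(locality(before.get, sloppy.get, unrelated))  # 0.5
```

The `sloppy` model stores the new fact just as well as `after`, which is why edit success alone is not enough: only the locality score reveals that it also corrupted an unrelated answer.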

The researchers show how knowledge editing affects both general tasks and multi-task knowledge editing, suggesting that modern knowledge editing methods successfully update facts with little impact on the model’s cognitive abilities and adaptability across different knowledge domains. In edited LLMs, they find that the changes are heavily concentrated in a few columns of the value layer. They also suggest that LLMs may answer questions either by retrieving knowledge from their pre-training corpus or through a multi-step reasoning process.
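
The article does not say how this concentration was measured. One simple way to check whether an edit’s weight change is concentrated in a few columns is to compare the per-column norms of the update `after - before`. The sketch below does this on made-up matrices; treating a plain weight matrix as “the value layer” and using L2 column norms are our assumptions, not the paper’s procedure. For a rank-one update `r k^T`, column `j` changes by `|k_j| * ||r||`, so such edits concentrate wherever the key has large entries.

```python
import math
from typing import List

Matrix = List[List[float]]  # row-major: weights[row][column]


def column_change_norms(before: Matrix, after: Matrix) -> List[float]:
    """L2 norm of each column of the update delta = after - before."""
    n_cols = len(before[0])
    return [
        math.sqrt(sum((a_row[j] - b_row[j]) ** 2 for b_row, a_row in zip(before, after)))
        for j in range(n_cols)
    ]


def top_changed_columns(before: Matrix, after: Matrix, k: int) -> List[int]:
    """Indices of the k columns whose weights moved the most, largest first."""
    norms = column_change_norms(before, after)
    return sorted(range(len(norms)), key=lambda j: norms[j], reverse=True)[:k]


def concentration(before: Matrix, after: Matrix, k: int) -> float:
    """Share of the total squared change that falls in the top-k columns."""
    sq = [n * n for n in column_change_norms(before, after)]
    top = sorted(sq, reverse=True)[:k]
    return sum(top) / sum(sq)


# Made-up 2x4 weight matrices: the "edit" touched mostly column 1.
before = [[0.0, 0.0, 0.0, 0.0],
          [0.0, 0.0, 0.0, 0.0]]
after = [[0.0, 3.0, 0.0, 0.5],
         [0.0, 4.0, 0.0, 0.0]]

print(column_change_norms(before, after))         # [0.0, 5.0, 0.0, 0.5]
print(top_changed_columns(before, after, 2))      # [1, 3]
print(round(concentration(before, after, 1), 4))  # 0.9901
```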

The findings suggest that knowledge-locating techniques, such as causal analysis, focus on areas related to the entity in question rather than the entire factual context. The team also explores the potential for knowledge editing in LLMs to have unforeseen repercussions, an important factor that deserves thorough consideration.

Finally, they explore the wide range of applications for knowledge editing and its prospects from multiple angles. These applications include trustworthy AI, efficient machine learning, AI-generated content (AIGC), and personalized agents in human-computer interaction. The researchers hope this study will spark new lines of inquiry into LLMs with an eye toward efficiency and creativity. They have publicly released all of their resources, including code, data splits, and trained model checkpoints, to facilitate and encourage further study.

Dhanshree Shenwai is a Computer Science Engineer with solid experience at FinTech companies covering the Financial, Cards & Payments, and Banking domains, and a keen interest in applications of AI. She is enthusiastic about exploring new technologies and advancements in today’s evolving world that make everyone’s life easier.