The development of foundation models such as Large Language Models (LLMs), Vision Transformers (ViTs), and multimodal models marks a significant milestone. These models, known for their versatility and adaptability, are reshaping how AI applications are built. However, their growth is accompanied by a considerable increase in resource demands, which makes developing and deploying them a resource-intensive task.

The primary challenge in deploying these foundation models is their substantial resource requirements. Training and maintaining models such as LLaMa-2-70B involves immense computational power and energy, leading to high costs and significant environmental impact. This resource-intensive nature limits accessibility, confining the ability to train and deploy these models to organizations with substantial computational resources.
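To give a rough sense of scale, the sketch below estimates how much memory a model's parameters alone occupy. It is a back-of-the-envelope illustration, not a figure from the survey: the function names are hypothetical, the 70 billion parameter count is inferred from the model name, and the 16 bytes per parameter used for training is a common rule of thumb for mixed-precision training with the Adam optimizer (half-precision weights and gradients plus full-precision master weights and two optimizer moments). Activations, caches, and framework overhead are ignored.

```python
# Rough parameter-memory estimate. All names here are illustrative.

BYTES_PER_PARAM = {
    "fp32": 4,  # 32-bit floating point
    "fp16": 2,  # 16-bit floating point (bf16 is the same size)
    "int8": 1,  # 8-bit quantized weights
}

# Assumed rule of thumb for mixed-precision Adam training:
# 2 (fp16 weights) + 2 (fp16 gradients) + 4 (fp32 master weights)
# + 4 + 4 (two fp32 Adam moments) = 16 bytes per parameter.
TRAINING_BYTES_PER_PARAM = 16


def weight_memory_gb(num_params: float, precision: str) -> float:
    """Memory needed just to hold the weights, in gigabytes (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[precision] / 1e9


def training_state_memory_gb(num_params: float) -> float:
    """Memory for weights, gradients, and optimizer state during training."""
    return num_params * TRAINING_BYTES_PER_PARAM / 1e9


if __name__ == "__main__":
    params = 70e9  # assumed parameter count for a "70B" model
    for precision in BYTES_PER_PARAM:
        print(f"{precision}: {weight_memory_gb(params, precision):.0f} GB")
    print(f"training state: {training_state_memory_gb(params):.0f} GB")
```

Under these assumptions, even inference in half precision needs more memory than a single commodity GPU typically offers, which is one reason such models tend to stay with well-resourced organizations.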

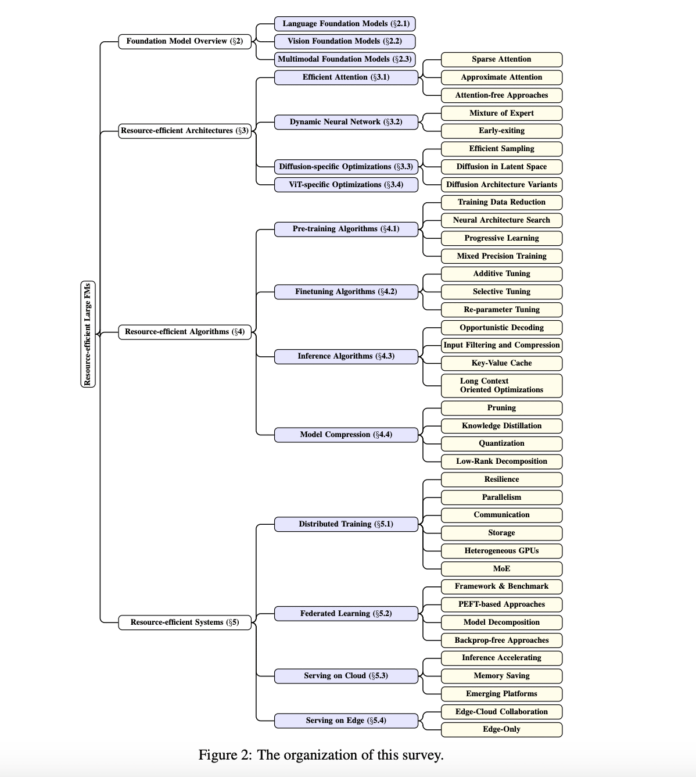

In response to these challenges, significant research effort is directed toward developing more resource-efficient strategies. These efforts encompass algorithm optimization, system-level innovations, and novel architecture designs. The goal is to minimize the resource footprint without compromising the models’ performance and capabilities. This includes exploring techniques to improve algorithmic efficiency, enhance data management, and rethink system architectures to reduce the computational load.

The survey by researchers from Beijing University of Posts and Telecommunications, Peking University, and Tsinghua University delves into the evolution of language foundation models, detailing their architectural developments and the downstream tasks they perform. It highlights the transformative impact of the Transformer architecture, attention mechanisms, and the encoder-decoder structure in language models. The survey also sheds light on speech foundation models, which can derive meaningful representations from raw audio signals, and on their computational costs.
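For readers unfamiliar with attention, the following is a minimal pure-Python sketch of scaled dot-product attention, the standard operation at the heart of the Transformer. It is an illustration written for this article, not code from the survey, and the function names are hypothetical. It also shows why attention is costly: every query is scored against every key, so the number of scores grows with the square of the sequence length.

```python
import math


def softmax(scores):
    """Numerically stable softmax over a list of floats."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values):
    """Scaled dot-product attention over lists of equal-length vectors."""
    dim = len(keys[0])
    outputs = []
    for q in queries:
        # One score per key: dot product scaled by sqrt of the key dimension.
        scores = [
            sum(qi * ki for qi, ki in zip(q, k)) / math.sqrt(dim)
            for k in keys
        ]
        weights = softmax(scores)
        # Output is the weighted average of the value vectors.
        out = [
            sum(w * v[j] for w, v in zip(weights, values))
            for j in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


def attention_score_count(seq_len):
    """Number of query-key scores in self-attention over seq_len tokens."""
    return seq_len * seq_len
```

Doubling the sequence length quadruples the number of scores, which is why much efficiency work targets the attention step.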

Vision foundation models are another focus area. Encoder-only architectures like ViT, DeiT, and SegFormer have significantly advanced the field of computer vision, demonstrating impressive results in image classification and segmentation. Despite their resource demands, these models have pushed the boundaries of self-supervised pre-training in vision.

A growing area of interest is multimodal foundation models, which aim to encode data from different modalities into a unified latent space. These models typically employ transformer encoders for data encoding or decoders for cross-modal generation. The survey discusses key architectures, such as multi-encoder and encoder-decoder models, representative models in cross-modal generation, and their cost analysis.

The paper presents an in-depth look at the current state and future directions of resource-efficient algorithms and systems for foundation models. It provides valuable insights into the strategies employed to address the problems posed by these models’ large resource footprint, and it underscores the importance of continued innovation to make foundation models more accessible and sustainable.

Key takeaways from the survey include:

- Increased resource demands mark the evolution of foundation models.

- Innovative strategies are being developed to enhance the efficiency of these models.

- The goal is to minimize the resource footprint while maintaining performance.

- Efforts span algorithm optimization, data management, and system architecture innovation.

- The paper highlights the impact of these models in the language, speech, and vision domains.

Check out the Paper. All credit for this research goes to the researchers of this project.

Hello, my name is Adnan Hassan. I’m a consulting intern at Marktechpost and soon to be a management trainee at American Express. I’m currently pursuing a dual degree at the Indian Institute of Technology, Kharagpur. I’m passionate about technology and want to create new products that make a difference.